28th Annual Computational Neuroscience Meeting: CNS*2019

K1 Brain networks, adolescence and schizophrenia

Ed Bullmore

University of Cambridge, Department of Psychiatry, Cambridge, United Kingdom

Correspondence: Ed Bullmore (etb23@cam.ac.uk)

BMC Neuroscience 2019, 20(Suppl 1):K1

The adolescent transition from childhood to young adulthood is an important phase of human brain development and a period of increased risk for incidence of psychotic disorders. I will review some of the recent neuroimaging discoveries concerning adolescent development, focusing on an accelerated longitudinal study of ~300 healthy young people (aged 14–25 years) each scanned twice using MRI. Structural MRI, including putative markers of myelination, indicates changes in local anatomy and connectivity of association cortical network hubs during adolescence. Functional MRI indicates strengthening of initially weak connectivity of subcortical nuclei and association cortex. I will also discuss the relationships between intra-cortical myelination, brain networks and anatomical patterns of expression of risk genes for schizophrenia.

K2 Neural circuits for mental simulation

Kenji Doya

Okinawa Institute of Science and Technology, Neural Computation Unit, Okinawa, Japan

Correspondence: Kenji Doya (doya@oist.jp)

BMC Neuroscience 2019, 20(Suppl 1):K2

The basic process of decision making is often explained by learning the values of possible actions through reinforcement learning. In our daily life, however, we rarely rely on pure trial and error; instead, we use prior knowledge about the world to imagine what situation will arise before taking an action. How such “mental simulation” is implemented by neural circuits, and how these circuits are regulated to avoid delusion, are exciting new topics in neuroscience. Here I report our work with functional MRI in humans and two-photon imaging in mice to clarify how action-dependent state transition models are learned and utilized in the brain.

K3 One network, many states: varying the excitability of the cerebral cortex

Maria V. Sanchez-Vives

IDIBAPS and ICREA, Systems Neuroscience, Barcelona, Spain

Correspondence: Maria V. Sanchez-Vives (msanche3@clinic.cat)

BMC Neuroscience 2019, 20(Suppl 1):K3

In the transition from deep sleep, anesthesia or coma states to wakefulness, there are profound changes in cortical interactions in both the temporal and the spatial domains. In a state of low excitability, the cortical network, both in vivo and in vitro, expresses its “default activity pattern”, slow oscillations [1], a state of low complexity and high synchronization. Understanding the multiscale mechanisms that enable the emergence of the complex brain dynamics associated with wakefulness and cognition, departing from low-complexity, highly synchronized states such as sleep, is key to the development of reliable monitors of brain state transitions and consciousness levels during physiological and pathological states. In this presentation I will discuss different experimental and computational approaches aimed at unraveling how the complexity of activity patterns emerges in the cortical network as it transitions across different brain states. Strategies such as varying anesthesia levels or sleep/awake transitions in vivo, or progressive variations in excitability by variable ionic levels, GABAergic antagonists, potassium blockers or electric fields in vitro, reveal some of the common features of these different cortical states, the gradual or abrupt transitions between them, and the emergence of dynamical richness, providing hints as to the underlying mechanisms.

Reference

- 1. Sanchez-Vives MV, Massimini M, Mattia M. Shaping the default activity pattern of the cortical network. Neuron 2017, 94(5), 993–1001.

K4 Neural circuits for flexible memory and navigation

Ila Fiete

Massachusetts Institute of Technology, McGovern Institute, Cambridge, United States of America

Correspondence: Ila Fiete (fiete@mit.edu)

BMC Neuroscience 2019, 20(Suppl 1):K4

I will discuss the problems of memory and navigation from a computational and functional perspective: What is difficult about these problems, which features of the neural circuit architecture and dynamics enable their solutions, and how the neural solutions are uniquely robust, flexible, and efficient.

F1 The geometry of abstraction in hippocampus and pre-frontal cortex

Silvia Bernardi1, Marcus K. Benna2, Mattia Rigotti3, Jérôme Munuera4, Stefano Fusi1, C. Daniel Salzman1

1Columbia University, Zuckerman Mind Brain Behavior Institute, New York, United States of America; 2Columbia University, Center for Theoretical Neuroscience, Zuckerman Mind Brain Behavior Institute, New York, NY, United States of America; 3IBM Research AI, Yorktown Heights, United States of America; 4Columbia University, Centre National de la Recherche Scientifique (CNRS), École Normale Supérieure, Paris, France

Correspondence: Marcus K. Benna (mkb2162@columbia.edu)

BMC Neuroscience 2019, 20(Suppl 1):F1

Abstraction can be defined as a cognitive process that finds a common feature—an abstract variable, or concept—shared by a number of examples. Knowledge of an abstract variable enables generalization to new examples based upon old ones. Neuronal ensembles could represent abstract variables by discarding all information about specific examples, but this allows for representation of only one variable. Here we show how to construct neural representations that encode multiple abstract variables simultaneously, and we characterize their geometry. Representations conforming to this geometry were observed in dorsolateral pre-frontal cortex, anterior cingulate cortex, and the hippocampus in monkeys performing a serial reversal-learning task. These neural representations allow for generalization, a signature of abstraction, and similar representations are observed in a simulated multi-layer neural network trained with back-propagation. These findings provide a novel framework for characterizing how different brain areas represent abstract variables, which is critical for flexible conceptual generalization and deductive reasoning.
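The link between geometry and generalization can be illustrated with a toy example. The following is a minimal sketch, not the authors' analysis: it assumes two binary variables A and B, hypothetical two-dimensional "population" patterns, and a simple mean-difference linear readout. A decoder for A is trained only on the conditions with B = 0 and tested on the held-out conditions with B = 1, so it succeeds only if the coding direction for A is shared across values of B.

```python
import random

def make_trials(center, n, noise, rng):
    """Draw n noisy population responses around one condition's mean pattern."""
    return [[c + rng.gauss(0.0, noise) for c in center] for _ in range(n)]

def mean_vec(rows):
    """Component-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]

def fit_decoder(class0, class1):
    """Linear readout: project onto the difference of class means,
    with the threshold halfway between the two means."""
    m0, m1 = mean_vec(class0), mean_vec(class1)
    w = [b - a for a, b in zip(m0, m1)]
    bias = -sum(wi * (a + b) / 2.0 for wi, a, b in zip(w, m0, m1))
    return w, bias

def accuracy(decoder, class0, class1):
    """Fraction of trials the readout labels correctly."""
    w, bias = decoder

    def predict(x):
        return 1 if sum(wi * xi for wi, xi in zip(w, x)) + bias > 0 else 0

    hits = sum(predict(x) == 0 for x in class0)
    hits += sum(predict(x) == 1 for x in class1)
    return hits / (len(class0) + len(class1))

def cross_condition_accuracy(centers, rng, n=200, noise=0.2):
    """Train a decoder for A on the B=0 conditions only,
    then test it on the held-out B=1 conditions."""
    trials = {k: make_trials(v, n, noise, rng) for k, v in centers.items()}
    decoder = fit_decoder(trials[(0, 0)], trials[(1, 0)])
    return accuracy(decoder, trials[(0, 1)], trials[(1, 1)])

rng = random.Random(0)
# Keys are (A, B). Hypothetical geometry in which A and B lie on separate axes.
abstract = {(0, 0): [0, 0], (1, 0): [1, 0], (0, 1): [0, 1], (1, 1): [1, 1]}
# Hypothetical geometry in which the coding direction for A flips with B.
entangled = {(0, 0): [0, 0], (1, 0): [1, 0], (0, 1): [1, 1], (1, 1): [0, 1]}

acc_abstract = cross_condition_accuracy(abstract, rng)
acc_entangled = cross_condition_accuracy(entangled, rng)
print(acc_abstract, acc_entangled)
```

In the first geometry the readout transfers to the unseen conditions, whereas in the second it fails systematically, even though both geometries contain the same four conditions. The condition means, noise level and trial counts are illustrative assumptions only.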

F2 Signatures of network structure in timescales of spontaneous activity

Roxana Zeraati1, Nicholas Steinmetz2, Tirin Moore3, Tatiana Engel4, Anna Levina5

1University of Tübingen, International Max Planck Research School for Cognitive and System Neuroscience, Tübingen, Germany; 2University of Washington, Department of Biological Structure, Seattle, United States of America; 3Stanford University, Department of Neurobiology, Stanford, California, United States of America; 4Cold Spring Harbor Laboratory, Cold Spring Harbor, NY, United States of America; 5University of Tübingen, Tübingen, Germany

Correspondence: Roxana Zeraati (roxana.zeraati@tuebingen.mpg.de)

BMC Neuroscience 2019, 20(Suppl 1):F2

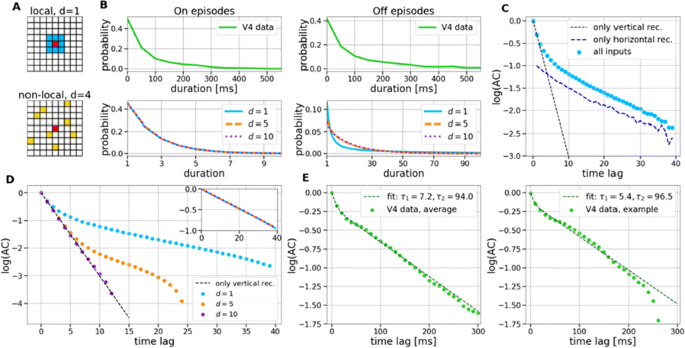

Cortical networks are spontaneously active. Timescales of these intrinsic fluctuations were suggested to reflect the network’s specialization for task-relevant computations. However, how these timescales arise from the spatial network structure is unknown. Spontaneous cortical activity unfolds across different spatial scales. On a local scale of individual columns, ongoing activity spontaneously transitions between episodes of vigorous (On) and faint (Off) spiking, synchronously across cortical layers. On a wider spatial scale, activity propagates as cascades of elevated firing across many columns, characterized by the branching ratio, defined as the average number of units activated by each active unit. We asked to what extent the timescales of spontaneous activity reflect the dynamics on these two spatial scales and the underlying network structure. To this end, we developed a branching network model capable of capturing both the local On-Off dynamics and the global activity propagation. Each unit in the model represents a cortical column, which has spatially structured connections to other columns (Fig. 1A). The columns stochastically transition between On and Off states. Transitions to the On state are driven by stochastic external inputs and by excitatory inputs from the neighboring columns (horizontal recurrent input). An On state can persist due to a self-excitation representing strong recurrent connections within one column (vertical recurrent input). On and Off episode durations in our model follow exponential distributions, similar to the On-Off dynamics observed in single cortical columns (Fig. 1B). We fixed the statistics of On-Off transitions and the global propagation, and studied the dependence of intrinsic timescales on the network spatial structure.

Fig. 1 a Schematic representation of the model's local and non-local connectivity. b Distributions of On-Off episode durations in V4 data and in the model. c Representation of the different timescales in the autocorrelation (AC) of single columns. d Average AC of individual columns and of the population activity (inset, with the same axes) for different network structures. e V4 data AC averaged over all recordings, and an example recording

We found that the timescales of local dynamics reflect the spatial network structure. In the model, activity of single columns exhibits two distinct timescales: one induced by the recurrent excitation within the column and another induced by interactions between the columns (Fig. 1C). The first timescale dominates dynamics in networks with more dispersed connectivity (Fig. 1A, non-local; Fig. 1D), whereas the second timescale is prominent in networks with more local connectivity (Fig. 1A, local; Fig. 1D). Since neighboring columns share many of their recurrent inputs, the second timescale is also evident in cross-correlations (CC) between columns, and it becomes longer with increasing distance between columns.
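The kind of model described above can be sketched in a few lines. The code below is a simplified illustration under stated assumptions, not the authors' implementation: columns sit on a ring, every drive acts independently, and all names and parameter values (`p_ext`, `p_self`, `p_nbr`, `radius`, the target branching ratio) are hypothetical. The branching ratio is approximated as `p_self + 2 * radius * p_nbr`, so widening the connectivity while lowering `p_nbr` keeps it fixed.

```python
import random

def simulate(n_cols, n_steps, p_ext, p_self, p_nbr, radius, rng):
    """Simulate binary On/Off columns on a ring; return the list of states per step."""
    state = [0] * n_cols
    history = []
    for _ in range(n_steps):
        new_state = []
        for i in range(n_cols):
            # A column stays Off only if every independent drive fails.
            p_off = 1.0 - p_ext                      # external input
            if state[i]:
                p_off *= 1.0 - p_self                # vertical (within-column) recurrence
            for d in range(1, radius + 1):
                for j in ((i - d) % n_cols, (i + d) % n_cols):
                    if state[j]:
                        p_off *= 1.0 - p_nbr         # horizontal recurrence
            new_state.append(1 if rng.random() < 1.0 - p_off else 0)
        state = new_state
        history.append(state)
    return history

def autocorrelation(x, max_lag):
    """Normalized autocorrelation of a time series for lags 0..max_lag."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x) / n
    ac = []
    for lag in range(max_lag + 1):
        cov = sum((x[t] - mean) * (x[t + lag] - mean) for t in range(n - lag))
        ac.append(cov / (n - lag) / var)
    return ac

rng = random.Random(1)
branching_ratio = 0.9   # hypothetical target, held fixed across structures
p_self = 0.5            # hypothetical self-excitation probability
ac_by_radius = {}
for radius in (1, 5):   # local versus more dispersed connectivity
    p_nbr = (branching_ratio - p_self) / (2 * radius)
    history = simulate(n_cols=40, n_steps=2000, p_ext=0.01,
                       p_self=p_self, p_nbr=p_nbr, radius=radius, rng=rng)
    column0 = [s[0] for s in history]
    ac_by_radius[radius] = autocorrelation(column0, max_lag=20)
```

Comparing how `ac_by_radius[1]` and `ac_by_radius[5]` decay with lag gives a rough way to explore how connectivity range shapes single-column timescales. Estimating the two timescales themselves would require fitting, for example, a sum of two exponentials to much longer simulations.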

To test the model predictions, we analyzed 16-channel microelectrode array recordings of spiking activity from single columns in the primate area V4. During spontaneous activity, we observed two distinct timescales in columnar On-Off fluctuations (Fig. 1E). Two timescales were also present in CCs of neural activity on different channels within the same column. To examine how timescales depend on horizontal cortical distance, we leveraged the fact that columnar recordings generally exhibit slight horizontal shifts due to variability in the penetration angle. As a surrogate for horizontal displacements between pairs of channels, we used distances between centers of their receptive fields (RF). As predicted by the model, the second timescale in CCs became longer with increasing RF-center distance. Our results suggest that timescales of local On-Off fluctuations in single cortical columns provide information about the underlying spatial network structure of the cortex.

F3 Internal bias controls phasic but not delay-period dopamine activity in a parametric working memory task

Néstor Parga1, Stefania Sarno1, Manuel Beiran2, José Vergara3, Román Rossi-Pool3, Ranulfo Romo3

1Universidad Autónoma Madrid, Madrid, Spain; 2Ecole Normale Supérieure, Department of Cognitive Studies, Paris, France; 3Universidad Nacional Autónoma México, Instituto de Fisiología Celular, México DF, Mexico

Correspondence: Néstor Parga (nestor.parga@uam.es)

BMC Neuroscience 2019, 20(Suppl 1):F3

Dopamine (DA) has been implicated in coding reward prediction errors (RPEs) and in several other phenomena such as working memory and motivation to work for reward. Under uncertain stimulation conditions, DA phasic responses to relevant task cues reflect cortical perceptual decision-making processes, such as the certainty about stimulus detection and evidence accumulation, in a way compatible with the RPE hypothesis [1, 2]. This suggests that the midbrain DA system receives information from cortical circuits about decision formation and transforms it into an RPE signal. However, it is not clear how DA neurons behave when making a decision involves more demanding cognitive features, such as working memory and internal biases, or how they reflect motivation under uncertain conditions. To advance knowledge on these issues we have recorded and analyzed the firing activity of putative midbrain DA neurons while monkeys discriminated the frequencies of two vibrotactile stimuli delivered to one fingertip. This two-interval forced choice task, in which both stimuli were selected randomly in each trial, has been widely used to investigate perception, working memory and decision-making in sensory and frontal areas [3]; the current study adds to this scenario possible roles of midbrain DA neurons.

We found that the DA responses to the stimuli were not monotonically tuned to their frequency values. Instead they were controlled by an internally generated bias (contraction bias). This bias induced a subjective difficulty that modulated those responses as well as the accuracy and the response times (RTs). A Bayesian model for the choice explained the bias and gave a measure of the animal’s decision confidence, which also appeared modulated by the bias. We also found that the DA activity was above baseline throughout the delay (working memory) period. Interestingly, this activity was neither tuned to the first frequency nor controlled by the internal bias. While the phasic responses to the task events could be described by a reinforcement learning model based on belief states, the ramping behavior exhibited during the delay period could not be explained by standard models. Finally, the DA responses to the stimuli in short-RT and long-RT trials were significantly different; interpreting the RTs as a measure of motivation, our analysis indicated that motivation strongly affected the responses to the task events but had only a weak influence on the DA activity during the delay interval. To summarize, our results show for the first time that an internal phenomenon (the bias) can control the DA phasic activity in a way similar to physical differences in external stimuli. We also encountered a ramping DA activity during the working memory period, independent of the memorized frequency value. Overall, our study supports the notion that delay and phasic DA activities accomplish quite different functions.
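How a Bayesian observer can produce a contraction bias and a graded decision confidence can be illustrated with a simple Gaussian sketch. This is an assumption-laden illustration rather than the model used in the study: it assumes a Gaussian prior over the first frequency, Gaussian memory noise, a noiseless second stimulus, and hypothetical parameter values in Hz.

```python
import math

def posterior_f1(obs, prior_mean, prior_sd, memory_sd):
    """Gaussian posterior over the first frequency given a noisy memory trace.
    The posterior mean is pulled from the observation toward the prior mean."""
    w = prior_sd ** 2 / (prior_sd ** 2 + memory_sd ** 2)
    mean = w * obs + (1.0 - w) * prior_mean
    sd = math.sqrt(w) * memory_sd
    return mean, sd

def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def choose(obs_f1, f2, prior_mean, prior_sd, memory_sd):
    """Return (choice, confidence), where confidence is the posterior
    probability that the reported comparison is correct."""
    mean, sd = posterior_f1(obs_f1, prior_mean, prior_sd, memory_sd)
    p_f1_higher = 1.0 - normal_cdf((f2 - mean) / sd)
    if p_f1_higher >= 0.5:
        return "f1>f2", p_f1_higher
    return "f1<f2", 1.0 - p_f1_higher

# Hypothetical parameters: prior centered on 22 Hz, memory noise of 4 Hz.
params = dict(prior_mean=22.0, prior_sd=6.0, memory_sd=4.0)

# Two pairs with the same physical difference (f2 - f1 = 4 Hz).
choice_low, conf_low = choose(obs_f1=14.0, f2=18.0, **params)
choice_high, conf_high = choose(obs_f1=30.0, f2=34.0, **params)
print(choice_low, conf_low, choice_high, conf_high)
```

Because the remembered first frequency is contracted toward the prior mean, the low-frequency pair becomes subjectively harder and the high-frequency pair subjectively easier, although the physical difference is identical. In this sketch, that is the sense in which an internal bias can modulate difficulty and confidence.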

References

- 1. Sarno S, de Lafuente V, Romo R, Parga N. Dopamine reward prediction error signal codes the temporal evaluation of a perceptual decision report. PNAS. 201712479 (2017)

- 2. Lak A, Nomoto K, Keramati M, Sakagami M, Kepecs A. Midbrain dopamine neurons signal belief in choice accuracy during a perceptual decision. Curr Biol 27, 821–832 (2017)

- 3. Romo R, Brody CD, Hernández A, Lemus L. Neuronal correlates of parametric working memory in the prefrontal cortex. Nature 399, 470–473 (1999)

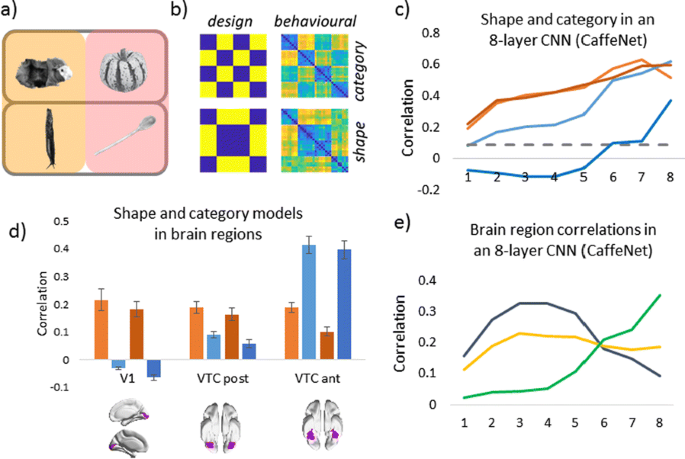

O1 Representations of dissociated shape and category in deep Convolutional Neural Networks and human visual cortex

Astrid Zeman, J Brendan Ritchie, Stefania Bracci, Hans Op de Beeck

KULeuven, Brain and Cognition, Leuven, Belgium

Correspondence: Astrid Zeman (astrid.zeman@kuleuven.be)

BMC Neuroscience 2019, 20(Suppl 1):O1

Deep Convolutional Neural Networks (CNNs) excel at object recognition and classification, with accuracy levels that now exceed those of humans [1]. CNNs also represent clusters of object similarity, such as the animate-inanimate division that is evident in object-selective areas of human visual cortex [2]. CNNs are trained using natural images, in which shape and category information are often highly correlated [3]. Given this potential confound, CNNs may rely upon shape information, rather than category, to classify objects. We investigate this possibility by quantifying the representational correlations of shape and category along the layers of multiple CNNs with human behavioural ratings of these two factors, using two datasets that explicitly orthogonalize shape from category [3, 4] (Fig. 1a, b, c). We analyse shape and category representations along the human ventral pathway areas using fMRI (Fig. 1d) and measure correlations between artificial and biological representations by comparing the output from CNN layers with fMRI activation in ventral areas (Fig. 1e).

Fig. 1 Shape and category models in CNNs vs the brain. a Example stimuli. b Design and behavioral models. c Shape (orange) and category (blue) correlations in CNNs. Behavioral (darker) and design (lighter) models. Only one CNN shown. d Shape (orange) and category (blue) correlations in ventral brain regions. e V1 (blue), posterior (yellow) and anterior (green) VTC correlated with CNN layers

First, we find that CNNs encode object category independently from shape, with category encoding peaking at the final fully connected layer for all network architectures. At the initial layer of all CNNs, shape is represented significantly above chance in the majority of cases (94%), whereas category is not. Category information rises above the significance level only in the final few layers of all networks, reaching a maximum at the final layer after remaining low for the majority of layers. Second, by using fMRI to analyse shape and category representations along the ventral pathway, we find that shape information decreases from early visual cortex (V1) to the anterior portion of ventral temporal cortex (VTC). Conversely, category information increases from V1 to anterior VTC. This two-way interaction is significant for both datasets, demonstrating that the effect is evident for both low-level (orientation dependent) and high-level (low vs high aspect ratio) definitions of shape. Third, comparing CNNs with brain areas, the highest correlation with anterior VTC occurs at the final layer of all networks. V1 correlations reach a maximum prior to fully connected layers, at early, mid or late layers, depending upon network depth. In all CNNs, the order of maximum correlations with neural data corresponds well with the flow of visual information along the visual pathway. Overall, our results suggest that CNNs represent category information independently from shape, similarly to human object recognition processing.
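The representational comparison described above can be sketched with a toy example. The code below is only an illustration under stated assumptions, not the analysis pipeline of the study: it uses four hypothetical stimuli from a crossed shape-by-category design, binary design models, made-up three-unit "layer" activations, Euclidean distance for the dissimilarity matrices, and Pearson correlation for the comparison.

```python
import math
from itertools import combinations

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def rdm(patterns):
    """Upper triangle of the representational dissimilarity matrix,
    using Euclidean distance between activation patterns."""
    return [math.dist(p, q) for p, q in combinations(patterns, 2)]

# Hypothetical stimuli labelled (shape, category), fully crossed.
stimuli = [(0, 0), (0, 1), (1, 0), (1, 1)]

# Binary design models: 1 where two stimuli differ on the factor, else 0.
shape_model = [float(a[0] != b[0]) for a, b in combinations(stimuli, 2)]
category_model = [float(a[1] != b[1]) for a, b in combinations(stimuli, 2)]

# Made-up activations: the "early" layer is driven mostly by shape,
# the "late" layer mostly by category.
layers = {
    "early": [[s, 1 - s, 0.1 * c] for s, c in stimuli],
    "late": [[0.1 * s, c, 1 - c] for s, c in stimuli],
}

scores = {}
for name, patterns in layers.items():
    layer_rdm = rdm(patterns)
    scores[name] = (pearson(layer_rdm, shape_model),
                    pearson(layer_rdm, category_model))
    print(name, scores[name])
```

Each entry of `scores` holds the shape and category correlations for one layer, which is the quantity one would track across layers of a network or across regions of the ventral pathway. The same comparison can be made between a layer's dissimilarity matrix and one computed from fMRI activation patterns.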

References

- 1. He K, Zhang X, Ren S, Sun J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. 2015 IEEE International Conference on Computer Vision (ICCV), Santiago 2015, pp 1026–1034.

- 2. Khaligh-Razavi S-M, Kriegeskorte N. Deep Supervised, but Not Unsupervised, Models May Explain IT Cortical Representation. PLoS Computational Biology 2014, 10(11), e1003915.

- 3. Bracci S, Op de Beeck H. Dissociations and Associations between Shape and Category. J Neurosci 2016, 36(2), 432–444.

- 4. Ritchie JB, Op de Beeck H. Using neural distance to predict reaction time for categorizing the animacy, shape, and abstract properties of objects. BioRxiv 2018. Preprint at: https://doi.org/10.1101/496539
