P29 Learning the receptive field properties of complex cells in V1

Yanbo Lian1, Hamish Meffin2, David Grayden1, Tatiana Kameneva3, Anthony Burkitt1

1University of Melbourne, Department of Biomedical Engineering, Melbourne, Australia; 2University of Melbourne, Department of Optometry and Visual Science, Melbourne, Australia; 3Swinburne University of Technology, Telecommunication Electrical Robotics and Biomedical Engineering, Hawthorn, Australia

Correspondence: Anthony Burkitt (aburkitt@unimelb.edu.au)

BMC Neuroscience 2019, 20(Suppl 1):P29

There are two distinct classes of cells in the visual cortex: simple cells and complex cells. One defining feature of complex cells is their phase invariance, namely that they respond strongly to oriented bar stimuli with a preferred orientation but with a wide range of phases. A classical model of complex cells is the energy model, in which the responses are the sum of the squared outputs of two linear phase-shifted filters. Although the energy model can capture the observed phase invariance of complex cells, a recent study has shown that complex cells are highly diverse and only a subset can be characterized by the energy model [1]. From the perspective of a hierarchical structure, it is still unclear how a complex cell pools input from simple cells, which simple cells should be pooled, and how strong the pooling weights should be. Most existing models overlook many biologically important details, e.g., some models assume a quadratic nonlinearity of the linearly filtered simple cell activity, use pre-determined weights between simple and complex cells, or use artificial learning rules. Hosoya and Hyvärinen [2] applied strong dimension reduction in pooling simple cell receptive fields trained using independent component analysis. Their approach involves pooling simple cells, but the weights connecting simple and complex cells are not learned and thus it is unclear how this can be biophysically implemented.
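The energy model described above can be illustrated with a minimal one-dimensional sketch. The Gabor filter shape, the grating stimulus, and all parameter values (`freq`, `sigma`, the sampling grid) are assumptions chosen for illustration and are not taken from the abstract. The sketch contrasts a half-rectified linear (simple-cell-like) response, which depends strongly on stimulus phase, with the sum of squared outputs of two filters in quadrature, which is nearly phase invariant.

```python
import math

# Sampling grid for the 1-D stimulus (assumed: positions from -4 to 4).
STEP = 0.01
XS = [i * STEP - 4.0 for i in range(801)]


def gabor(x, phase, freq=1.0, sigma=1.0):
    """1-D Gabor filter: Gaussian envelope times a cosine carrier."""
    return math.exp(-x * x / (2.0 * sigma * sigma)) * math.cos(
        2.0 * math.pi * freq * x + phase
    )


def grating(x, phase, freq=1.0):
    """1-D sinusoidal grating with the given spatial phase."""
    return math.cos(2.0 * math.pi * freq * x + phase)


def linear_response(filter_phase, stim_phase):
    """Inner product of a Gabor filter with the grating (Riemann sum)."""
    return STEP * sum(gabor(x, filter_phase) * grating(x, stim_phase) for x in XS)


def simple_cell(stim_phase):
    """Half-rectified output of a single linear filter (phase sensitive)."""
    return max(0.0, linear_response(0.0, stim_phase))


def energy_response(stim_phase):
    """Energy model: sum of squared outputs of two filters 90 degrees apart."""
    even = linear_response(0.0, stim_phase)
    odd = linear_response(-math.pi / 2.0, stim_phase)
    return even ** 2 + odd ** 2


if __name__ == "__main__":
    for k in range(8):
        phi = k * math.pi / 4.0
        print(f"phase={phi:.2f}  simple={simple_cell(phi):.3f}  "
              f"energy={energy_response(phi):.3f}")
```

Printing both responses across stimulus phases shows the simple-cell output swinging between zero and its maximum while the energy response stays almost constant, which is the phase invariance the energy model is meant to capture.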

We propose a biologically plausible learning model for complex cells that pools inputs from simple cells. The model is a 3-layer network with rate-based neurons that describes the activities of LGN cells (layer 1), V1 simple cells (layer 2), and V1 complex cells (layer 3). The first two layers implement a recently proposed simple cell model that is biologically plausible and accounts for many experimental phenomena [3]. The dynamics of the complex cells involves the linear summation of responses of simple cells that are connected to complex cells, taken in our model to be excitatory. Connections between LGN and simple cells are learned based on Hebbian and anti-Hebbian plasticity, similar to that in our previous work [3]. For connections between simple and complex cells that are learned using natural images as input, a modified version of the Bienenstock, Cooper, and Munro (BCM) rule [4] is investigated.
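As a rough illustration of the kind of plasticity referred to above, the following sketch implements the textbook BCM rule with a sliding threshold for one complex cell that linearly sums simple-cell activity. The abstract does not specify its modified rule, so the learning rate `eta`, the threshold rate `tau_theta`, the clipping of weights at zero (to keep connections excitatory), and the toy input patterns are all assumptions.

```python
import random


def bcm_step(w, x, theta, eta=0.01, tau_theta=0.1):
    """One update of the classical BCM rule (not the authors' modified rule).

    w      -- list of non-negative weights from simple cells to one complex cell
    x      -- list of simple-cell firing rates for the current input
    theta  -- sliding modification threshold (running average of y**2)
    """
    # Complex-cell response: linear summation of excitatory simple-cell input.
    y = max(0.0, sum(wi * xi for wi, xi in zip(w, x)))
    # Potentiation when y > theta, depression when y < theta.
    new_w = [max(0.0, wi + eta * xi * y * (y - theta)) for wi, xi in zip(w, x)]
    # The threshold slowly tracks the recent average of the squared response.
    new_theta = theta + tau_theta * (y * y - theta)
    return new_w, new_theta, y


def train(patterns, n_steps=2000, seed=0):
    """Present randomly chosen input patterns and return the learned weights."""
    rng = random.Random(seed)
    w = [0.5 for _ in patterns[0]]
    theta = 0.0
    for _ in range(n_steps):
        w, theta, _ = bcm_step(w, rng.choice(patterns), theta)
    return w


if __name__ == "__main__":
    # Two hypothetical simple-cell activity patterns (illustrative values only).
    print(train([[1.0, 0.2], [0.2, 1.0]]))
```

The sliding threshold is the design choice that distinguishes BCM from plain Hebbian learning: it raises the bar for potentiation when the cell has been highly active, which is what allows competition between input patterns.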

Our results indicate that the learning rule can describe a diversity of individual complex cells, similar to that observed experimentally, that pool inputs from simple cells with similar orientation but differing phases. Preliminary results support the hypothesis that normalized BCM [5] can lead to competition between complex cells, so that they pool inputs from different groups of simple cells. In summary, this study provides a plausible explanation for how complex cells can be learned using biologically plausible plasticity mechanisms.

References

- 1.Almasi A. An investigation of spatial receptive fields of complex cells in the primary visual cortex Doctoral dissertation 2017.

- 2.Hosoya H, Hyvärinen A. Learning visual spatial pooling by strong PCA dimension reduction. Neural Computation 2016 Jul;28(7):1249–64.

- 3.Lian Y, Meffin H, Grayden DB, Kameneva T, Burkitt AN. Towards a biologically plausible model of LGN-V1 pathways based on efficient coding. Frontiers in Neural Circuits 2019;13:13.

- 4.Bienenstock EL, Cooper LN, Munro PW. Theory for the development of neuron selectivity: orientation specificity and binocular interaction in visual cortex. Journal of Neuroscience 1982 Jan 1;2(1):32–48.

- 5.Willmore BD, Bulstrode H, Tolhurst DJ. Contrast normalization contributes to a biologically-plausible model of receptive-field development in primary visual cortex (V1). Vision research 2012 Feb 1;54:49–60.

P30 Bursting mechanisms based on interplay of the Na/K pump and persistent sodium current

Gennady Cymbalyuk1, Christian Erxleben2, Angela Wenning-Erxleben2, Ronald Calabrese2

1Georgia State University, Neuroscience Institute, Atlanta, GA, United States of America; 2Emory University, Department of Biology, Atlanta, GA, United States of America

Correspondence: Gennady Cymbalyuk (gcymbalyuk@gmail.com)

BMC Neuroscience 2019, 20(Suppl 1):P30

Central Pattern Generators produce robust bursting activity to control vital rhythmic functions like breathing and leech heart beating under variable environmental and physiological conditions. Their functional operation under different physiological parameters yields distinct dynamic mechanisms based on the dominance of interactions within different subsets of inward and outward currents. Recent studies provide evidence that the Na+/K+ pump contributes to the dynamics of neurons and is a target of neuromodulation [1, 2]. Recently, we have described a complex interaction of the pump current and h-current that plays a role in the dynamics of rhythmic neurons in the leech heartbeat CPG, where the basic building blocks are half-center oscillators (HCOs): pairs of mutually inhibitory HN interneurons producing alternating bursting activity. In the presence of h-current, application of the H+/Na+ antiporter monensin, which stimulates the pump by diffusively increasing the intracellular Na+ concentration [3], dramatically decreases the period of a leech heartbeat HCO by decreasing both the burst duration (BD) and interburst interval (IBI). If h-current is blocked then monensin decreases BD but lengthens IBI so that there is no net change of period with respect to control. This mechanism shows how each phase of bursting, BD and IBI, can be independently controlled by interaction of the pump and h-currents.

We implemented our model [3] in a hybrid system. We investigated the potential role of the persistent Na+ current (IP), a sodium current that does not inactivate. Our hybrid system allowed us to upregulate or downregulate the Na+/K+ pump and key ionic currents in real-time models and living neurons. We were able to tune the real-time model to support functional-like bursting. We investigated how variation of basic physiological parameters, such as the conductance and voltage of half-activation of IP and the strength of the Na+/K+ pump, affects bursting characteristics in single neurons and HCOs. We show that the interaction of IP and Ipump constitutes a mechanism that is sufficient to support endogenous bursting activity. We show that this mechanism can reinstate a robust bursting regime in HN interneurons recorded intracellularly in ganglion 7. Due to the interaction of IP and Ipump, an increase of the maximal conductance of IP can shorten the burst duration and lengthen the interburst interval. Our data also suggest that the functional alternating bursting regime of the HCO network requires the neurons to be in the parametric vicinity of, or in the state of, endogenous bursting. We investigated the underlying interaction of IP and Ipump in a simple 2D model describing the dynamics of the membrane potential and intracellular Na+ concentration through instantaneous IP and Ipump.

Acknowledgements: Supported by NINDS 1 R01 NS085006 to RLC and 1 R21 NS111355 to RLC and GSC.

References

- 1.Tobin AE, Calabrese R. Myomodulin increases Ih and inhibits the NA/K pump to modulate bursting in leech heart interneurons. Journal of neurophysiology 2005 Dec;94(6):3938–50.

- 2.Picton LD, Nascimento F, Broadhead MJ, Sillar KT, Miles GB. Sodium pumps mediate activity-dependent changes in mammalian motor networks. Journal of Neuroscience 2017 Jan 25;37(4):906–21.

- 3.Kueh D, Barnett W, Cymbalyuk G, Calabrese R. Na(+)/K(+) pump interacts with the h-current to control bursting activity in central pattern generator neurons of leeches. eLife 2016 Sep 2;5:e19322.

P31 Balanced synaptic strength regulates thalamocortical transmission of informative frequency bands

Alberto Mazzoni1, Matteo Saponati2, Jordi Garcia-Ojalvo3, Enrico Cataldo2

1Scuola Superiore Sant’Anna Pisa, The Biorobotics Institute, Pisa, Italy; 2University of Pisa, Department of Physics, Pisa, Italy; 3Universitat Pompeu Fabra, Department of Experimental and Health Sciences, Barcelona, Spain

Correspondence: Alberto Mazzoni (a.mazzoni@santannapisa.it)

BMC Neuroscience 2019, 20(Suppl 1):P31

The thalamus receives information about the external world from the peripheral nervous system and conveys it to the cortex. This is not a passive process: the thalamus gates and selects sensory streams through an interplay with its internal activity, and the inputs from the thalamus, in turn, interact in a non-linear way with the functional architecture of the primary sensory cortex. Here we address the network mechanisms by which the thalamus selectively transmits informative frequency bands to the cortex. In particular, spindle oscillations (about 10 Hz) dominate thalamic activity during sleep but are present in the thalamus also during wake [1, 2], and in the awake state are actively filtered out by thalamocortical transmission [3].

To reproduce and understand the filtering mechanism underlying the lack of thalamocortical transmission of spindle oscillations, we developed an integrated adaptive exponential integrate-and-fire model of the thalamocortical network. The network is composed of 500 neurons for the thalamus and 5000 neurons for the cortex, with 1:1 and 1:4 inhibitory-to-excitatory ratios, respectively. We generated the local field potential (LFP) associated with the two networks to compare our simulations with experimental results [3].
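For readers unfamiliar with the neuron model named above, the following is a minimal single-neuron sketch of the adaptive exponential integrate-and-fire (AdEx) equations, integrated with a forward Euler scheme. It is not the network used in this study: the parameter values are commonly quoted regular-spiking values and are assumptions here, as are the time step, the spike cutoff `v_peak`, and the constant input current.

```python
import math


def simulate_adex(i_ext_pa, t_max_ms=500.0, dt_ms=0.05):
    """Simulate one AdEx neuron driven by a constant current (in pA).

    Units: pF, nS, mV, ms, pA. All parameter values are illustrative
    assumptions, not values taken from the abstract.
    Returns the list of spike times in ms.
    """
    c_m = 281.0       # membrane capacitance (pF)
    g_l = 30.0        # leak conductance (nS)
    e_l = -70.6       # leak reversal potential (mV)
    v_t = -50.4       # exponential threshold (mV)
    delta_t = 2.0     # slope factor (mV)
    tau_w = 144.0     # adaptation time constant (ms)
    a = 4.0           # subthreshold adaptation (nS)
    b = 80.5          # spike-triggered adaptation increment (pA)
    v_reset = -70.6   # reset potential (mV)
    v_peak = 0.0      # numerical spike cutoff (mV)

    v, w = e_l, 0.0
    spikes = []
    for step in range(int(t_max_ms / dt_ms)):
        exp_term = g_l * delta_t * math.exp((v - v_t) / delta_t)
        dv = (-g_l * (v - e_l) + exp_term - w + i_ext_pa) / c_m
        dw = (a * (v - e_l) - w) / tau_w
        v += dt_ms * dv
        w += dt_ms * dw
        if v >= v_peak:
            # Spike: record the time, reset the voltage, increment adaptation.
            spikes.append(step * dt_ms)
            v = v_reset
            w += b
    return spikes


if __name__ == "__main__":
    print(len(simulate_adex(1000.0)), "spikes with 1000 pA input")
    print(len(simulate_adex(0.0)), "spikes with no input")
```

The adaptation variable `w` is what lets this family of models reproduce bursting and spike-frequency adaptation, which is one reason it is a common choice for thalamic and cortical network simulations.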

We observe, in agreement with experimental data, both delta and theta oscillations in the cortex. While the cortical delta band is phase locked to the thalamic delta band [4], even when we take into account the presence of strong colored cortical noise, the cortical theta fluctuations are not entrained by thalamocortical spindles. Our simulations show that the spindle LFP oscillations observed in experimental recordings are far more pronounced in reticular cells than in thalamocortical relays, thus reducing their potential impact on the cortex. More interestingly, we found that the resonance dynamics in the cortical gamma band, generated by the fast interplay between excitation and inhibition, selectively dampen frequencies in the range of spindle oscillations. Finally, by parametrically varying the properties of thalamocortical connections, we found that the transmission of informative frequency bands depends on the balance of the strength of thalamocortical connections toward excitatory and inhibitory neurons in the cortex, consistent with experimental results [5]. Our results pave the way toward an integrated view of the processing of sensory streams from the peripheral nervous system to the cortex, and toward in silico design of thalamic neural stimulation.

References

- 1.Krishnan GP, Chauvette S, Shamiee I et al. Cellular and neurochemical basis of sleep stages in the thalamocortical network, eLife 2016, e18607.

- 2.Barardi A, Garcia-Ojalvo J, Mazzoni A. Transition between Functional Regimes in an Integrate-And-Fire Network Model of the Thalamus. PLoS One 2016, e0161934.

- 3.Bastos AM, Briggs F, Alitto HJ, et al. Simultaneous Recordings from the Primary Visual Cortex and Lateral Geniculate Nucleus Reveal Rhythmic Interactions and a Cortical Source for Gamma-Band Oscillations. Journal of Neuroscience 2014, 7639–7644.

- 4.Lewis LD, Voigts J, Flores FJ, et al. Thalamic reticular nucleus induces fast and local modulation of arousal state. eLife 2015, e08760.

- 5.Sedigh-Sarvestani M, Vigeland L, Fernandez-Lamo I, et al. Intracellular, in vivo, dynamics of thalamocortical synapses in visual cortex. Journal of Neuroscience 2017, 5250–5262.

P32 Modeling gephyrin dependent synaptic transmission pathways to understand how gephyrin regulates GABAergic synaptic transmission

Carmen Alina Lupascu1, Michele Migliore1, Annunziato Morabito2, Federica Ruggeri2, Chiara Parisi2, Domenico Pimpinella2, Rocco Pizzarelli2, Giovanni Meli2, Silvia Marinelli2, Enrico Cherubini2, Antonino Cattaneo2

1Institute of Biophysics, National Research Council, Italy; 2European Brain Research Institute (EBRI), Rome, Italy

Correspondence: Carmen Alina Lupascu (carmen.lupascu@pa.ibf.cnr.it)

BMC Neuroscience 2019, 20(Suppl 1):P32

At inhibitory synapses, GABAergic signaling controls dendritic integration, neural excitability, circuit reorganization and fine tuning of network activity. Among different players, the tubulin-binding protein gephyrin plays a key role in anchoring GABAA receptors to synaptic membranes.

Owing to these properties, gephyrin is instrumental in establishing and maintaining the proper excitatory (E)/inhibitory (I) balance necessary for the correct functioning of neuronal networks. A disruption of the E/I balance is thought to be at the origin of several neuropsychiatric disorders, including epilepsy, schizophrenia, and autism.

In previous studies, the functional role of gephyrin in GABAergic signaling has been studied at the post-translational level, using recombinant gephyrin-specific single chain antibody fragments (scFv-gephyrin) containing a nuclear localization signal able to remove endogenous gephyrin from GABAA receptor clusters, retargeting it to the nucleus [2]. The reduced accumulation of gephyrin at synapses led to a significant reduction in amplitude and frequency of spontaneous and miniature inhibitory postsynaptic currents (sIPSCs and mIPSCs). This reduction is associated with a decrease in VGAT (the vesicular GABA transporter) and in neuroligin 2 (NLG2), a protein that ensures the cross-talk between the post- and presynaptic sites. Over-expressing NLG2 in gephyrin-deprived neurons rescued GABAergic but not glutamatergic innervation, suggesting that the observed changes in the latter were not due to a homeostatic compensatory mechanism. These results suggest a key role of gephyrin in regulating trans-synaptic signaling at inhibitory synapses.

Here, the effects of two different intrabodies against gephyrin have been tested on spontaneous and miniature GABAA-mediated events obtained from cultured hippocampal and cortical neurons. Experimental findings have been used to develop a computational model describing the key role of gephyrin in regulating trans-synaptic signaling at inhibitory synapses. This represents a further application of a general procedure to study subcellular models of trans-synaptic signaling at inhibitory synapses [1]. In this poster we will discuss the statistically significant differences found between the model parameters under control and gephyrin block conditions. All computational procedures were carried out using an integrated NEURON and Python parallel code on different systems (JURECA machines, Jülich, Germany; MARCONI machine, Cineca, Italy; and Neuroscience Gateway, San Diego, USA). The model can be downloaded from the model catalog available on the Collaboratory Portal of the Human Brain Project (HBP) (https://collab.humanbrainproject.eu/#/collab/1655/nav/75901?state=model.9f89bbcd-e045-4f1c-97e9-3da5847356c2). The Jupyter notebooks used to configure and run the jobs on the HPC machines can be accessed from the Brain Simulation Platform of the HBP (https://collab.humanbrainproject.eu/#/collab/1655/nav/66850).

References

- 1.Lupascu CA, Morabito A, Merenda E, et al. A General Procedure to Study Subcellular Models of Transsynaptic Signaling at Inhibitory Synapses. Frontiers in Neuroinformatics 2016;10:23.

- 2.Marchionni I, Kasap Z, Mozrzymas JW, Sieghart W, Cherubini E, Zacchi P. New insights on the role of gephyrin in regulating both phasic and tonic GABAergic inhibition in rat hippocampal neurons in culture. Neuroscience 2009;164:552–562.

P33 Proprioceptive feedback effects muscle synergy recruitment during an isometric knee extension task

Hugh Osborne1, Gareth York2, Piyanee Sriya2, Marc de Kamps3, Samit Chakrabarty2

1University of Leeds, Institute for Artificial and Biological Computation, School of Computing, United Kingdom; 2University of Leeds, School of Biomedical Sciences, Faculty of Biological Sciences, United Kingdom; 3University of Leeds, School of Computing, Leeds, United Kingdom

Correspondence: Hugh Osborne (sc16ho@leeds.ac.uk)

BMC Neuroscience 2019, 20(Suppl 1):P33

The muscle synergy hypothesis of motor control posits that simple common patterns of muscle behaviour are combined to produce complex limb movements. How proprioception influences this process is not clear. EMG recordings were taken of the upper leg muscles during an isometric knee extension task (n = 17; 9 male, 8 female). The internal knee angle was held at 0°, 20°, 60° or 90°. Non-negative matrix factorisation (NMF) was performed on the EMG traces and two synergy patterns were identified, accounting for over 90% of the variation across participants. The first synergy indicated the expected increase in activity across all muscles, which was also visible in the raw EMG. The second synergy showed a significant difference between coefficients of the knee flexors and extensors, highlighting their agonist/antagonist relationship. As the leg was straightened, the flexor-extensor difference in the second synergy became more pronounced, indicating a change in passive insufficiency of the hamstring muscles. Changing hip position and reducing the level of passive insufficiency resulted in delayed onset of the second synergy pattern. An additional observation of bias in the Rectus Femoris and Semitendinosus coefficients of the second synergy was made, perhaps indicating the biarticular behaviour of these muscles.
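The NMF step described above can be sketched with the standard multiplicative update rules for the squared-error objective. This is a generic illustration, not the authors' analysis pipeline: the toy matrix `V` (five hypothetical muscles by twenty samples), the rank, the iteration count, and the variance-accounted-for measure `vaf` are all assumptions introduced here.

```python
import random


def matmul(a, b):
    """Plain-Python matrix product of two lists of lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def nmf(v, rank, n_iter=200, seed=0, eps=1e-9):
    """Factorise non-negative v (m x n) as w (m x rank) times h (rank x n)."""
    rng = random.Random(seed)
    m, n = len(v), len(v[0])
    w = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(m)]
    h = [[rng.random() + 0.1 for _ in range(n)] for _ in range(rank)]
    for _ in range(n_iter):
        # Update activations h, holding synergy vectors w fixed.
        wt = transpose(w)
        num, den = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[r][j] * num[r][j] / (den[r][j] + eps) for j in range(n)]
             for r in range(rank)]
        # Update synergy vectors w, holding activations h fixed.
        ht = transpose(h)
        num, den = matmul(v, ht), matmul(w, matmul(h, ht))
        w = [[w[i][r] * num[i][r] / (den[i][r] + eps) for r in range(rank)]
             for i in range(m)]
    return w, h


def vaf(v, w, h):
    """Variance accounted for: 1 - (squared residual / squared signal)."""
    wh = matmul(w, h)
    sse = sum((v[i][j] - wh[i][j]) ** 2
              for i in range(len(v)) for j in range(len(v[0])))
    sst = sum(x * x for row in v for x in row)
    return 1.0 - sse / sst


# Toy "EMG envelope" data built from two hypothetical synergies.
W_TRUE = [[1.0, 0.0], [0.8, 0.2], [0.5, 0.5], [0.2, 0.8], [0.0, 1.0]]
H_TRUE = [[j / 19.0 for j in range(20)],
          [(1.0 - j / 19.0) ** 2 for j in range(20)]]
V = matmul(W_TRUE, H_TRUE)

if __name__ == "__main__":
    w_est, h_est = nmf(V, rank=2)
    print("VAF:", round(vaf(V, w_est, h_est), 4))
```

In this picture each column of `w` is a synergy vector (one coefficient per muscle) and each row of `h` is its activation over time; the number of synergies is usually chosen as the smallest rank that passes a variance threshold such as the 90% figure quoted above.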

Having demonstrated that static proprioceptive feedback influences muscle synergy recruitment we then reproduced this pattern of activity in a neural population model. We used the MIIND neural simulation platform to build a network of populations of motor neurons and spinal interneurons with a simple Integrate and Fire neuron model. MIIND provides an intuitive system for developing such networks and simulating with an appropriate and well-defined amount of noise. The simulator can handle large, quick changes in activity with plausible postsynaptic potentials. Two mutually inhibiting populations of both excitatory and inhibitory interneurons were connected to five motor neuron populations, each with a balanced descending input. A single excitatory input to the extensor interneuron pool was used to indicate the level of afferent activity due to the static knee angle. By applying the same NMF step to the activity of the motor neuron populations, the same muscle synergies were observed, with increasing levels of afferent activity resulting in changes to agonist/antagonist recruitment. When the trend in afferent activity is taken further such that it is introduced to the flexor interneuron population, extensor synergy coefficients and vectors increase, leaving the flexor coefficients at zero. This shift from afferent feedback in the agonists to antagonists is predicted by the model but has yet to be confirmed with joint angles beyond 90 degrees.

With the introduction of excitatory connections from the flexor interneuron pool to the Rectus Femoris motor neuron population, the biarticular synergy association, which is proportional to the knee angle, was also reproduced in the model. Even with this addition, there is no need to provide a cortical bias to any individual motor neuron population. The synergies arise naturally from the connectivity of the network and afferent input. This suggests muscle synergies could be generated at the level of spinal interneurons wherein proprioceptive feedback is directly integrated into motor control.

P34 Strategies of dragonfly interception

Frances Chance

Sandia National Laboratories, Department of Cognitive and Emerging Computing, Albuquerque, NM, United States of America

Correspondence: Frances Chance (fschanc@sandia.gov)

BMC Neuroscience 2019, 20(Suppl 1):P34

Interception of a target (e.g. a human catching a ball or an animal catching prey) is a common problem solved by many animals. However, the underlying strategies used by animals are poorly understood. For example, dragonflies are widely recognized as highly successful hunters, with reports of up to 97% success rates [1], yet a full description of their interception strategy, whether it be to head directly at the target (a strategy commonly referred to as pursuit) or instead to maintain a constant bearing angle relative to the target (sometimes referred to as proportional or parallel navigation), has yet to be fully developed (see [2]). While parallel navigation is the logical strategy for calculating the shortest time-to-intercept, we find that there are certain conditions (for example, if the prey is capable of relatively quick maneuvers) in which parallel navigation is not the optimal strategy for success. Moreover, recent work [2] observed that dragonflies only adopt a parallel-navigation strategy for a short period of time shortly before prey capture. We propose that alternate strategies, hybrids between pursuit and parallel navigation, lead to more successful interception, and describe what constraints (e.g. prey maneuvering) determine which interception strategy is optimal for the dragonfly. Moreover, we compare the dragonfly interception strategy to those that might be employed by other animals, for example other predatory insects that may not be capable of flying speeds similar to those of the dragonfly. Finally, we discuss neural circuit mechanisms by which interception strategy, as well as intercept maneuvers, may be calculated based on prey-image slippage on the dragonfly retina.
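The difference between the two strategies named above can be made concrete with a small two-dimensional simulation. This is an illustrative sketch only: the speeds, starting positions, capture radius, and the non-maneuvering target are assumptions, and the "parallel" rule here is an idealized constant-bearing controller with perfect knowledge of the target velocity, not a model of dragonfly steering.

```python
import math


def simulate(strategy, pursuer_speed=2.0, target_pos=(10.0, 0.0),
             target_vel=(0.0, 1.0), dt=0.001, capture_radius=0.05, t_max=60.0):
    """Return the time to capture a constant-velocity target, or None.

    strategy -- "pursuit": always head straight at the target.
                "parallel": match the target's velocity component
                perpendicular to the line of sight, so that the bearing
                stays constant, and use the remaining speed to close range.
    """
    px, py = 0.0, 0.0
    tx, ty = target_pos
    vx, vy = target_vel
    t = 0.0
    while t < t_max:
        dx, dy = tx - px, ty - py
        dist = math.hypot(dx, dy)
        if dist <= capture_radius:
            return t
        ux, uy = dx / dist, dy / dist  # unit vector along the line of sight
        if strategy == "pursuit":
            hx, hy = pursuer_speed * ux, pursuer_speed * uy
        elif strategy == "parallel":
            along = vx * ux + vy * uy
            perp_x, perp_y = vx - along * ux, vy - along * uy
            closing_sq = pursuer_speed ** 2 - (perp_x ** 2 + perp_y ** 2)
            if closing_sq <= 0.0:
                return None  # pursuer too slow to hold the bearing
            closing = math.sqrt(closing_sq)
            hx, hy = perp_x + closing * ux, perp_y + closing * uy
        else:
            raise ValueError(f"unknown strategy: {strategy}")
        px += hx * dt
        py += hy * dt
        tx += vx * dt
        ty += vy * dt
        t += dt
    return None


if __name__ == "__main__":
    print("pursuit :", simulate("pursuit"))
    print("parallel:", simulate("parallel"))
```

For a target that does not maneuver, the constant-bearing rule flies a straight line to the meeting point and therefore captures sooner than pure pursuit. Replacing the constant `target_vel` with a time-varying one is a simple way to explore the maneuvering-prey conditions discussed above.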

This paper describes objective technical results and analysis. Any subjective views or opinions that might be expressed in the paper do not necessarily represent the views of the U.S. Department of Energy or the United States Government.

Acknowledgements: Sandia National Laboratories is a multimission laboratory managed and operated by National Technology & Engineering Solutions of Sandia, LLC, a wholly owned subsidiary of Honeywell International Inc., for the U.S. Department of Energy’s National Nuclear Security Administration under contract DE-NA0003525. SAND2019-2782A

References

- 1.Olberg RM, Worthington AH, Venator KR. Prey pursuit and interception in dragonflies. Journal of Comparative Physiology A 2000 Feb 1;186(2):155–62.

- 2.Mischiati M, Lin HT, Herold P, Imler E, Olberg R, Leonardo A. Internal models direct dragonfly interception steering. Nature 2015, 517: 333–338.

P35 The bump attractor model predicts spatial working memory impairment from changes to pyramidal neurons in the aging rhesus monkey dlPFC

Sara Ibanez Solas1, Jennifer Luebke2, Christina Weaver1, Wayne Chang2

1Franklin and Marshall College, Department of Mathematics and Computer Science, Lancaster, PA, United States of America; 2Boston University School of Medicine, Department of Anatomy and Neurobiology, Boston, MA, United States of America

Correspondence: Sara Ibanez Solas (sibanezs@fandm.edu)

BMC Neuroscience 2019, 20(Suppl 1):P35

Behavioral studies have shown impairment in performance during spatial working memory (WM) tasks with aging in several animal species, including humans. Persistent activity (PA) during delay periods of spatial WM tasks is thought to be the main mechanism underlying spatial WM, since the selective firing of pyramidal neurons in the dorsolateral prefrontal cortex (dlPFC) to different spatial locations seems to encode the memory of the stimulus. This firing activity is generated by recurrent connections between layer 3 pyramidal neurons in the dlPFC, which, as many in vitro studies have shown, undergo significant structural and functional changes with aging. However, the extent to which these changes affect the neural mechanisms underlying spatial WM, and thus cognition, is not known. Here we present the first empirical evidence that spatial WM in the rhesus monkey is impaired in some middle-aged subjects, and show that spatial WM performance is negatively correlated with hyperexcitability (increased action potential firing rates) of layer 3 pyramidal neurons. We used the bump attractor network model to explore the effects on spatial WM of two age-related changes to the properties of individual pyramidal neurons: the increased excitability observed here and previously [1, 2], and a 10–30% loss of both excitatory and inhibitory synapses in middle-aged and aged monkeys [3]. In particular, we simulated the widely used (Oculomotor) Delayed Response Task (DRT) and introduced a simplified model of the Delayed Recognition Span Task-spatial condition (DRST-s), which was administered to the monkeys in this study. The DRST-s task is much more complex than the DRT, requiring simultaneous encoding of multiple stimuli which successively increase in number. Simulations predicted that PA, and in turn WM performance, in both tasks was severely impaired by the increased excitability of individual neurons, but not by the loss of synapses alone. This is consistent with the finding in [3], where no correlations were seen between synapse loss and DRST-s impairment. Simulations also showed that pyramidal neuron hyperexcitability and synapse loss might partially compensate for each other: the level of impairment in the DRST-s model with these simultaneous changes was similar to that seen in the DRST-s data from young vs. aged monkeys. The models also predict an age-related reduction in total synaptic input current to pyramidal neurons alongside changes to their f-I curves, showing that the increased excitability of pyramidal neurons we have seen in vitro is consistent with lower firing rates seen during DRT testing of middle-aged and aged monkeys in vivo [4]. Finally, in addition to PA, this study suggests that short-term synaptic facilitation plays an important (if often unappreciated) role in spatial WM.

Acknowledgments: We thank the National Institutes of Health (National Institute on Aging) for supporting the authors with grant number R01AG059028.

References

- 1.Chang YM, Rosene DL, Killiany RJ, Mangiamele LA, Luebke JI. Increased action potential firing rates of layer 2/3 pyramidal cells in the prefrontal cortex are significantly related to cognitive performance in aged monkeys. Cerebral Cortex 2004 Aug 5;15(4):409–18.

- 2.Coskren PJ, Luebke JI, Kabaso D, et al. Functional consequences of age-related morphologic changes to pyramidal neurons of the rhesus monkey prefrontal cortex. Journal of computational neuroscience 2015 Apr 1;38(2):263–83.

- 3.Peters A, Sethares C, Luebke JI. Synapses are lost during aging in the primate prefrontal cortex. Neuroscience 2008 Apr 9;152(4):970–81.

- 4.Wang M, Gamo NJ, Yang Y, et al. Neuronal basis of age-related working memory decline. Nature 2011 Aug;476(7359):210.

P36 Brain dynamic functional connectivity: lesson from temporal derivatives and autocorrelations

Jeremi Ochab1, Wojciech Tarnowski1, Maciej Nowak1,2, Dante Chialvo3

1Jagiellonian University, Institute of Physics, Kraków, Poland; 2Mark Kac Complex Systems Research Center, Kraków, Poland; 3Universidad Nacional de San Martín and CONICET, Center for Complex Systems & Brain Sciences (CEMSC^3), Buenos Aires, Argentina

Correspondence: Jeremi Ochab (jeremi.ochab@uj.edu.pl)

BMC Neuroscience 2019, 20(Suppl 1):P36

The study of correlations between brain regions in functional magnetic resonance imaging (fMRI) is an important chapter of the analysis of large-scale brain spatiotemporal dynamics. The burst of research exploring momentary patterns of blood oxygen level-dependent (BOLD) coactivations, referred to as dynamic functional connectivity, has brought prospects of novel insights into brain function and dysfunction. It has been, however, closely followed by inquiries into pitfalls the new methods hold [1], and only recently by their systematic evaluation [2].

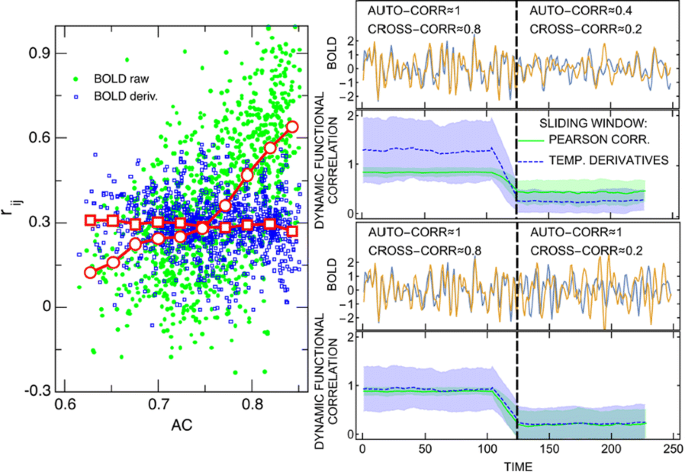

From among such recent measures, we scrutinize a metric dubbed “Multiplication of Temporal Derivatives” (MTD) [3], which is based on the temporal derivative of each time series. We compare it with the sliding window Pearson correlation of the raw time series in several stationary and non-stationary set-ups, including: simulated autoregressive models with a step change in their coupling, surrogate data [4] with realistic spectral and covariance properties and a step change in their cross- and autocovariance (see Fig. 1, right panels), and a realistic stationary network detection (with the use of gold standard simulated data [5]).

Fig. 1 (Left) Cross-correlation of pairs of blood oxygen level-dependent (BOLD) signals and their derivatives versus their common autocorrelation; red markers show binned averages. (Right) A simulated step change in cross- and/or autocorrelations and the effect it has on dynamic functional correlation measures (Pearson sliding window and “multiplication of temporal derivatives”).

The formal comparison of the MTD formula with the Pearson correlation of the derivatives reveals only minor differences, which we find negligible in practice. The numerical comparison reveals lower sensitivity of derivatives to low frequency drifts and to autocorrelations but also lower signal-to-noise ratio. It does not indicate any evident mathematical advantages of the MTD metric over commonly used correlation methods.
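The relationship between the two measures can be made concrete with a short sketch. The MTD definition used here (first differences, their pairwise product divided by the product of the whole-series standard deviations, then a moving average) follows the usual description of the metric; the function names, the window length, and the random test series are assumptions introduced for illustration.

```python
import math
import random


def diff(x):
    """First-order temporal derivative (successive differences)."""
    return [b - a for a, b in zip(x, x[1:])]


def mean(x):
    return sum(x) / len(x)


def std(x):
    m = mean(x)
    return math.sqrt(sum((v - m) ** 2 for v in x) / len(x))


def mtd(x, y, window):
    """Multiplication of temporal derivatives, smoothed by a moving average."""
    dx, dy = diff(x), diff(y)
    scale = std(dx) * std(dy)  # normalisation uses the whole series
    prod = [a * b / scale for a, b in zip(dx, dy)]
    return [mean(prod[i:i + window]) for i in range(len(prod) - window + 1)]


def pearson(x, y):
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / math.sqrt(sum((a - mx) ** 2 for a in x)
                           * sum((b - my) ** 2 for b in y))


def sliding_pearson(x, y, window):
    """Pearson correlation recomputed inside each sliding window."""
    return [pearson(x[i:i + window], y[i:i + window])
            for i in range(len(x) - window + 1)]


if __name__ == "__main__":
    rng = random.Random(0)
    x = [rng.gauss(0.0, 1.0) for _ in range(200)]
    y = [0.7 * a + 0.3 * rng.gauss(0.0, 1.0) for a in x]
    print(mtd(x, y, 20)[:3])
    print(sliding_pearson(diff(x), diff(y), 20)[:3])
```

Written this way, the two differences are visible in the code: MTD normalises by whole-series rather than within-window standard deviations, and it does not subtract the within-window mean of the derivatives.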

Along the way we discover that cross-correlations between fMRI time series of brain regions are tied to their autocorrelations (see Fig. 1, left panel). We solve simple autoregressive models to provide mathematical grounds for that behaviour. This observation is relevant to the occurrence of false positives in real networks and might be an unexpected consequence of current preprocessing techniques. This fact remains troubling, since similar autocorrelations of any two brain regions do not necessarily result from their actual structural connectivity or functional correlation.

The study has been recently published [6].

Acknowledgements: Work supported by the National Science Centre (Poland) grant DEC-2015/17/D/ST2/03492 (JKO), Polish Ministry of Science and Higher Education “Diamond Grant” 0225/DIA/2015/44 (WT), and by CONICET (Argentina) and Escuela de Ciencia y Tecnología, UNSAM (DRC).

References

- 1.Hindriks R, Adhikari MH, Murayama Y et al. Can sliding-window correlations reveal dynamic functional connectivity in resting-state fMRI? Neuroimage 2016, 127, 242–256.

- 2.Thompson WH, Richter CG, Plavén-Sigray P, Fransson P. Simulations to benchmark time-varying connectivity methods for fMRI. PLoS Computational Biology 2018, 14, e1006196.

- 3.Shine JM, Koyejo O, Bell PT, et al. Estimation of dynamic functional connectivity using multiplication of temporal derivatives. Neuroimage 2015, 122, 399–407.

- 4.Laumann TO, Snyder AZ, Mitra A. et al. On the stability of BOLD fMRI correlations. Cereb Cortex 2017, 27, 4719–4732.

- 5.Smith SM, Miller KL, Salimi-Khorshidi G. et al. Network modelling methods for FMRI. Neuroimage 2011, 54, 875–891.

- 6.Ochab JK, Tarnowski W, Nowak MA, Chialvo DR. On the pros and cons of using temporal derivatives to assess brain functional connectivity. Neuroimage 2019, 184, 577–585.

P37 nigeLab: a fully featured open source neurophysiological data analysis toolbox

Federico Barban1, Maxwell D. Murphy2, Stefano Buccelli1, Michela Chiappalone1

1Fondazione Istituto Italiano di Tecnologia, Rehab Technologies, IIT-INAIL Lab, Genova, Italy; 2University of Kansas Medical Center, Department of Physical Medicine and Rehabilitation, Kansas City, United States of America

Correspondence: Federico Barban (federico.barban@iit.it)

BMC Neuroscience 2019, 20(Suppl 1):P37

The rapid advance in neuroscience research and the related technological improvements have led to an exponential increase in the ability to collect high-density neurophysiological signals from extracellular field potentials generated by neurons. While the specific processing of these signals is dependent upon the nature of the system under consideration, many studies seek to relate these signals to behavioral or sensory stimuli and typically follow a similar workflow. In this context we felt the need for a tool that facilitates tracking and organizing data across experiments and experimental groups during the processing steps. Moreover, we sought to unify different resources into a single hub that could offer standardization and interoperability between different platforms, boosting productivity and fostering the open exchange of experimental data between collaborating groups.

To achieve this, we built an end-to-end signal analysis package based on MATLAB, with a strong focus on collaboration, organization and data sharing. Inspired by the FAIR data policy [1], we propose a hierarchical data organization with copious amounts of metadata, to help keep everything organized, easily shareable and traceable. The result is the neuroscience integrated general electrophysiology lab, or nigeLab, a unified package for tracking and analyzing electrophysiological and behavioral endpoints in neuroscientific experiments. The pipeline offers data extraction to a standard hierarchical format, filtering algorithms with local field potential (LFP) extraction, spike detection and spike sorting, point process analysis, frequency content analysis, graph theory and connectivity analysis in both the spike and LFP domains, as well as many data visualization tools and interfaces.

The source code is freely available and developed to be easily expandable and adaptable to different setups and paradigms. Importantly, nigeLab focuses on ease-of-use through an intuitive interface. We aimed to design an easily deployable toolkit for scientists with a non-technical background, while still offering powerful tools for electrophysiological pre-processing, analysis, and metadata tracking. The whole pipeline is lightweight and optimized to be scalable and parallelizable and can be run on a laptop as well as on a cluster.

Reference

- 1.European Commission. Guidelines on FAIR Data Management in Horizon 2020.

P38 Neural ensemble circuits with adaptive resonance frequency

Alejandro Tabas1, Shih-Cheng Chien2

1Max Planck Institute for Human Cognitive and Brain Sciences, Research Group in Neural Mechanisms of Human Communication, Leipzig, Germany; 2Max Planck Institute for Human Cognitive and Brain Sciences, Leipzig, Germany

Correspondence: Alejandro Tabas (alextabas@gmail.com)

BMC Neuroscience 2019, 20(Suppl 1):P38

Frequency modulation (FM) is a ubiquitous phenomenon in sensory processing and cortical communication. Although multiple neural mechanisms are known to operate at cortical and subcortical levels of the auditory hierarchy to encode fast FM, the neural encoding of low-rate FM is still poorly understood. In this work, we introduce a potential neural mechanism for low-rate FM selectivity based on a simplified model of a cortical microcolumn following Wilson-Cowan dynamics.

Previous studies have used Wilson-Cowan microcircuits with one excitatory and one inhibitory population to build a system responding selectively to certain rhythms [1]. The excitatory ensemble is connected to the circuit’s input, which usually consists of a sinusoid or a similarly periodic signal. The system incorporates synaptic depression through adaptation variables that reduce the effective connectivity weights between the neural populations [2]. By carefully tuning the system parameters, May and Tiitinen showed that this system resonates within a narrow range of frequencies of the oscillatory input, effectively acting as a periodicity detector [1].

Here, we provide an approximate analytical expression for the resonance frequency of the system as a function of the system parameters. First, we subdivide the Wilson-Cowan dynamics into two dynamical systems operating at two different temporal scales: the fast system, which operates at the timescale of the cell membrane time constants (tau ~ 10-20 ms [3]), and the slow system, which operates at the timescale of the adaptation time constant (tau = 500 ms [2]). On the timescale of the fast system, the adaptation dynamics are quasistatic and the connectivity weights can be regarded as locally constant. Under these conditions, we show that the Wilson-Cowan microcircuit behaves as a driven damped harmonic oscillator whose damping factor and resonant frequency depend on the connectivity weights between the populations. We validate the analytical predictions with numerical simulations of the non-approximated system with different sinusoidal inputs and show that our analytical predictions explain the previous results from May and Tiitinen [1].
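
The mapping onto a driven damped harmonic oscillator can be illustrated with a minimal sketch. It is not the expression derived in this work: it assumes a Wilson-Cowan pair linearized around a fixed point with unit gain and a shared time constant, and all weights and function names below are hypothetical. For any such two-dimensional linear system, the trace of the Jacobian sets the damping and its determinant sets the squared natural frequency.

```python
import numpy as np

def linearized_jacobian(w_ee, w_ei, w_ie, w_ii, tau):
    """Jacobian of tau*dE/dt = -E + w_ee*E - w_ei*I + input,
    tau*dI/dt = -I + w_ie*E - w_ii*I (unit-gain linearization, assumed)."""
    return np.array([[w_ee - 1.0, -w_ei],
                     [w_ie, -(1.0 + w_ii)]]) / tau

def oscillator_parameters(jac):
    """Map the system onto x'' + 2*gamma*x' + omega0**2 * x = forcing."""
    gamma = -0.5 * np.trace(jac)
    omega0 = np.sqrt(np.linalg.det(jac))
    return gamma, omega0

def excitatory_gain(jac, freqs_hz, tau):
    """Steady-state amplitude of E for a unit sinusoidal input to E."""
    b = np.array([1.0 / tau, 0.0])
    gains = []
    for f in freqs_hz:
        s = 2j * np.pi * f
        response = np.linalg.solve(s * np.eye(2) - jac, b)
        gains.append(abs(response[0]))
    return np.array(gains)

tau = 0.010  # 10 ms membrane time constant, within the range quoted above
jac = linearized_jacobian(w_ee=1.5, w_ei=2.0, w_ie=2.0, w_ii=0.0, tau=tau)
gamma, omega0 = oscillator_parameters(jac)
natural_hz = omega0 / (2 * np.pi)

# Scan input frequencies and locate the resonance peak numerically.
freqs_hz = np.linspace(1.0, 100.0, 9901)
peak_hz = freqs_hz[np.argmax(excitatory_gain(jac, freqs_hz, tau))]
print(f"damping {gamma:.1f} 1/s, natural frequency {natural_hz:.2f} Hz, "
      f"gain peak at {peak_hz:.2f} Hz")
```

With light damping the numerically located gain peak lies close to the natural frequency, which is the sense in which the connectivity weights set the resonance in this simplified picture.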

On the timescale of the slow system, fast oscillations in the firing rates of the excitatory and inhibitory populations are smoothed out by the effective low-pass filtering exerted by the much slower adaptation dynamics. Under these conditions, the connectivity weights decay slowly at a constant rate that depends on the average firing rates of the neural populations and the adaptation strengths. However, since the nominal resonance frequency depends on the connectivity weights, the decay of the latter results in a modulation of the former. We exploit this property to build a series of architectures that potentially show direction selectivity to rising or falling frequency-modulated sinusoids. Our analytical predictions are validated by numerical simulations of the non-approximated system, driven by frequency-modulated sinusoidal inputs.
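
How a slow decay of the weights translates into a drifting resonance can be sketched as follows. This is an illustration under stated assumptions rather than the model used here: the circuit is a unit-gain linearization of an excitatory-inhibitory pair, all weights are hypothetical, and depression is assumed to scale every weight by the same factor, falling linearly from 100% to 60% over 2 s.

```python
import numpy as np

def natural_frequency_hz(scale, w_ee=1.5, w_ei=2.0, w_ie=2.0, tau=0.010):
    """Natural frequency of a linearized E-I pair whose weights are all
    multiplied by `scale` (a stand-in for synaptic depression)."""
    jac = np.array([[scale * w_ee - 1.0, -scale * w_ei],
                    [scale * w_ie, -1.0]]) / tau
    return float(np.sqrt(np.linalg.det(jac)) / (2 * np.pi))

# Assumed linear depression of the weights over 2 s.
times_s = np.linspace(0.0, 2.0, 5)
scales = 1.0 - 0.2 * times_s
drift_hz = [natural_frequency_hz(a) for a in scales]

for t, f in zip(times_s, drift_hz):
    print(f"t = {t:.1f} s, nominal resonance ~ {f:.1f} Hz")
```

In this toy setting the nominal resonance falls monotonically as the weights depress, which is the kind of drift a direction-selective architecture could exploit.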

References

- 1.May P, Tiitinen H. Human cortical processing of auditory events over time. NeuroReport 2001 Mar 5;12(3):573–7.

- 2.May P, Tiitinen H. Temporal binding of sound emerges out of anatomical structure and synaptic dynamics of auditory cortex. Frontiers in computational neuroscience 2013 Nov 7;7:152.

- 3.McCormick DA, Connors BW, Lighthall JW, Prince DA. Comparative electrophysiology of pyramidal and sparsely spiny stellate neurons of the neocortex. Journal of neurophysiology 1985 Oct 1;54(4):782–806.

P39 Large-scale cortical modes reorganize between infant sleep states and predict preterm development

James Roberts1, Anton Tokariev2, Andrew Zalesky3, Xuelong Zhao4, Sampsa Vanhatalo2, Michael Breakspear5, Luca Cocchi6

1QIMR Berghofer Medical Research Institute, Brain Modelling Group, Brisbane, Australia; 2University of Helsinki, Department of Clinical Neurophysiology, Helsinki, Finland; 3University of Melbourne, Melbourne Neuropsychiatry Centre, Melbourne, Australia; 4University of Pennsylvania, Department of Neuroscience, Philadelphia, United States of America; 5QIMR Berghofer Medical Research Institute, Systems Neuroscience Group, Brisbane, Australia; 6QIMR Berghofer Medical Research Institute, Clinical Brain Networks Group, Brisbane, Australia

Correspondence: James Roberts (james.roberts@qimrberghofer.edu.au)

BMC Neuroscience 2019, 20(Suppl 1):P39

Sleep architecture carries important information about brain health but mechanisms at the cortical scale remain incompletely understood. This is particularly so in infants, where there are two main sleep states: active sleep and quiet sleep, precursors to the adult REM and NREM. Here we show that active compared to quiet sleep in infants heralds a marked change from long- to short-range functional connectivity across broad-frequency neural activity. This change in cortical connectivity is attenuated following preterm birth and predicts visual performance at two years. Using eigenmodes of brain activity [1] derived from neural field theory [2], we show that active sleep primarily exhibits reduced energy in a large-scale, uniform mode of neural activity and slightly increased energy in two non-uniform anteroposterior modes. This energy redistribution leads to the emergence of more complex connectivity patterns in active sleep compared to quiet sleep. Preterm-born infants show an attenuation in this sleep-related reorganization of connectivity that carries novel prognostic information. We thus provide a mechanism for the observed changes in functional connectivity between sleep states, with potential clinical relevance.

Acknowledgments: A.T. was supported by the Finnish Cultural Foundation (Suomen Kulttuurirahasto; 00161034). A.T. and S.V. were also funded by the Academy of Finland (276523 and 288220) and the Sigrid Jusélius Foundation (Sigrid Juséliuksen Säätiö), as well as the Finnish Pediatric Foundation (Lastentautien tutkimussäätiö). J.R., A.Z., M.B., and L.C. are supported by the Australian National Health and Medical Research Council (J.R. 1144936 and 1145168, A.Z. 1047648, M.B. 1037196, L.C. 1099082 and 1138711). This work was also supported by the Rebecca L. Cooper Foundation (J.R., PG2018109) and the Australian Research Council Centre of Excellence for Integrative Brain Function (M.B., CE140100007).

References

- 1.Atasoy S, Donnelly I, Pearson J. Human brain networks function in connectome-specific harmonic waves. Nature communications 2016 Jan 21;7:10340.

- 2.Robinson PA, Zhao X, Aquino KM, Griffiths JD, Sarkar S, Mehta-Pandejee G. Eigenmodes of brain activity: Neural field theory predictions and comparison with experiment. NeuroImage 2016 Nov 15;142:79–98.

P40 Reliable information processing through self-organizing synfire chains

Thomas Ilett, David Hogg, Netta Cohen

University of Leeds, School of Computing, Leeds, United Kingdom

Correspondence: Thomas Ilett (tomilett@gmail.com)

BMC Neuroscience 2019, 20(Suppl 1):P40

Reliable information processing in the brain requires precise transmission of signals across large neuron populations that is reproducible and stable over time. Exactly how this is achieved remains an open question, but a large body of experimental data has pointed to the importance of synchronised firing patterns of cell assemblies in mediating precise sequential patterns of activity. Synfire chains provide an appealing theoretical framework to account for reliable transmission of information through a network, with potential for robustness to noise and synaptic degradation. Here, we use self-assembled synfire chain models to test the interplay between encoding capacity, robustness to noise and flexibility in learning new patterns. We first model synfire chain development as a self-assembly process from a randomly connected network of leaky integrate-and-fire (LIF) neurons subject to a variant of the spike-timing-dependent plasticity (STDP) learning rule (adapted from [1]). We show conditions for these networks to form chains (in some conditions even without external input) and characterise the encoding capacity of the network by presenting different input patterns that result in distinguishable chains of activation. We show that these networks develop different, often overlapping chains in response to different inputs. We further demonstrate the importance of inhibition for the long-term stability of the chains and test the robustness of our network to various degrees of neuronal and synaptic death. Finally, we explore the ability of the network to increase its encoding capacity by dynamically learning new inputs.
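
The building block of such networks, the leaky integrate-and-fire neuron, can be sketched in a few lines. This is a generic textbook formulation, not the network or plasticity rule used in this work: it omits synapses and STDP entirely, and the parameter values and the function name `simulate_lif` are assumptions made for illustration.

```python
def simulate_lif(drive_mv, duration_ms=1000.0, dt_ms=0.1,
                 tau_ms=20.0, v_rest=-70.0, v_thresh=-55.0, v_reset=-70.0):
    """Euler integration of tau * dV/dt = -(V - v_rest) + drive.

    Returns the list of spike times in ms. `drive_mv` is the constant input
    expressed as the depolarization it would cause at steady state.
    """
    v = v_rest
    spikes = []
    steps = int(round(duration_ms / dt_ms))
    for k in range(steps):
        v += dt_ms / tau_ms * (-(v - v_rest) + drive_mv)
        if v >= v_thresh:
            spikes.append((k + 1) * dt_ms)  # record the spike time
            v = v_reset                     # reset after the spike
    return spikes

weak = simulate_lif(drive_mv=10.0)    # steady state stays below threshold
strong = simulate_lif(drive_mv=20.0)  # steady state would exceed threshold
print(f"weak drive: {len(weak)} spikes, strong drive: {len(strong)} spikes")
```

A drive that cannot lift the membrane potential to threshold produces no spikes, while a stronger drive produces regular firing; in a chain, synchronous input from the previous group plays the role of the strong drive.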

Reference

- 1.Waddington A, Appleby PA, De Kamps M, Cohen N. Triphasic spike-timing-dependent plasticity organizes networks to produce robust sequences of neural activity. Frontiers in Computational Neuroscience 2012 Nov 12;6:88.

P41 Acetylcholine regulates redistribution of synaptic efficacy in neocortical microcircuitry

Cristina Colangelo

Blue Brain Project (BBP), Brain Mind Institute, EPFL, Lausanne, Switzerland, Geneva, Switzerland

Correspondence: Cristina Colangelo (cristina.colangelo@epfl.ch)

BMC Neuroscience 2019, 20(Suppl 1):P41

Acetylcholine is one of the most widely characterized neuromodulatory systems involved in the regulation of cortical activity. Cholinergic release from the basal forebrain controls neocortical network activity and shapes behavioral states such as learning and memory. However, how acetylcholine regulates the local cellular physiology and synaptic transmission that reconfigure global brain states remains poorly understood. To fill this knowledge gap, we analyzed whole-cell patch-clamp recordings from connected pairs of neocortical neurons to investigate how acetylcholine release modulates membrane properties and synaptic transmission. We found that bath application of 10 µM carbachol differentially redistributes the available synaptic efficacy and the short-term dynamics of excitatory and inhibitory connections. We propose that redistribution of synaptic efficacy by acetylcholine is a potential means to alter the content, rather than the gain, of information transfer at synaptic connections between specific cell types in the neocortex. Additionally, we provide a dataset that can serve as a reference to build data-driven computational models on the role of ACh in governing brain states.

P42 NeuroGym: A framework for training any model on more than 50 neuroscience paradigms

Manuel Molano-Mazon1, Guangyu Robert Yang2, Christopher Cueva2, Jaime de la Rocha1, Albert Compte3

1IDIBAPS, Theoretical Neurobiology, Barcelona, Spain; 2Columbia University, Center for Theoretical Neuroscience, New York, United States of America; 3IDIBAPS, Systems Neuroscience, Barcelona, Spain

Correspondence: Manuel Molano-Mazon (molano@clinic.cat)

BMC Neuroscience 2019, 20(Suppl 1):P42

It is becoming increasingly popular in systems neuroscience to train Artificial Neural Networks (ANNs) to investigate the neural mechanisms that allow animals to display complex behavior. Important aspects of brain function such as perception or working memory [2, 4] have been investigated using this approach, which has yielded new hypotheses about the computational strategies used by brain circuits to solve different behavioral tasks.

While ANNs are usually tuned for a small set of closely related tasks, the ultimate goal when training neural networks must be to find a model that can explain a wide range of experimental results collected across many different tasks. A necessary step towards that goal is to develop a large, standardized set of neuroscience tasks on which different models can be trained. Indeed, there is a large body of experimental work that hinges on a number of canonical behavioral tasks that have become a reference in the field (e.g. [2,4]) and that makes it possible to develop a general framework encompassing many relevant tasks on which neural networks can be trained.

Here we propose a comprehensive toolkit, NeuroGym, that allows training any network model on many established neuroscience tasks using Reinforcement Learning techniques. NeuroGym currently contains more than ten classical behavioral tasks, including working memory tasks (e.g. [4]), value-based decision tasks (e.g. [3]) and context-dependent perceptual categorization tasks (e.g. [2]). In providing this toolbox our aim is twofold: (1) to facilitate the evaluation of any network model on many tasks and thus evaluate its capacity to generalize to and explain different experimental datasets; (2) to standardize the way computational neuroscientists implement behavioral tasks, in order to promote benchmarking and replication.

Inheriting all functionalities from the machine learning toolkit Gym (OpenAI), NeuroGym allows a wide range of well-established machine learning algorithms to be easily trained on behavioral paradigms relevant for the neuroscience community. NeuroGym also incorporates several properties and functions (e.g. realistic time step or separation of training into trials) that are specific to the protocols used in neuroscience.
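
What a trial-based task with an explicit time step might look like under the `reset`/`step` convention of Gym can be sketched as follows. This is not NeuroGym's API: the class `TwoChoiceTask`, its parameters, the observation layout and the reward values are all hypothetical, and the class deliberately avoids importing Gym so that the example stays self-contained.

```python
import random

class TwoChoiceTask:
    """Hypothetical two-alternative task following Gym's reset/step convention.

    Actions: 0 = fixate, 1 = choose left, 2 = choose right.
    Observations: [fixation cue, left evidence, right evidence].
    """

    def __init__(self, dt_ms=100, stim_ms=500, seed=0):
        self.dt_ms = dt_ms                    # duration of one step in ms
        self.stim_steps = stim_ms // dt_ms    # steps in the stimulus period
        self.rng = random.Random(seed)
        self.t = 0
        self.target = 1

    def reset(self):
        """Start a new trial and return the first observation."""
        self.t = 0
        self.target = self.rng.choice([1, 2])
        return self._observation()

    def _observation(self):
        if self.t < self.stim_steps:
            left = 1.0 if self.target == 1 else 0.0
            return [1.0, left, 1.0 - left]
        return [0.0, 0.0, 0.0]  # fixation cue off: time to respond

    def step(self, action):
        """Advance one time step; returns (observation, reward, done, info)."""
        if self.t < self.stim_steps:
            # Responding during the stimulus breaks fixation and aborts the trial.
            reward = 0.0 if action == 0 else -1.0
            done = action != 0
        else:
            reward = 1.0 if action == self.target else 0.0
            done = True
        self.t += 1
        return self._observation(), reward, done, {"target": self.target}

def run_ideal_trial(env):
    """Fixate while the cue is on, then report the side with more evidence."""
    obs = env.reset()
    choice, done, reward = 0, False, 0.0
    while not done:
        if obs[0] == 1.0:
            choice = 1 if obs[1] > obs[2] else 2
            obs, reward, done, _ = env.step(0)
        else:
            obs, reward, done, _ = env.step(choice)
    return reward

print(run_ideal_trial(TwoChoiceTask()))
```

Keeping the task behind `reset` and `step` is what lets any agent written for Gym-style environments be trained on it without task-specific glue code.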

Furthermore, the toolkit includes various modifier functions that greatly expand the space of available tasks. For instance, users can introduce trial-to-trial correlations onto any task [1]. Also, tasks can be combined so as to test the capacity of a given model to perform two tasks simultaneously (e.g. to study interference between two tasks [5]).

In summary, NeuroGym constitutes an easy-to-use toolkit that considerably facilitates the evaluation of a network model that has been tuned for a particular task on more than 50 tasks with no additional work, and proposes a framework to which computational neuroscience practitioners can contribute by adding tasks of their interest, using a straightforward template.

Acknowledgments: This work was supported by the Spanish Ministry of Science, Innovation and Universities, the European Regional Development Fund (grant BFU2015-65315-R), the Generalitat de Catalunya (grants 2017 SGR 1565 and 2017-BP-00305) and the European Research Council (ERC-2015-CoG–683209_PRIORS).

References

- 1.Hermoso-Mendizabal A, Hyafil A, Rueda-Orozco PE, Jaramillo S, Robbe D, de la Rocha J. Response outcomes gate the impact of expectations on perceptual decisions. bioRxiv 2019 Jan 1:433409.

- 2.Mante V, Sussillo D, Shenoy KV, Newsome WT. Context-dependent computation by recurrent dynamics in prefrontal cortex. Nature 2013 Nov;503(7474):78.

- 3.Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature 2006 May;441(7090):223.

- 4.Romo R, Brody CD, Hernández A, Lemus L. Neuronal correlates of parametric working memory in the prefrontal cortex. Nature 1999 Jun;399(6735):470.

- 5.Zhang X, Yan W, Wang W, et al. Active information maintenance in working memory by a sensory cortex. bioRxiv 2018 Jan 1:385393.
