P20 Oscillations in working memory and neural binding: a mechanism for multiple memories and their interactions

Jason Pina1, G. Bard Ermentrout2, Mark Bodner3

1York University, Physics and Astronomy, Toronto, Canada; 2University of Pittsburgh, Department of Mathematics, Pittsburgh, PA, United States of America; 3Mind Research Institute, Irvine, United States of America

Correspondence: Jason Pina (jay.e.pina@gmail.com)

BMC Neuroscience 2019, 20(Suppl 1):P20

Working memory is a form of short-term memory that appears to be limited in capacity to 3–5 items. It is well known that neurons increase their firing rates from a low baseline state while information is retained during working memory tasks. However, there is evidence of oscillatory firing rates in the active states, both in individual-neuron and in aggregate (for example, LFP and EEG) dynamics. Additionally, each memory may be composed of several different items, such as shape, color, and location. The neural correlate of the association of several items, or neural binding, is not well understood, but it may be the synchronous firing of populations of neurons. Thus, the phase information of such oscillatory ensemble activity is a natural candidate for distinguishing between bound (synchronous oscillations) and distinct (out-of-phase oscillations) items held actively in working memory.

Here, we explore a population firing rate model that exhibits bistability between a low baseline firing rate and a high, oscillatory firing rate. Coupling several of these populations together to form a firing rate network allows for competitive oscillatory dynamics, whereby different populations may be pairwise synchronous or out-of-phase, corresponding to bound or distinct items in memory, respectively. We find that up to 3 populations may oscillate out-of-phase with plausible model connectivities and parameter values, a result that is consistent with working memory capacity. The formulation of the model allows us to better examine from a dynamical systems perspective how these states arise as bifurcations of steady states and periodic orbits. In particular, we look at the ranges of coupling strengths and synaptic time scales that allow for synchronous and out-of-phase attracting states. We also explore how varying patterns of selective stimuli can produce and switch between rich sets of dynamics that may be relevant to working memory states and their transitions.
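The phase relations that the abstract uses to separate bound from distinct items can be read directly off simulated population rates. The sketch below is a minimal illustration and not the authors' model. It assumes that each population oscillates at one common, known frequency. The function names and the π/6 tolerance for "in phase" are hypothetical choices.

```python
import numpy as np


def relative_phase(x, y, freq, t):
    """Phase of y relative to x in radians, wrapped to (-pi, pi].

    Projects each mean-removed rate trace onto exp(-i*2*pi*freq*t); assumes both
    traces oscillate at (approximately) the same known frequency `freq` (Hz).
    """
    basis = np.exp(-2j * np.pi * freq * t)
    px = np.sum((x - x.mean()) * basis)
    py = np.sum((y - y.mean()) * basis)
    return float(np.angle(py / px))


def classify_pair(x, y, freq, t, tol=np.pi / 6):
    """Label a pair of populations 'bound' (near in-phase) or 'distinct' (out of phase)."""
    return "bound" if abs(relative_phase(x, y, freq, t)) < tol else "distinct"


# Hypothetical 10 Hz rate traces sampled at 1 kHz for exactly 1 s
t = np.arange(1000) / 1000.0
x = 1.0 + np.sin(2 * np.pi * 10.0 * t)
y = 1.0 + np.sin(2 * np.pi * 10.0 * t - 2 * np.pi / 3)
print(classify_pair(x, x, 10.0, t), classify_pair(x, y, 10.0, t))  # bound distinct
```

Consider three populations locked at offsets of 0, 2π/3 and 4π/3, which is one possible out-of-phase arrangement. In that case every pair would be labelled distinct.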

P21 DeNSE: modeling neuronal morphology and network structure in silico

Tanguy Fardet1, Alessio Quaresima2, Samuel Bottani2

1University of Tübingen, Computer Science Department - Max Planck Institute for Biological Cybernetics, Tübingen, Germany; 2Université Paris Diderot, Laboratoire Matière et Systèmes Complexes, Paris, France

Correspondence: Tanguy Fardet (tanguy.fardet@tuebingen.mpg.de)

BMC Neuroscience 2019, 20(Suppl 1):P21

Neural systems develop and self-organize into complex networks that can generate stimulus-specific responses. Neurons grow into various morphologies, which influence their activity and the structure of the resulting network. Different network topologies can in turn display very different behaviors, which suggests that neuronal structure and network connectivity strongly influence the set of functions that a group of neurons can sustain. To investigate this, I developed a new simulation platform, DeNSE. It is aimed at studying the morphogenesis of neurons and networks, and at testing how interactions between neurons and their surroundings can shape the emergence of specific properties.

The goal of this new simulator is to serve as a general framework for studying the dynamics of neuronal morphogenesis, providing predictive tools to investigate how neuronal structures emerge in complex spatial environments. The software generalizes models present in previous simulators [1, 2], gives access to new mechanisms, and accounts for spatial constraints and neuron-neuron interactions. It has primarily been applied to two lines of research: a) neuronal cultures or devices, whose structures are still poorly defined and strongly influenced by interactions or spatial constraints [3]; b) morphological determinants of neuronal disorders, analyzing how changes at the cellular scale affect the properties of the whole network [4].

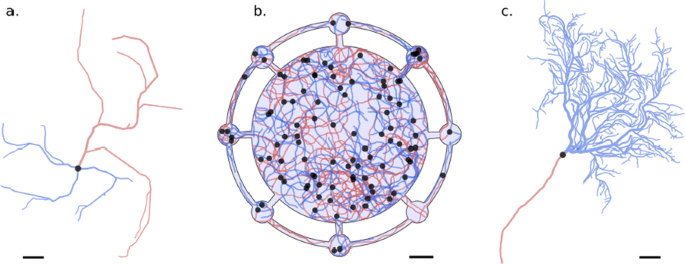

I illustrate how DeNSE makes it possible to investigate neuronal morphology at different scales, from single cells to the network level, notably through cell-cell and cell-surroundings interactions (Fig. 1). At the cellular level, I show how branching mechanisms affect neuronal morphology, introducing new models to account for interstitial branching and the influence of the environment. At intermediate levels, I show how DeNSE can reproduce interactions between neurites, and how these interactions contribute to the final morphology and introduce correlations in the network structure. At the network level, I stress how networks obtained through a growth process differ both from simple generative models and from more complex network models in which connectivity comes from overlaps of real cell morphologies. Finally, I demonstrate how DeNSE can provide biologically relevant structures for studying spatio-temporal activity patterns in neuronal cultures and devices. In these structures, the morphologies of the neurons and the network are not well defined but have been shown to play a significant role. There, DeNSE successfully reproduces experimental setups, predicts the influence of spatial constraints, and makes it possible to predict their electrical activity. Such a tool can therefore be extremely useful for testing structures and hypotheses prior to actual experiments, saving time and resources.

Structures generated with DeNSE; axons are in red, dendrites in blue, and cell bodies in black; scale bars are 50 microns. a Multipolar cell. b Neuronal growth in a structured neuronal device (light blue background) with a central chamber and small peripheral chambers; interactions between neurites can be seen notably through the presence of some fasciculated axon bundles. c Purkinje cell
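Neurite elongation and branching are often modelled as a stochastic walk of growth cones. The sketch below is a generic illustration under stated assumptions: a fixed step length, Gaussian heading noise, a constant per-step branching probability, and daughter branches at ±60°. It is not DeNSE's interface. It implements simple tip splitting rather than the interstitial branching models described above, and it omits neurite-neurite and environment interactions.

```python
import numpy as np


def grow_neurite(n_steps, step_um=1.0, angle_sd=0.1, branch_prob=0.02, seed=0):
    """Toy 2D growth-cone model (hypothetical; not DeNSE's API or models).

    Every active tip takes a fixed-length step along a heading that drifts by
    Gaussian noise (a persistent random walk). With probability `branch_prob`
    per step a tip also spawns a daughter tip at +/-60 degrees.
    Returns a list of (start_xy, end_xy) segments in microns.
    """
    rng = np.random.default_rng(seed)
    tips = [(np.zeros(2), 0.0)]  # (position, heading in radians)
    segments = []
    for _ in range(n_steps):
        next_tips = []
        for pos, theta in tips:
            theta = theta + rng.normal(0.0, angle_sd)  # small heading change
            new_pos = pos + step_um * np.array([np.cos(theta), np.sin(theta)])
            segments.append((pos, new_pos))
            next_tips.append((new_pos, theta))
            if rng.random() < branch_prob:  # stochastic tip branching
                next_tips.append((new_pos, theta + rng.choice([-1.0, 1.0]) * np.pi / 3))
        tips = next_tips
    return segments


segments = grow_neurite(200, branch_prob=0.01)
print(len(segments), "segments")
```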

References

- 1. Koene, R et al. NETMORPH: a framework for the stochastic generation of large-scale neuronal networks with realistic neuron morphologies. Neuroinformatics 2009, 7(3), 195–210.

- 2. Torben-Nielsen, B et al. Context-aware modeling of neuronal morphologies. Frontiers in Neuroanatomy 2014, 8(92).

- 3. Renault, R et al. Asymmetric axonal edge guidance: a new paradigm for building oriented neuronal networks. Lab Chip 2016, 16(12), 2188–2191.

- 4. Milatovic, D et al. Morphometric Analysis in Neurodegenerative Disorder. Current Protocols in Toxicology 2010, 43(1):12–6.

P22 Sponge astrocyte model: volume effects in a 2D model space simplification

Darya Verveyko1, Andrey Verisokin1, Dmitry Postnov2, Alexey R. Brazhe3

1Kursk State University, Department of Theoretical Physics, Kursk, Russia; 2Saratov State University, Institute for Physics, Saratov, Russia; 3Lomonosov Moscow State University, Department of Biophysics, Moscow, Russia

Correspondence: Darya Verveyko (allegroform@mail.ru)

BMC Neuroscience 2019, 20(Suppl 1):P22

Calcium signaling in astrocytes is crucial for nervous system function. Earlier we proposed a 2D astrocyte model of calcium wave dynamics [1], in which waves were driven by local stochastic surges of glutamate that simulated synaptic activity. The main idea of the model was to reproduce spatially segregated mechanisms belonging to regions with different dynamics: (i) the core, where calcium is exchanged mainly with the endoplasmic reticulum (ER), and (ii) the peripheral compartment, where Ca dynamics are dominated by currents through the plasma membrane (PM).

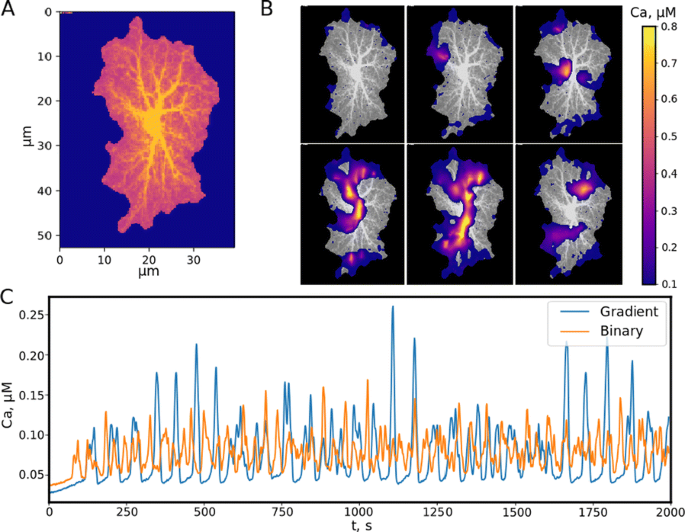

Real astrocytes are obviously not binary. There is a graded transition from thick branches to branchlets and to leaflets, characterized primarily by the surface-to-volume ratio (SVR). Moreover, leaflet regions of the template contain not only the astrocyte itself but also the neuropil. We encode the structural features of the astrocyte in its color representation. Black corresponds to astrocyte-free regions, and the blue channel indicates the presence of an astrocyte. Instead of binary leaflet-branch segregation, we introduce the astrocyte volume fraction (AVF) parameter. It indicates what fraction of the volume at each point of the 2D model is occupied by the astrocyte in the real 3D cell (the rest is neuropil). AVF is encoded by the red channel intensity (Fig. 1A). The soma and thick-branch regions contain only the astrocyte (AVF = 1). The non-astrocytic content increases from the soma toward the edges of the astrocyte through the leaflets. AVF therefore decreases, and the red channel falls to its minimum value of 0.1 at the astrocyte border. To describe the relative contributions of exchange through the PM and the ER, we introduce the SVR parameter, which depends on AVF through a reversed (decreasing) sigmoid. SVR is maximal at the edges of the leaflets and minimal in the soma.

a AVF representation of the 2D image template, obtained as the maximum intensity projection of an experimental 3D astrocyte image; numbers from 1 to 6 indicate regions of interest (ROI). b Calcium waves in a local astrocyte. c The average calcium concentration in the model with binary geometry (red line) and in the proposed model (blue line)

The implementation of AVF and SVR effects is based on the following reasoning. Larger AVF (correspondingly, smaller SVR) reflects Ca dynamics dominated by ER exchange (IP3R-mediated) and less input from PM mechanisms (IP3 synthesis and PM-mediated Ca currents). Larger SVR, in turn, reflects the underlying tortuosity of the astrocytic cytoplasm and is modeled by attenuating the apparent diffusion coefficients for IP3 and Ca. Finally, the volumes taken up by astrocytic cytoplasm are unequal. As a result, small concentration changes in areas with high AVF cause larger concentration changes in neighboring areas with low AVF.
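The text fixes only qualitative relations. AVF comes from the red channel, with a minimum of 0.1 at the border and a value of 1 in the soma and thick branches. SVR falls with AVF along a reversed sigmoid. The same exchange also produces larger concentration changes where AVF is small. The sketch below covers these three relations. It assumes a linear red-to-AVF map and uses illustrative sigmoid parameters that are not the authors' values. The `transfer` helper only shows the volume bookkeeping and is not the model's diffusion scheme.

```python
import numpy as np


def avf_from_red(red, red_max=255.0):
    """Astrocyte volume fraction from red-channel intensity.

    Assumption: a linear map, clipped to [0.1, 1] (0.1 is the border minimum
    stated in the text; AVF = 1 in soma and thick branches).
    """
    return np.clip(np.asarray(red, dtype=float) / red_max, 0.1, 1.0)


def svr_from_avf(avf, svr_min=1.0, svr_max=10.0, avf_half=0.5, slope=10.0):
    """Reversed sigmoid: SVR is high where AVF is low (leaflet edges) and low
    where AVF -> 1 (soma). All four parameters are hypothetical."""
    return svr_min + (svr_max - svr_min) / (1.0 + np.exp(slope * (avf - avf_half)))


def transfer(c_a, c_b, avf_a, avf_b, amount):
    """Move `amount` of ions (per unit pixel volume) from pixel a to pixel b.

    Conservation of ions means each concentration changes by amount / AVF,
    so the same flux shifts concentration more in the low-AVF pixel.
    """
    return c_a - amount / avf_a, c_b + amount / avf_b


print(svr_from_avf(avf_from_red([25, 128, 255])))
print(transfer(1.0, 1.0, avf_a=1.0, avf_b=0.1, amount=0.01))  # b rises 10x more than a falls
```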

Simulations of the proposed model show the formation of calcium waves (Fig. 1B), which propagate throughout the astrocyte template from the borders towards the center. Compared with the previous binary segmentation model, the proposed, biophysically more realistic sponge model shows a greater calcium elevation response, i.e. the intensity of the formed waves is higher, but the basal calcium level is lower (Fig. 1C). At the same time, the threshold for stable wave existence rises, because increasing AVF acts as a barrier that blocks small glutamate releases, reducing the number of wave sources. Nevertheless, a sufficiently large glutamate release produces a wide-area wave that quickly occupies the leaflets and moves toward the astrocyte soma.

Acknowledgements: This work is supported by the RFBR grant 17-00-00407.

Reference

- 1. Verveyko DV, et al. Raindrops of synaptic noise on dual excitability landscape: an approach to astrocyte network modelling. Proceedings SPIE 2018, 10717, 107171S.

P23 Sodium-calcium exchangers modulate excitability of spatially distributed astrocyte networks

Andrey Verisokin1, Darya Verveyko1, Dmitry Postnov2, Alexey R. Brazhe3

1Kursk State University, Department of Theoretical Physics, Kursk, Russia; 2Saratov State University, Institute for Physics, Saratov, Russia; 3Lomonosov Moscow State University, Department of Biophysics, Moscow, Russia

Correspondence: Andrey Verisokin (ffalconn@mail.ru)

BMC Neuroscience 2019, 20(Suppl 1):P23

Previously we proposed two models of astrocytic calcium dynamics modulated by local synaptic activity. The first [1] is based on inositol trisphosphate-dependent exchange with intracellular calcium stores. It takes into account specific topological features, namely the different properties of the thick branches and soma versus the thin branches. The second, a local model of a single astrocyte segment [2], considers the sodium-calcium exchanger (NCX) and the Na+ response to synaptic glutamate. In this work we combine these two models and extend them to a spatially distributed astrocyte network. Our main goal is to study the initiation of cytoplasmic calcium waves and their motion through the astrocyte network.

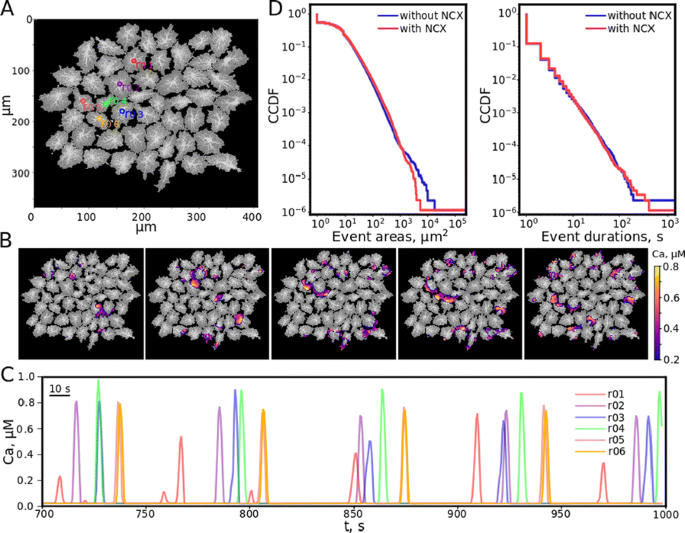

The astrocyte cell is represented in the model by a 2D projection of a real cell micrograph, indicated in blue. The intensity of the red channel in each pixel shows the cytoplasm/neuropil volume ratio. We introduce this volume characteristic to describe differences in diffusion rates. It also describes differences in the contributions of ion currents through the endoplasmic reticulum membrane and the plasma membrane in the soma, branches, and leaflets. We further connect various astrocyte cell templates into a network (Fig. 1A).

a Astrocyte network simulation template; the numbered circles indicate some regions of interest (ROI). b An example of a spreading calcium wave. c Calcium dynamics in ROIs illustrate quasi-pacemaker behavior. d CCDF for areas and durations of calcium excitation for the models with and without NCX regulation (blue and red lines, respectively)

The proposed mathematical model includes 7 variables:
- calcium concentrations in the cytosol and endoplasmic reticulum;
- inositol trisphosphate and sodium concentrations in the cytosol;
- extracellular glutamate concentration;
- the inositol trisphosphate receptor and NCX inactivation gating variables h and g.

Synaptic glutamate activity is described by quantal release triggered by a spike train drawn from a homogeneous Poisson process. A detailed description of the model equations and parameters, including their biophysical meaning, is provided in [1, 2].
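The glutamate input described above can be sketched as follows. Drawing exponential inter-spike intervals is one standard way to sample a homogeneous Poisson process. Treating each spike as one quantum that decays exponentially is an assumption made here for illustration, because the release kinetics are given in [1, 2] rather than in this abstract. The quantum size and decay time are placeholders.

```python
import numpy as np


def poisson_spike_times(rate_hz, t_max_s, rng):
    """Homogeneous Poisson spike train: i.i.d. exponential inter-spike intervals."""
    times = []
    t = rng.exponential(1.0 / rate_hz)
    while t < t_max_s:
        times.append(t)
        t += rng.exponential(1.0 / rate_hz)
    return np.array(times)


def glutamate_trace(spike_times, t, quantum=1.0, tau_s=0.05):
    """Extracellular glutamate as a sum of quanta, each decaying exponentially.

    The quantum size and decay time `tau_s` are hypothetical placeholders.
    """
    g = np.zeros_like(t, dtype=float)
    for s in spike_times:
        after = t >= s
        g[after] += quantum * np.exp(-(t[after] - s) / tau_s)
    return g


rng = np.random.default_rng(0)
spikes = poisson_spike_times(rate_hz=5.0, t_max_s=10.0, rng=rng)
t = np.arange(0.0, 10.0, 1e-3)
glu = glutamate_trace(spikes, t)
print(len(spikes), "release events; peak glutamate", glu.max())
```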

The numerical solution of the unified model confirms the emergence of calcium waves, which arise from synaptic activity and spread over the astrocyte network (Fig. 1B). Depending on the excitation level and the network topology, a combination of two scenarios emerges. In the first, calcium excitation waves capture the entire astrocyte network. In the second, local waves exist only within one cell and do not propagate beyond its borders. The first scenario includes a regime in which one of the cells acts as a pacemaker, i.e. a source of periodic calcium waves (Fig. 1C). Statistics on the area and duration of calcium excitation events with and without NCX regulation were obtained using complementary cumulative distribution functions (CCDF). The presence of NCX decreases the average area affected by a global calcium wave during excitation. Meanwhile, the number of events of a given duration is, on average, the same for both models (Fig. 1D). However, the Na/Ca exchanger promotes calcium waves, enabling the formation of longer-lived waves.
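A CCDF like those in Fig. 1D can be computed from a list of event sizes or durations. The sketch below uses the P(X ≥ x) convention; some authors use P(X > x) instead. The event areas in the usage lines are made-up numbers, not results from the model. CCDFs of heavy-tailed event statistics are usually plotted on log-log axes.

```python
import numpy as np


def ccdf(values):
    """Empirical complementary CDF: returns sorted unique x and P(X >= x)."""
    v = np.sort(np.asarray(values, dtype=float))
    x, first_idx = np.unique(v, return_index=True)  # first position of each value
    return x, 1.0 - first_idx / v.size


# Hypothetical event areas (pixels) from two simulation runs
areas_with_ncx = [3, 5, 5, 8, 13, 21]
areas_without_ncx = [3, 8, 13, 21, 34, 55]
for label, a in [("with NCX", areas_with_ncx), ("without NCX", areas_without_ncx)]:
    x, p = ccdf(a)
    print(label, list(zip(x, p)))
```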

Acknowledgements: This study was supported by Russian Science Foundation, grant 17-74-20089.

References

- 1. Verveyko DV, et al. Raindrops of synaptic noise on dual excitability landscape: an approach to astrocyte network modelling. Proceedings SPIE 2018, 10717, 107171S.

- 2. Brazhe AR, et al. Sodium–calcium exchanger can account for regenerative Ca2+ entry in thin astrocyte processes. Frontiers in Cellular Neuroscience 2018, 12, 250.

P24 Building a computational model of aging in visual cortex

Seth Talyansky1, Braden Brinkman2

1Catlin Gabel School, Portland, OR, United States of America; 2Stony Brook University, Department of Neurobiology and Behavior, Stony Brook, NY, United States of America

Correspondence: Seth Talyansky (talyanskys@catlin.edu)

BMC Neuroscience 2019, 20(Suppl 1):P24

The mammalian visual system has been the focus of countless experimental and theoretical studies designed to elucidate principles of sensory coding. Most theoretical work has focused on networks intended to reflect developing or mature neural circuitry, in both health and disease. Few computational studies have attempted to model changes that occur in neural circuitry as an organism ages non-pathologically. In this work we begin to close this gap, studying how physiological changes correlated with advanced age impact the computational performance of a spiking network model of primary visual cortex (V1).

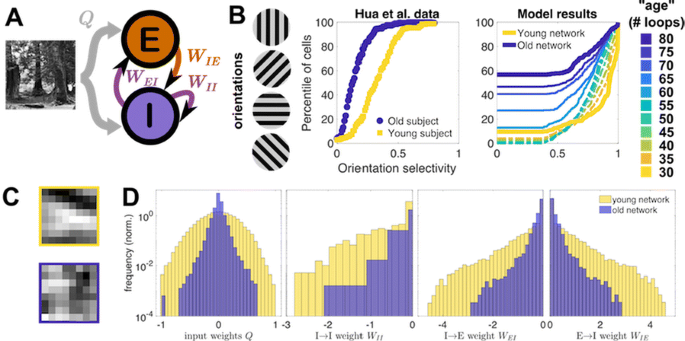

Senescent brain tissue has been found to show increased excitability [1], decreased GABAergic inhibition [2], and decreased selectivity to the orientation of grating stimuli [1]. While the underlying processes driving these changes with age are far from clear, we find that these observations can be replicated by a straightforward, biologically-interpretable modification to a spiking network model of V1 trained on natural image inputs using local synaptic plasticity rules [3]. Specifically, if we assume the homeostatically-maintained excitatory firing rate increases with “age” (duration of training), a corresponding decrease in network inhibition follows naturally due to the synaptic plasticity rules that shape network architecture during training. The resulting aged network also exhibits a loss in orientation selectivity (Fig. 1).

a Model schematic (see [3]). b Cumulative distribution of experimental [1] and model orientation selectivities. Model “ages” correspond to training loops as target firing increases. Thin dashed (solid) lines correspond to early (late) stages of aging. c An example neuron’s young (top) vs. old (bottom) receptive field. d Young vs. old distributions of input and lateral weights
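The abstract attributes the loss of inhibition to the plasticity rules of [3] once the homeostatic target rate rises. As a rough illustration only, the sketch below uses a Földiák-style anti-Hebbian rule for lateral inhibition and a homeostatic threshold rule. These forms and all parameter values are assumptions and may differ from the exact rules in [3]. With activity held fixed, raising the target rate p enlarges the p² term, so each update weakens inhibition. In a full network the activity would adapt as well.

```python
import numpy as np


def update_inhibition(W, n, p, alpha=0.01):
    """Foldiak-style anti-Hebbian rule (assumed form): W_ij grows when units
    i and j co-fire more often than the target p**2 and shrinks otherwise.
    W holds non-negative inhibitory strengths with no self-connections."""
    dW = alpha * (np.outer(n, n) - p ** 2)
    np.fill_diagonal(dW, 0.0)
    return np.maximum(W + dW, 0.0)


def update_threshold(theta, n, p, gamma=0.01):
    """Homeostatic threshold: units firing above the target rate p raise theta."""
    return theta + gamma * (n - p)


# Same activity, two target rates: a higher "aged" target weakens inhibition
W0 = np.full((4, 4), 0.5)
np.fill_diagonal(W0, 0.0)
n = np.full(4, 0.05)  # hypothetical observed spike counts per image
W_young = update_inhibition(W0, n, p=0.05)
W_old = update_inhibition(W0, n, p=0.10)
print(W_young[0, 1], W_old[0, 1])
```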

In addition to qualitatively replicating previously observed changes, our trained model allows us to probe how the network properties evolve during aging. For example, we statistically characterize how the receptive fields of model neurons change with age: we find that 31% of young model neuron receptive fields are well-characterized as Gabor-like; this drops to 6.5% in the aged network. Only 1.5% of neurons were Gabor-like in both youth and old age, while 5% of neurons that were not classified as Gabor-like in youth were in old age. As one might intuit, these changes are tied to the decrease in orientation selectivity: by remapping the distribution of strengths of the young receptive fields to match the strength distribution of the old receptive fields, while otherwise maintaining the receptive field structure, we can show that orientation selectivity is improved at every age.
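Two quantities in this paragraph lend themselves to a short sketch: an orientation selectivity index, and the bookkeeping of Gabor-like classifications across ages. The index below is one common global definition based on the circular variance of the tuning curve. This is an assumption, since the abstract does not state which index was used, and the Gabor classification itself is taken as given input. With the reported figures, the persisting and gained fractions add up to the old fraction (1.5% + 5% = 6.5%), which `gabor_transitions` reproduces by construction.

```python
import numpy as np


def orientation_selectivity(responses, angles_rad):
    """Global orientation selectivity index |sum r e^{2i theta}| / sum r.

    0 = equal response to all orientations, 1 = response to a single orientation.
    """
    r = np.asarray(responses, dtype=float)
    return float(np.abs(np.sum(r * np.exp(2j * angles_rad))) / np.sum(r))


def gabor_transitions(young, old):
    """Fractions of neurons Gabor-like when young, when old, in both, and gained with age."""
    young = np.asarray(young, dtype=bool)
    old = np.asarray(old, dtype=bool)
    return {"young": young.mean(), "old": old.mean(),
            "both": (young & old).mean(), "gained": (~young & old).mean()}


angles = np.linspace(0.0, np.pi, 8, endpoint=False)
print(orientation_selectivity([1, 1, 1, 1, 1, 1, 1, 1], angles))  # untuned, ~0
print(orientation_selectivity([0, 0, 3, 0, 0, 0, 0, 0], angles))  # sharply tuned, ~1
```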

Our results demonstrate that deterioration of homeostatic regulation of excitatory firing, coupled with long-term synaptic plasticity, is a sufficient mechanism to reproduce features of observed biogerontological data, specifically declines in selectivity and inhibition. This suggests a potential causal link between dysregulation of neuron firing and age-induced changes in brain physiology and performance. This does not rule out deeper underlying causes or other mechanisms that could give rise to these changes. Nevertheless, our approach opens new avenues for exploring these mechanisms in greater depth and for making predictions for future experiments.

References

- 1. Hua, Li, He, et al. Functional degradation of visual cortical cells in old cats. Neurobiol. Aging 2006, 27, 155–162.

- 2. Hua, Kao, Sun, et al. Decreased proportion of GABA neurons accompanies age-related degradation of neuronal function in cat striate cortex. Brain Research Bulletin 2008, 75, 119–125.

- 3. King, Zylberberg, DeWeese. Inhibitory Interneurons Decorrelate Excitatory Cells to Drive Sparse Code Formation in a Spiking Model of V1. Journal of Neuroscience 2013, 33, 5475–5485.

P25 Toward a non-perturbative renormalization group analysis of the statistical dynamics of spiking neural populations

Braden Brinkman

Stony Brook University, Department of Neurobiology and Behavior, Stony Brook, NY, United States of America

Correspondence: Braden Brinkman (braden.brinkman@stonybrook.edu)

BMC Neuroscience 2019, 20(Suppl 1):P25

Understanding how the brain processes sensory input and performs computations necessarily requires understanding the collective behavior of networks of neurons. The tools of statistical physics are well suited to this task, but neural populations present several challenges:
- neurons are organized in a complicated web of connections rather than the crystalline arrangements for which statistical physics tools were developed;
- neural dynamics are often far from equilibrium;
- neurons communicate not by gradual changes in their membrane potential but by all-or-nothing spikes.

These all-or-nothing spike dynamics make it difficult to treat neuronal network models using field-theoretic techniques. Nevertheless, Ocker et al. [1] recently formulated such a representation for a stochastic spiking model and derived diagrammatic rules to calculate perturbative corrections to the mean-field approximation. In this work we use an alternative representation of this model that is amenable to the methods of the non-perturbative renormalization group (NPRG). The NPRG has successfully elucidated the different phases of collective behavior in several non-equilibrium models in statistical physics. In particular, we use the NPRG to calculate how stochastic fluctuations modify the nonlinear transfer function of the network, which determines the mean neural firing rates as a function of input, and how these changes depend on network structure. Specifically, consider neurons with firing rates r that receive input current I and are coupled by synaptic connections J. The mean-field approximation for their rates is r = f(I + J ∗ r), where f(x) is the nonlinear firing rate of a neuron conditioned on its input x. We show exactly that the true mean, accounting for statistical fluctuations, obeys an equation of the same form, r = U(I + J ∗ r), where U(x) is an effective nonlinearity to be calculated using NPRG approximation methods.
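Once f, or the effective nonlinearity U, is known, the self-consistency equation can be solved numerically. The sketch below assumes stationary rates, so that J ∗ r becomes a matrix-vector product with the time-integrated coupling. Damped fixed-point iteration is one simple solver choice, and it converges only when the map is contracting, for example under weak coupling. The exponential nonlinearity and the coupling values are illustrative assumptions.

```python
import numpy as np


def mean_field_rates(f, I, J, damping=0.5, tol=1e-12, max_iter=10_000):
    """Solve r = f(I + J r) for stationary rates by damped fixed-point iteration.

    Assumes a stationary state, so the temporal convolution J * r reduces to a
    matrix product with J the time-integrated coupling kernel. Passing an
    effective nonlinearity U instead of f solves r = U(I + J r) the same way.
    """
    I = np.asarray(I, dtype=float)
    J = np.asarray(J, dtype=float)
    r = np.zeros_like(I)
    for _ in range(max_iter):
        r_new = (1.0 - damping) * r + damping * f(I + J @ r)
        if np.max(np.abs(r_new - r)) < tol:
            return r_new
        r = r_new
    raise RuntimeError("fixed-point iteration did not converge")


# Hypothetical example: exponential nonlinearity, two mutually inhibiting neurons
I = np.array([-1.0, -1.0])
J = np.array([[0.0, -0.5], [-0.5, 0.0]])
r = mean_field_rates(np.exp, I, J)
print(r, np.exp(I + J @ r))
```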

Reference

- 1. Ocker G, Josić K, Shea-Brown E, Buice M. Linking Structure and Activity in Nonlinear Spiking Networks. PLoS Computational Biology 2017, 13(6): e1005583.

P26 Sensorimotor strategies and neuronal representations of whisker-based object recognition in mice barrel cortex

Ramon Nogueira1, Chris Rodgers1, Stefano Fusi2, Randy Bruno1

1Columbia University, Center for Theoretical Neuroscience, New York, NY, United States of America; 2Columbia University, Zuckerman Mind Brain Behavior Institute, New York, United States of America

Correspondence: Ramon Nogueira (rnogueiramanas@gmail.com)

BMC Neuroscience 2019, 20(Suppl 1):P26

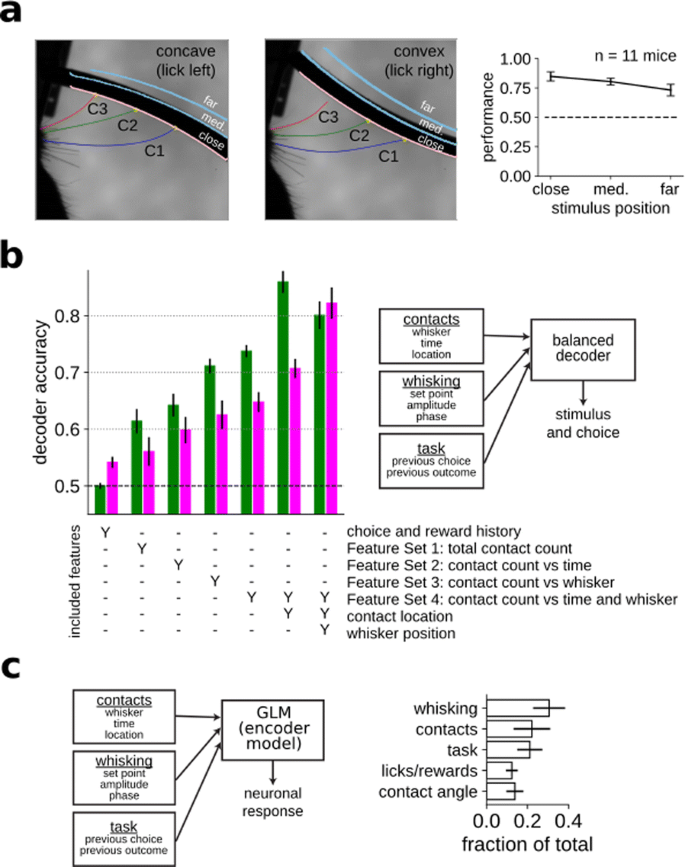

Humans and other animals can identify objects by active touch, which combines coordinated exploratory motion with tactile sensation. Rodents, and in particular mice, scan objects by active whisking, which allows them to form an internal representation of the physical properties of the object. To elucidate the behavioral and neural mechanisms underlying this ability, we developed a novel curvature discrimination task for head-fixed mice that challenges them to discriminate concave from convex shapes (Fig. 1a). On each trial, a curved shape was presented within reach of the mouse's whiskers, and the mouse had to lick left for concave and right for convex shapes. Whisking and contacts were monitored with high-speed video. Mice learned the task well, and their performance plateaued at 75.7% correct on average (chance: 50% correct).

a Mice were trained to perform a curvature discrimination task. The identity and position of each whisker was monitored with high-speed video. b By increasing the complexity of the regressors used to predict stimulus and choice, we identified the most informative features and the features driving behavior. c Neurons in the barrel cortex encode a myriad of sensory and task related variables

Most previous work has relied on mice detecting the presence or location of a simple object with a single whisker. It is therefore a priori unclear which sensorimotor features are important for more complex tasks such as curvature discrimination. To characterize them, we trained a classifier to identify either the stimulus identity or the mouse's choice on each trial (Fig. 1b). The classifier used the entire suite of sensorimotor variables that could potentially drive behavior (whisker position, contact timing and position, contact kinematics, etc.), as well as task-related variables that could also affect behavior. We progressively increased the complexity and richness of the set of features used to classify stimulus and choice. This identified which features were most informative for the task and which features drove the animal's decisions, respectively. We found that the cumulative number of contacts per trial for each whisker independently was informative about both stimulus and choice identity. Surprisingly, precise contact timing within a trial across the different whiskers was not an important feature in either case. Additionally, the exact angular position of each whisker during contacts was highly predictive of the stimulus identity. However, this same feature could not accurately predict the mice's choices on a trial-by-trial basis, suggesting that the mice's behavior was not fully optimal.
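The feature-comparison logic can be sketched as fitting a classifier on progressively richer feature sets and comparing held-out accuracy. The abstract does not name the classifier, so logistic regression here is an assumed stand-in. The data are synthetic: one feature stands in for per-whisker contact counts, and one is pure noise. Running the same procedure with the mouse's choice as the label, instead of the stimulus, probes which features drive behavior.

```python
import numpy as np


def fit_logistic(X, y, lr=0.1, n_iter=2000, l2=1e-3):
    """Logistic regression fitted by plain gradient descent; w[0] is the intercept."""
    Xb = np.hstack([np.ones((X.shape[0], 1)), X])
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-Xb @ w))
        w -= lr * (Xb.T @ (p - y) / len(y) + l2 * w)
    return w


def heldout_accuracy(X, y, train_frac=0.7, seed=0):
    """Train on a random split and report accuracy on the held-out trials."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(y))
    n_train = int(train_frac * len(y))
    train, test = idx[:n_train], idx[n_train:]
    w = fit_logistic(X[train], y[train])
    Xb = np.hstack([np.ones((len(test), 1)), X[test]])
    return float(np.mean((Xb @ w > 0) == y[test]))


# Synthetic trials: label 1 = convex, 0 = concave (made-up data)
rng = np.random.default_rng(0)
y = rng.integers(0, 2, 1000).astype(float)
contacts = 2.0 * y + rng.normal(0.0, 1.0, 1000)  # informative feature
timing = rng.normal(0.0, 1.0, 1000)               # uninformative feature
X = np.column_stack([contacts, timing])
for name, cols in [("timing", [1]), ("contacts", [0]), ("contacts+timing", [0, 1])]:
    print(name, heldout_accuracy(X[:, cols], y))
```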

In order to identify how barrel cortex contributes to transforming fine-scale representations of sensory events into high-level representations of object identity, we recorded neural populations in mice performing this task. We fit a generalized linear model (GLM) to each neuron’s firing rate as a function of both sensorimotor (e.g., whisker motion and touch) and cognitive (e.g., reward history) variables (Fig. 1c). Neurons responded strongly to whisker touch and, perhaps more surprisingly for a sensory area, to whisker motion. We also observed widespread and unexpected encoding of reward history and choice.
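A GLM of this kind can be sketched as a Poisson regression of spike counts on task covariates. This is a generic illustration under stated assumptions: a log link; three made-up covariates (touch, whisking, reward history) with invented true weights; and Newton's method as the fitting procedure. The abstract does not specify the link function, covariate set, or fitting method used.

```python
import numpy as np


def fit_poisson_glm(X, counts, n_iter=50, l2=1e-6):
    """Poisson GLM with log link, fitted by Newton's method; w[0] is the intercept.

    Maximizes sum(counts * eta - exp(eta)) with eta = w0 + X @ w, plus a tiny
    ridge term for numerical stability.
    """
    Xb = np.hstack([np.ones((X.shape[0], 1)), X])
    w = np.zeros(Xb.shape[1])
    w[0] = np.log(counts.mean())  # start from the mean rate
    for _ in range(n_iter):
        mu = np.exp(Xb @ w)
        grad = Xb.T @ (counts - mu) - l2 * w
        hess = Xb.T @ (Xb * mu[:, None]) + l2 * np.eye(len(w))
        w += np.linalg.solve(hess, grad)
    return w


# Synthetic neuron: spike counts driven by touch, whisking and reward history
rng = np.random.default_rng(1)
n = 5000
touch = rng.integers(0, 2, n)
whisk = rng.normal(0.0, 0.5, n)
reward = rng.integers(0, 2, n)
X = np.column_stack([touch, whisk, reward]).astype(float)
w_true = np.array([0.5, 1.0, 0.3, -0.4])
counts = rng.poisson(np.exp(w_true[0] + X @ w_true[1:]))
print(np.round(fit_poisson_glm(X, counts), 2), w_true)
```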

In conclusion, these results show that mice recognize objects by integrating sensory information gathered by active sampling across whiskers. Moreover, we find that the barrel cortex encodes a myriad of sensory and task related variables, like contacts, motor exploration, and reward and choice history, challenging the classical view of barrel cortex as a purely sensory area.

P27 Identifying the neural circuits underlying optomotor control in larval zebrafish

Winnie Lai1, John Holman1, Paul Pichler1, Daniel Saska2, Leon Lagnado2, Christopher Buckley3

1University of Sussex, Department of Informatics, Brighton, United Kingdom; 2University of Sussex, Department of Neuroscience, Brighton, United Kingdom; 3University of Sussex, Falmer, United Kingdom

Correspondence: Winnie Lai (wl291@sussex.ac.uk)

BMC Neuroscience 2019, 20(Suppl 1):P27

Most locomotor behaviours require the brain to instantaneously coordinate a continuous flow of sensory and motor information. In contrast to the conventional open-loop approach in neuroscience, it has been proposed that the brain is better idealised as a closed-loop controller that regulates dynamic motor actions. Studying brain function under this assumption remained largely unexplored until the recent emergence of imaging techniques such as selective plane illumination microscopy (SPIM). These techniques allow brain-wide neural recording at cellular resolution and high speed during active behaviours. Concurrently, the larval zebrafish is becoming a powerful model organism in neuroscience owing to its excellent optical accessibility and robust sensorimotor behaviours. Here, we apply engineering control theory to investigate the neurobiological basis of the optomotor response (OMR) in larval zebrafish. The OMR is a body reflex that stabilises optic flow in the presence of whole-field visual motion.

Our group recently developed a collection of OMR models based on variations of proportional-integral (PI) controllers. Whilst the proportional term allows a rapid response to disturbances, the integral term eliminates the steady-state error over time. We will begin by characterising OMR adaptation with respect to different speeds and heights, in both free-swimming and head-restrained environments. The data collected will be used to determine which model best captures zebrafish behaviour. Next, we will conduct functional imaging of fictively behaving animals under a SPIM that our group constructed. The aim is to examine how the control mechanism underpinning the OMR is implemented and distributed in the neural circuitry of larval zebrafish. This research project will involve evaluating and validating biologically plausible models inspired by control theory, as well as quantifying and analysing large behavioural and calcium imaging data sets. Understanding the dynamical nature of brain function for successful OMR control in larval zebrafish may provide unique insight into the neuropathology of diseases with impaired movement and/or offer potential design solutions for sophisticated prosthetics.
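The role of each controller term can be seen in a toy closed loop. This is a generic PI controller acting on an assumed first-order "fish" plant with made-up gains and time constant. It is not one of the group's OMR models. With proportional control alone, the steady state satisfies v = kp·e, so the residual error is e = s/(1 + kp). Adding the integral term removes that residual error.

```python
def simulate_omr(kp, ki, stim_speed=1.0, tau=0.2, dt=1e-3, t_end=20.0):
    """Toy closed-loop OMR: returns the residual optic-flow error at t_end.

    Error e = stimulus speed - fish speed; controller drive u = kp*e + ki*integral(e);
    the fish's speed v follows the drive with a first-order lag tau (a hypothetical plant).
    Integrated with forward Euler.
    """
    v = 0.0
    integral = 0.0
    for _ in range(int(t_end / dt)):
        e = stim_speed - v
        integral += e * dt
        u = kp * e + ki * integral
        v += dt * (u - v) / tau
    return stim_speed - v


print("P only :", simulate_omr(kp=2.0, ki=0.0))  # settles at 1 / (1 + kp)
print("PI     :", simulate_omr(kp=2.0, ki=2.0))  # integral term drives error toward 0
```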

P28 A novel learning mechanism for interval timing based on time cells of hippocampus

Sorinel Oprisan1, Tristan Aft1, Mona Buhusi2, Catalin Buhusi2

1College of Charleston, Department of Physics and Astronomy, Charleston, SC, United States of America; 2Utah State University, Department of Psychology, Logan, UT, United States of America

Correspondence: Sorinel Oprisan (oprisans@cofc.edu)

BMC Neuroscience 2019, 20(Suppl 1):P28

Time cells were recently discovered in the hippocampus; they appear to ramp up their firing when the subject reaches a specific temporal marker in a behavioral test. At the cellular level, the spread of the firing interval of each time cell, i.e. the width of its Gaussian-like activity, is proportional to the time of its peak activity. Such a linear relationship is well known at the behavioral level, where it is called the scalar property of interval timing.

We proposed a novel mathematical model for interval timing, starting from a population of hippocampal time cells and a dynamic learning rule. We hypothesized that during the reinforcement trials the subject learns the boundaries of the temporal duration. Subsequently, a population of time cells is recruited that covers the entire to-be-timed duration. At this stage, the population of time cells simply produces a uniform average time field, since all time cells contribute equally to the average. We further hypothesized that dopamine could modulate the activity of time cells during reinforcement trials by enhancing or depressing their activity. Our numerical simulations of the model agree with behavioral experiments.
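The model has three ingredients: Gaussian time fields whose width scales with peak time, a uniform average field before learning, and reinforcement-dependent modulation. All of the following are illustrative assumptions rather than the authors' equations:
- unit-area Gaussian fields with a coefficient of variation of 0.2;
- peaks spaced evenly over the timed range;
- a hypothetical dopamine-like gain that boosts cells whose peaks lie near the reinforced duration.

Each field has unit area and a width proportional to its peak. As a result, the equally weighted average is nearly flat away from the edges of the covered range.

```python
import numpy as np


def time_cell_rates(t, peaks, cv=0.2):
    """Gaussian time fields, width = cv * peak time (scalar property), unit area.

    Returns an array of shape (len(t), len(peaks)).
    """
    t = np.asarray(t, dtype=float)[:, None]
    mu = np.asarray(peaks, dtype=float)[None, :]
    sigma = cv * mu
    return np.exp(-0.5 * ((t - mu) / sigma) ** 2) / (sigma * np.sqrt(2 * np.pi))


def population_output(t, peaks, weights, cv=0.2):
    """Weighted average of time-cell activity."""
    weights = np.asarray(weights, dtype=float)
    return time_cell_rates(t, peaks, cv) @ weights / weights.sum()


def reinforced_weights(peaks, target, gain=5.0, spread=1.0):
    """Hypothetical dopamine-like modulation centred on the reinforced duration."""
    peaks = np.asarray(peaks, dtype=float)
    return 1.0 + gain * np.exp(-0.5 * ((peaks - target) / spread) ** 2)


peaks = np.arange(0.5, 30.0, 0.1)  # time cells tiling roughly 0.5 to 30 s
t = np.linspace(1.0, 25.0, 241)
before = population_output(t, peaks, np.ones_like(peaks))
after = population_output(t, peaks, reinforced_weights(peaks, target=10.0))
print("peak of learned output at", t[np.argmax(after)], "s")
```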

Acknowledgments: We acknowledge the support of R&D grant from the College of Charleston and support for a Palmetto Academy site from the South Carolina Space Grant Consortium.

