P5 Computational modeling of genetic contributions to excitability and neural coding in layer V pyramidal cells: applications to schizophrenia pathology

Tuomo Mäki-Marttunen1, Gaute Einevoll2, Anna Devor3, William A. Phillips4, Anders M. Dale3, Ole A. Andreassen5

1Simula Research Laboratory, Oslo, Norway; 2Norwegian University of Life Sciences, Faculty of Science and Technology, Aas, Norway; 3University of California, San Diego, Department of Neurosciences, La Jolla, United States of America; 4University of Stirling, Psychology, Faculty of Natural Sciences, Stirling, United Kingdom; 5University of Oslo, NORMENT, KG Jebsen Centre for Psychosis Research, Division of Mental Health and Addiction, Oslo, Norway

Correspondence: Tuomo Mäki-Marttunen (tuomo@simula.no)

BMC Neuroscience 2019, 20(Suppl 1):P5

Layer V pyramidal cells (L5PCs) extend their apical dendrites throughout the cortical thickness of the neocortex and integrate information from local and distant sources [1]. Alterations in the L5PC excitability and its ability to process context- and sensory drive-dependent inputs have been proposed to be a cause for hallucinations and other impairments of sensory perceptions related to mental disease [2]. In line with this hypothesis, genetic variants in voltage-gated ion channel-encoding genes and their altered expression have been associated with the risk of mental disorders [4]. In this work, we use computational models of L5PCs to systematically study the impact of small-effect variants on L5PC excitability and phenotypes associated with schizophrenia (SCZ).

An important aid in SCZ research is the set of biomarkers and endophenotypes that reflect the impaired neurophysiology and, unlike most of the symptoms of the disorder, are translatable to animal models. The deficit in prepulse inhibition (PPI) is one of the most robust endophenotypes. Although statistical genetics and genome-wide association studies (GWASs) have helped to make associations between gene variants and disease phenotypes, the mechanisms of PPI deficits and other circuit dysfunctions related to SCZ are incompletely understood at the cellular level. Following our previous work [3], we here study the effects of SCZ-associated genes on PPI in a single neuron.

In this work, we aim to bridge the knowledge gap between SCZ genetics and disease phenotypes by using biophysically detailed models to uncover the influence of SCZ-associated genes on integration of information in L5PCs. The L5PC population displays a wide diversity of morphological and electrophysiological properties, which has been overlooked in most modeling studies. To capture this variability, we use two separate models for thick-tufted L5PCs with partly overlapping ion-channel mechanisms and modes of input-output relationships. Furthermore, we generate alternative models that capture a continuum of firing properties between those attained by the two models. We show that most of the effects of SCZ-associated variants reported in [3] are robust across different types of L5PCs. Further, to generalize the results to in vivo-like conditions, we show that the effects of these model variants on single-L5PC excitability and integration of inputs persist when the model neuron is stimulated with noisy inputs. We also show that the model variants alter the way L5PCs code the input information both in terms of output action potentials and intracellular [Ca2+], which could contribute to both altered activity in the downstream neurons and synaptic long-term potentiation. Taken together, our results show a wide diversity in how SCZ-associated voltage-gated ion channel-encoding genes affect input-output relationships in L5PCs, and our framework helps to predict how these relationships are correlated with each other. These findings indicate that SCZ-associated variants may alter the interaction between perisomatic and apical dendritic regions.

References

- 1.Hay E, Hill S, Schürmann F, Markram H, Segev I. Models of neocortical layer 5b pyramidal cells capturing a wide range of dendritic and perisomatic active properties. PLoS Comput Biol 7, 7(2011): e1002107.

- 2.Larkum M. A cellular mechanism for cortical associations: an organizing principle for the cerebral cortex. Trends in Neurosciences 36, 3(2013): 141–151.

- 3.Mäki-Marttunen T, Halnes G, Devor A, et al. Functional effects of schizophrenia-linked genetic variants on intrinsic single-neuron excitability: a modeling study. Biol Psychiatry: Cogn Neurosci Neuroim 1, 1(2016): 49–59.

- 4.Ripke S, Neale BM, Corvin A, Walters JT, et al. Biological insights from 108 schizophrenia-associated genetic loci. Nature 511, 7510(2014): 421.

P6 Spatiotemporal dynamics underlying successful cognitive therapy for posttraumatic stress disorder

Marina Charquero1, Morten L Kringelbach1, Birgit Kleim2, Christian Ruff3, Steven C.R Williams4, Mark Woolrich5, Diego Vidaurre5, Anke Ehlers6

1University of Oxford, Department of Psychiatry, Oxford, United Kingdom; 2University of Zurich, Psychotherapy and Psychosomatics, Zurich, Switzerland; 3University of Zurich, Zurich Center for Neuroeconomics (ZNE), Department of Economics, Zurich, Switzerland; 4King’s College London, Neuroimaging Department, London, United Kingdom; 5University of Oxford, Wellcome Trust Centre for Integrative NeuroImaging, Oxford Centre for Human Brain Activity (OHBA), Oxford, United Kingdom; 6University of Oxford, Oxford Centre for Anxiety Disorders and Trauma, Department of Experimental Psychology, Oxford, United Kingdom

Correspondence: Marina Charquero (marina.charqueroballester@psych.ox.ac.uk)

BMC Neuroscience 2019, 20(Suppl 1):P6

Cognitive therapy for posttraumatic stress disorder (CT-PTSD) is one of the evidence-based psychological treatments. However, there are currently no fMRI studies investigating the temporal dynamics of brain network activation associated with successful cognitive therapy for PTSD. In this study, we used a newly developed data-driven approach to investigate the dynamics of brain function [1] underlying PTSD recovery with CT-PTSD [2].

Participants (43 PTSD, 30 remitted (14 pre & post CT-PTSD, 16 only post CT-PTSD), 8 waiting list and 15 healthy controls) underwent an fMRI protocol on a 1.5 T Siemens scanner using an echoplanar protocol (TR/TE 2400/40 ms). The task consisted of trauma-related or neutral pictures presented in a semi-randomised block design. Data were preprocessed using FSL and FIX and nonlinearly registered to MNI space. Mean BOLD timeseries were estimated using the Shen functional atlas [3]. A Hidden Markov Model [1] was applied to estimate 7 states, each defined by a certain pattern of activation. The total proportion of time spent in each network state (i.e., fractional occupancy) was computed separately for each of the two conditions: neutral and trauma-related pictures.
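The fractional occupancy described above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: it assumes the Hidden Markov Model has already been decoded into one state label per fMRI volume, and the state sequence, the condition mask, and the function name `fractional_occupancy` are all hypothetical.

```python
import numpy as np

def fractional_occupancy(states, n_states, mask=None):
    """Return the fraction of time points spent in each of n_states states.

    states : sequence of integer state labels, one per time point
    mask   : optional boolean array selecting the time points of one condition
    """
    states = np.asarray(states)
    if mask is not None:
        states = states[np.asarray(mask, dtype=bool)]
    counts = np.bincount(states, minlength=n_states)
    return counts / counts.sum()

# Hypothetical decoded state sequence (7 states, labelled 0..6)
states = np.array([0, 0, 3, 3, 3, 6, 6, 1, 1, 1])
# Hypothetical condition labels: True = trauma-related block, False = neutral
is_trauma = np.array([1, 1, 1, 1, 1, 0, 0, 0, 0, 0], dtype=bool)

fo_trauma = fractional_occupancy(states, 7, is_trauma)
fo_neutral = fractional_occupancy(states, 7, ~is_trauma)
```

Each returned vector sums to one, so occupancies can be compared directly between groups or conditions for a given state.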

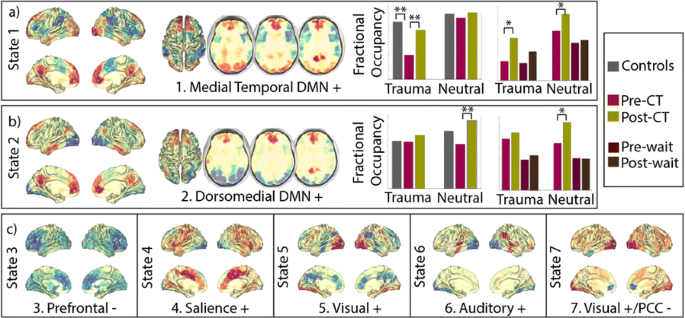

The states can be described as patterns of above- and below-average activation overlapping with functional (e.g., visual ventral stream) or resting-state networks (e.g., default mode network (DMN)). Results show that two DMN-related states, anatomically involving the medial temporal and the dorsomedial prefrontal DMN subsystems [4], had decreased fractional occupancies in PTSD in contrast to both healthy controls and remitted PTSD. No other states showed significant differences between groups. Importantly, there were no differences between PTSD before and after a waiting list condition (Fig 1). Furthermore, flashback qualities of intrusive memories were negatively related to the time spent in the medial temporal DMN as well as positively correlated with the time spent in ventral visual and salience states.

a, b Participants with PTSD spend less time visiting two DMN-related states in contrast to healthy controls and/or remitted PTSD, but no significant differences were found between visit 1 and visit 2 of participants assigned to the waiting list condition. c No significant differences were found between groups for any of the other states. *p < 0.05; **p < 0.05 after FDR correction

Recent work suggests that two subcomponents of the DMN, the medial temporal DMN and the dorsomedial prefrontal DMN, appear to be related to memory contextualisation and mentalizing about self and others, respectively [e.g. 4]. Our results show that the brains of participants with PTSD spend less time in states related to these two subcomponents before but not after successful therapy. This fits well with the cognitive theory suggested by [5], according to which PTSD results from: 1) disturbance of autobiographical memory characterised by poor contextualisation; and 2) excessively negative and threatening interpretations of one’s own and other people’s reactions to the trauma.

References

- 1.Vidaurre D, Abeysuriya R, Becker R, et al. Discovering dynamic brain networks from big data in rest and task. Neuroimage 2018 Oct 15;180:646–56.

- 2.Ehlers A, Clark DM, Hackmann A, McManus F, Fennell M. Cognitive therapy for post-traumatic stress disorder: development and evaluation. Behaviour research and therapy 2005 Apr 1;43(4):413–31.

- 3.Shen X, Tokoglu F, Papademetris X, Constable RT. Groupwise whole-brain parcellation from resting-state fMRI data for network node identification. Neuroimage 2013 Nov 15;82:403–15.

- 4.Andrews‐Hanna JR, Smallwood J, Spreng RN. The default network and self‐generated thought: component processes, dynamic control, and clinical relevance. Annals of the New York Academy of Sciences 2014 May 1;1316(1):29–52.

- 5.Ehlers A, Clark DM. A cognitive model of posttraumatic stress disorder. Behaviour research and therapy 2000 Apr 1;38(4):319–45.

P7 Experiments and modeling of NMDA plateau potentials in cortical pyramidal neurons

Peng Gao1, Joe Graham2, Wen-Liang Zhou1, Jinyoung Jang1, Sergio Angulo2, Salvador Dura-Bernal2, Michael Hines3, William W Lytton2, Srdjan Antic1

1University of Connecticut Health Center, Department of Neuroscience, Farmington, CT, United States of America; 2SUNY Downstate Medical Center, Department of Physiology and Pharmacology, Brooklyn, NY, United States of America; 3Yale University, Department of Neuroscience, CT, United States of America

Correspondence: Joe Graham (joe.w.graham@gmail.com)

BMC Neuroscience 2019, 20(Suppl 1):P7

Experiments have shown that application of glutamate near basal dendrites of cortical pyramidal neurons activates AMPA and NMDA receptors, which can result in dendritic plateau potentials: long-lasting depolarizations which spread into the soma, reducing the membrane time constant and bringing the cell closer to the spiking threshold. Using a morphologically detailed reconstruction of a Layer 5 pyramidal cell from prefrontal cortex, we developed a Hodgkin-Huxley compartmental model in NEURON. Synaptic AMPA/NMDA and extrasynaptic NMDA receptor models were placed on basal dendrites to explore plateau potentials. The properties of the model were tuned to match plateau potentials recorded by voltage-sensitive dye imaging in dendrites and whole-cell patch measurements in somata of prefrontal cortex pyramidal neurons from rat brain slices. The model was capable of reproducing experimental observations: a threshold for activation of the plateau, saturation of plateau amplitude with increasing glutamate application, depolarization of the soma by approximately 20 mV, and back-propagating action potential amplitude attenuation and time delay. The model predicted that the membrane time constant is shortened during the plateau, that synaptic inputs are more effective during the plateau due to both depolarization and time constant change, that plateau durations are longer when activated by more distal dendritic segments, and that plateau initiation location can be predicted from somatic plateau amplitude. Dendritic plateaus induced by strong basilar dendrite stimulation can increase population synchrony produced by weak coherent stimulation in apical dendrites. The morphologically detailed cell model was simplified while maintaining the observed plateau behavior and then utilized in cortical network models along with a previously published inhibitory interneuron model. The network model simulations showed increased synchrony between cells during induced dendritic plateaus.
These results support our hypothesis that dendritic plateaus provide a 200–500 ms time window during which a neuron is particularly excitable. At the network level, this predicts that sets of cells with simultaneous plateaus would provide an activated ensemble of responsive cells with increased firing. Synchronously spiking subsets of these cells would then create an embedded ensemble. This embedded ensemble would demonstrate a temporal code, at the same time as the activated (embedding) ensemble showed rate coding.

P8 Systematic automated validation of detailed models of hippocampal neurons against electrophysiological data

Sára Sáray1, Christian A Rössert2, Andrew Davison3, Eilif Muller2, Tamas Freund4, Szabolcs Kali4, Shailesh Appukuttan3

1Faculty of Information Technology and Bionics, Pázmány Péter Catholic University, Hungary; 2École Polytechnique Fédérale de Lausanne, Blue Brain Project, Lausanne, Switzerland; 3Centre National de la Recherche Scientifique/Université Paris-Sud, Paris-Saclay Institute of Neuroscience, Gif-sur-Yvette, France; 4Institute of Experimental Medicine, Hungarian Academy of Sciences, Budapest, Hungary

Correspondence: Sára Sáray (saraysari@gmail.com)

BMC Neuroscience 2019, 20(Suppl 1):P8

Developing biophysically and anatomically detailed data-driven computational models of the different neuronal cell types and running simulations on them is becoming an increasingly popular method in the neuroscience community to investigate the behaviour and to understand or predict the function of these neurons in the brain. Several computational and software tools have been developed to build detailed neuronal models, and there is an increasing body of experimental data from electrophysiological measurements that describe the behavior of real neurons and thus constrain the parameters of detailed neuronal models. As a result, there are now a large number of different models of many cell types available in the literature.

These published models were usually built to capture some important or interesting properties of the given neuron type, i.e., to reproduce the results of a few selected experiments, and it is often unknown, even by their developers, how they would behave in other situations, outside their original context. Nevertheless, for data-driven models to be predictive, it is important that they are able to generalize beyond their original scope. Furthermore, while investigating and developing different hippocampal CA1 pyramidal cell models, we found that tuning the model parameters so that the model reproduces a specific behaviour often significantly changes previously adjusted behaviours, which can easily go unnoticed by the modeler. This limits the reusability of these models for different scientific purposes. Therefore, it is important to test and evaluate the models under different conditions, and to explore the changes in model behaviour when its parameters are tuned.

To make it easier for the modeling community to explore the changes in model behavior during parameter tuning, and to systematically compare models of rat hippocampal CA1 pyramidal cells that were developed using different methods and for different purposes, we have developed an automated Python test suite called HippoUnit. HippoUnit is based on the SciUnit framework [1] which was developed for the validation of scientific models against experimental data. The tests of HippoUnit automatically run simulations on CA1 pyramidal cell models built in the NEURON simulator [2] that mimic the electrophysiological protocol from which the target experimental data were derived. Then the behavior of the model is evaluated and quantitatively compared to the experimental data using various feature-based error functions. Current validation tests cover somatic behavior and signal propagation and integration in apical dendrites of rat hippocampal CA1 pyramidal single cell models. The package is open source, available on GitHub (https://github.com/KaliLab/hippounit) and it has been integrated into the Validation Framework developed within the Human Brain Project.
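The idea of a feature-based error function can be sketched as follows. This is an illustrative assumption about the general approach, not HippoUnit's or SciUnit's actual API: each model feature is compared with the experimental mean in units of the experimental standard deviation. The function name `feature_errors`, the feature names, and all numbers are hypothetical.

```python
def feature_errors(model_features, exp_mean, exp_std):
    """Return, per feature, the absolute deviation of the model value from
    the experimental mean, in units of the experimental standard deviation.

    Features without experimental data or with non-positive std are skipped.
    """
    errors = {}
    for name, value in model_features.items():
        if name not in exp_mean or exp_std.get(name, 0.0) <= 0.0:
            continue
        errors[name] = abs(value - exp_mean[name]) / exp_std[name]
    return errors

# Hypothetical feature values extracted from a simulated somatic response
model = {"spike_count": 12.0, "AP_amplitude_mV": 80.0}
# Hypothetical experimental statistics for the same protocol
mean = {"spike_count": 10.0, "AP_amplitude_mV": 90.0}
std = {"spike_count": 2.0, "AP_amplitude_mV": 5.0}

errors = feature_errors(model, mean, std)
mean_error = sum(errors.values()) / len(errors)
```

Tracking such per-feature errors before and after each parameter change is one way to notice when tuning one behaviour degrades another.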

Here we present how we applied HippoUnit to test and compare the behavior of several different hippocampal CA1 pyramidal cell models available on ModelDB [4], against electrophysiological data available in the literature. By providing the software tools and examples on how to validate these models, we hope to encourage the modeling community to use more systematic testing during model development, in order to create neural models that generalize better, and make the process of model building more reproducible and transparent.

References

- 1.Omar C, Aldrich J, Gerkin RC. Collaborative infrastructure for test-driven scientific model validation. In Companion Proceedings of the 36th International Conference on Software Engineering 2014 May 31 (pp. 524–527). ACM.

- 2.Carnevale NT, Hines M. The NEURON Book. Cambridge, UK: Cambridge University Press; 2006.

- 3.Druckmann S, Banitt Y, Gidon AA, Schürmann F, Markram H, Segev I. A novel multiple objective optimization framework for constraining conductance-based neuron models by experimental data. Frontiers in neuroscience 2007 Oct 15;1:1.

- 4.McDougal RA, Morse TM, Carnevale T, et al. Twenty years of ModelDB and beyond: building essential modeling tools for the future of neuroscience. Journal of computational neuroscience 2017 Feb 1;42(1):1–10.

- 5.Appukuttan S, Garcia PE, Sharma BL, Sáray S, Káli S, Davison AP. Systematic Statistical Validation of Data-Driven Models in Neuroscience. Program No. 524.04. 2018 Neuroscience Meeting Planner San Diego, CA: Society for Neuroscience, 2018. Online.

P9 Systematic integration of experimental data in biologically realistic models of the mouse primary visual cortex: Insights and predictions

Yazan Billeh1, Binghuang Cai2, Sergey Gratiy1, Kael Dai1, Ramakrishnan Iyer1, Nathan Gouwens1, Reza Abbasi-Asl2, Xiaoxuan Jia3, Joshua Siegle1, Shawn Olsen1, Christof Koch1, Stefan Mihalas1, Anton Arkhipov1

1Allen Institute for Brain Science, Modelling, Analysis and Theory, Seattle, WA, United States of America; 2Allen Institute for Brain Science, Seattle, WA, United States of America; 3Allen Institute for Brain Science, Neural Coding, Seattle, WA, United States of America

Correspondence: Yazan Billeh (yazanb@alleninstitute.org)

BMC Neuroscience 2019, 20(Suppl 1):P9

Data collection efforts in neuroscience are growing at an unprecedented pace, providing a constantly widening stream of highly complex information about circuit architectures and neural activity patterns. We leverage these data collection efforts to develop data-driven, biologically realistic models of the mouse primary visual cortex at two levels of granularity. The first model uses biophysically detailed neuron models with morphological reconstructions fit to experimental data. The second uses Generalized Leaky Integrate and Fire point neuron models fit to the same experimental recordings. Both models were developed using the Brain Modeling ToolKit (BMTK) and will be made freely available upon publication. We demonstrate how in the process of building these models, specific predictions about structure-function relationships in the mouse visual cortex emerge. We discuss three such predictions regarding connectivity between excitatory and non-parvalbumin expressing interneurons; functional specialization of connections between excitatory neurons; and the impact of the cortical retinotopic map on neuronal properties and connections.

P10 Small-world networks enhance the inter-brain synchronization

Kentaro Suzuki1, Jihoon Park2, Yuji Kawai2, Minoru Asada2

1Osaka University, Graduate School of Engineering, Minoh City, Japan; 2Osaka University, Suita, Osaka, Japan

Correspondence: Kentaro Suzuki (kentaro.suzuki@ams.eng.osaka-u.ac.jp)

BMC Neuroscience 2019, 20(Suppl 1):P10

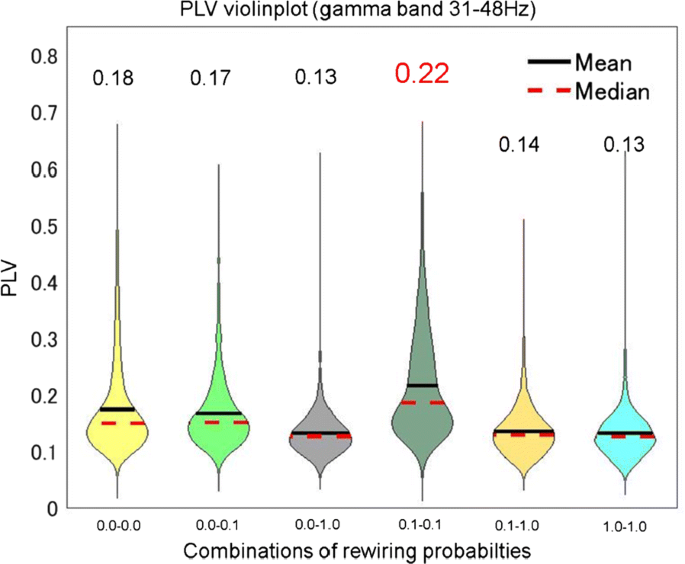

Many hyperscanning studies have shown that the activities of two brains often synchronize during social interaction (e.g., [1]). This synchronization occurs in various frequency bands and brain regions [1]. Further, Dumas et al. [2] constructed a two-brain model in which Kuramoto oscillators, as brain regions, are connected according to an anatomically realistic human connectome. They showed that the model with the realistic brain structure exhibits stronger inter-brain synchronization than the network with a randomly shuffled structure. However, it remains unclear what properties of the brain anatomical structure contribute to the inter-brain synchronization. Furthermore, since Kuramoto oscillators tend to converge to a specific frequency, the model cannot explain the synchronous activities in different frequency bands which were observed in the hyperscanning studies. In the current study, we propose a two-brain model based on small-world networks generated by the Watts–Strogatz method (WS method) [3] to systematically investigate the relationship between the small-world structure and the degree of inter-brain synchronization. The WS method can control the clustering coefficient and shortest path length without changing the number of connections by varying the rewiring probability p (p = 0.0: regular network, p = 0.1: small-world network, and p = 1.0: random network). We hypothesize that the small-world network, which has a high clustering coefficient and a short path length, is responsible for the inter-brain synchronization owing to its efficient information transmission. The model consists of two networks, each of which consists of 100 neuron groups composed of 1000 spiking neurons (800 excitatory and 200 inhibitory neurons). The neuron groups in a network are connected according to the WS method. Some groups in the two networks are directly connected as inter-brain connectivity, in the same manner as in the previous model [2].
We evaluated the inter-brain synchronization between neuron groups using the Phase Locking Value (PLV). Fig. 1 shows PLVs for each combination of networks with different rewiring probabilities in the gamma band (31–48 Hz). The mean PLV of the combination of small-world networks was higher than those of the other combinations.
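The effect of the rewiring probability p on clustering and path length can be sketched in plain Python. This is a minimal illustration of the Watts–Strogatz procedure, not the authors' code: n = 100 nodes matches the number of neuron groups in the text, whereas the degree k = 10, the random seed, and all function names are assumptions made for the example.

```python
import random
from collections import deque

def watts_strogatz(n, k, p, seed=None):
    """Return undirected edges (i, j), i < j, of a Watts-Strogatz network."""
    rng = random.Random(seed)
    edges = set()
    # Ring lattice: each node is linked to its k/2 nearest neighbours per side
    for i in range(n):
        for d in range(1, k // 2 + 1):
            j = (i + d) % n
            edges.add((min(i, j), max(i, j)))
    # Rewire each lattice edge with probability p, keeping the edge count
    for d in range(1, k // 2 + 1):
        for i in range(n):
            if rng.random() >= p:
                continue
            j = (i + d) % n
            targets = [m for m in range(n)
                       if m != i and (min(i, m), max(i, m)) not in edges]
            if targets:
                m = rng.choice(targets)
                edges.remove((min(i, j), max(i, j)))
                edges.add((min(i, m), max(i, m)))
    return edges

def adjacency(n, edges):
    adj = [set() for _ in range(n)]
    for i, j in edges:
        adj[i].add(j)
        adj[j].add(i)
    return adj

def mean_clustering(adj):
    """Average local clustering coefficient over all nodes."""
    total = 0.0
    for nbrs in adj:
        deg = len(nbrs)
        if deg < 2:
            continue
        links = sum(1 for a in nbrs for b in nbrs if a < b and b in adj[a])
        total += 2.0 * links / (deg * (deg - 1))
    return total / len(adj)

def mean_path_length(adj):
    """Average shortest path length over all connected node pairs (BFS)."""
    total, pairs = 0, 0
    for source in range(len(adj)):
        dist = {source: 0}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs

stats = {}
for p in (0.0, 0.1, 1.0):
    edge_set = watts_strogatz(100, 10, p, seed=1)
    adj = adjacency(100, edge_set)
    stats[p] = (len(edge_set), mean_clustering(adj), mean_path_length(adj))
```

In this sketch every network has the same number of edges, so differences in `stats` across p reflect only how the connections are arranged.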

PLVs between the networks in gamma band (31–48Hz), where a higher value indicates stronger synchronization. X-axis indicates the combinations of values of rewiring probability p (p = 0.0: regular network, p = 0.1: small-world network, and p = 1.0: random network). Black lines and red broken lines indicate the mean and the median of the PLVs, respectively
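The PLV between two signals can be computed as the magnitude of the time-averaged unit phasor of their phase difference. The sketch below is one common way to do this and is not taken from the authors' code: it assumes the signals have already been band-pass filtered to the band of interest, and it extracts instantaneous phase with an FFT-based analytic signal. The test signals and function names are hypothetical.

```python
import numpy as np

def analytic_phase(x):
    """Instantaneous phase of a real signal via its FFT-based analytic signal."""
    x = np.asarray(x, dtype=float)
    n = x.size
    spectrum = np.fft.fft(x)
    # Keep DC (and Nyquist), double positive frequencies, zero negative ones
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.angle(np.fft.ifft(spectrum * weights))

def phase_locking_value(x, y):
    """PLV in [0, 1]; 1 means a constant phase difference over time."""
    dphi = analytic_phase(x) - analytic_phase(y)
    return float(np.abs(np.mean(np.exp(1j * dphi))))

# Hypothetical test signals: 1 s sampled at 1 kHz
t = np.arange(1000) / 1000.0
sig_a = np.sin(2 * np.pi * 40 * t)           # 40 Hz oscillation
sig_b = np.sin(2 * np.pi * 40 * t - 0.8)     # same frequency, fixed lag
noise = np.random.default_rng(0).standard_normal(t.size)

plv_locked = phase_locking_value(sig_a, sig_b)
plv_noise = phase_locking_value(sig_a, noise)
```

A constant lag between the two oscillations gives a PLV close to 1 regardless of the size of the lag, while an unrelated signal gives a much lower value.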

The result implies that the small-world structure of the brain may be a key factor in inter-brain synchronization. As a future direction, we plan to impose an interaction task on the current model in place of the direct connections, in order to understand the relationship between social interaction and the structural properties of the brains.

Acknowledgments: This work was supported by JST CREST Grant Number JPMJCR17A4, and a project commissioned by the New Energy and Industrial Technology Development Organization (NEDO).

References

- 1.Dumas G, Nadel J, Soussignan R, Martinerie J, Garnero L. Inter-brain synchronization during social interaction. PloS one 2010 Aug 17;5(8):e12166.

- 2.Dumas G, Chavez M, Nadel J, Martinerie J. Anatomical connectivity influences both intra-and inter-brain synchronizations. PloS one 2012 May 10;7(5):e36414.

- 3.Watts DJ, Strogatz SH. Collective dynamics of ‘small-world’ networks. Nature 1998 Jun;393(6684):440.

P11 A potential mechanism for phase shifts in grid cells: leveraging place cell remapping to introduce grid shifts

Zachary Sheldon1, Ronald DiTullio2, Vijay Balasubramanian2

1University of Pennsylvania, Philadelphia, PA, United States of America; 2University of Pennsylvania, Computational Neuroscience Initiative, Philadelphia, United States of America

Correspondence: Zachary Sheldon (zsheldon@sas.upenn.edu)

BMC Neuroscience 2019, 20(Suppl 1):P11

Spatial navigation is a crucial part of survival, allowing an agent to effectively explore environments and obtain necessary resources. It has been theorized that this is achieved by learning an internal representation of space, known as a cognitive map. Multiple types of specialized neurons in the hippocampal formation and entorhinal cortex are believed to contribute to the formation of this cognitive map, particularly place cells and grid cells. These cells exhibit unique spatial firing fields that change in response to changes in environmental conditions. In particular, place cells display remapping of their spatial firing fields across different environments and grid cells display a phase shift in their spatial firing fields. If these cell types are indeed important for spatial navigation, we want to be able to explain the mechanism behind how the firing fields of these cell types change between environments. However, there are currently no suggested models or mechanisms for how this remapping and phase shift occur. Building on previous work using continuous attractor network (CAN) models of grid cells, we propose a CAN model that incorporates place cell input to grid cells. By allowing for Hebbian learning between place cells and grid cells associated with two distinct environments, our model is able to replicate the phase shifts between environments observed in grid cells. Our model posits the first potential mechanism by which the cognitive map changes between environments, and will hopefully inspire new research into this phenomenon and spatial navigation as a whole.

P12 Computational modeling of seizure spread on a cortical surface explains the theta-alpha electrographic pattern

Viktor Sip1, Viktor Jirsa1, Maxime Guye2, Fabrice Bartolomei3

1Aix-Marseille Université, Institute de Neurosciences, Marseille, France; 2Aix-Marseille Université, Centre de Résonance Magnétique Biologique et Médicale, Marseille, France; 3Assistance Publique - Hôpitaux de Marseille, Service de Neurophysiologie Clinique, Marseille, France

Correspondence: Viktor Sip (viktor.sip@univ-amu.fr)

BMC Neuroscience 2019, 20(Suppl 1):P12

Intracranial electroencephalography is a standard tool in clinical evaluation of patients with focal epilepsy. Various early electrographic seizure patterns differing in frequency, amplitude, and waveform of the oscillations are observed in intracranial recordings. The pattern most common in the areas of seizure propagation is the so-called theta-alpha activity (TAA), whose defining features are oscillations in the theta-alpha range and gradually increasing amplitude. A deeper understanding of the mechanism underlying the generation of the TAA pattern is however lacking. We show by means of numerical simulation that the features of the TAA pattern observed on an implanted depth electrode in a specific epileptic patient can be plausibly explained by the seizure propagation across an individual folded cortical surface.

In order to demonstrate this, we employ the following pipeline. First, the structural model of the brain is reconstructed from the T1-weighted images, and the positions of the electrode contacts are determined using the CT scan with implanted electrodes. Next, the patch of cortical surface in the vicinity of the electrode of interest is extracted. On this surface, the simulation of the seizure spread is performed using The Virtual Brain framework. A field version of the Epileptor model is employed as the mathematical model. The simulated source activity is then projected to the sensors using the dipole model, and this simulated stereo-electroencephalographic signal is compared with the recorded one.

The results show that the simulation on the patient-specific cortical surface gives a better fit between the recorded and simulated signals than the simulation on generic surrogate surfaces. Furthermore, the results indicate that the spectral content and dynamical features might differ in the source space of the cortical gray matter activity and among the intracranial sensors, questioning the previous approaches to classification of seizure onset patterns done in the sensor space, both based on spectral content and on dynamical features.

In conclusion, we demonstrate that the investigation of the seizure dynamics on the level of cortical surface can provide deeper insight into the large scale spatiotemporal organization of the seizure. At the same time, it highlights the need for a robust technique for inversion of the observed activity from sensor to source space that would take into account the complex geometry of the cortical sources and the position of the intracranial sensors.

References

- 1.Perucca P, Dubeau F, Gotman J. Intracranial electroencephalographic seizure-onset patterns: effect of underlying pathology. Brain 2014, 137, 183–196.

- 2.Sanz Leon P, Knock SA, Woodman MM, et al. The Virtual Brain: a simulator of primate brain network dynamics. Frontiers in Neuroinformatics 2013, Jun 11;7:10.

- 3.Jirsa V, Stacey W, Quilichini P, Ivanov A, Bernard C. On the nature of seizure dynamics. Brain 2014, 137, 2110–2113.

- 4.Proix T, Jirsa VK, Bartolomei F, Guye M, Truccolo W. Predicting the spatiotemporal diversity of seizure propagation and termination in human focal epilepsy. Nature Communications 2018, Mar 14;9(1):1088.

P13 Bistable firing patterns: one way to understand how epileptic seizures are triggered

Fernando Borges1, Paulo Protachevicz2, Ewandson Luiz Lameu3, Kelly Cristiane Iarosz4, Iberê Caldas4, Alexandre Kihara1, Antonio Marcos Batista5

1Federal University of ABC, Center for Mathematics, Computation, and Cognition., São Bernardo do Campo, Brazil; 2State University of Ponta Grossa, Graduate in Science Program, Ponta Grossa, Brazil; 3National Institute for Space Research (INPE), LAC, São José dos Campos, Brazil; 4University of São Paulo, Institute of Physics, São Paulo, Brazil; 5State University of Ponta Grossa, Program of Post-graduation in Science, Ponta Grossa, Brazil

Correspondence: Fernando Borges (fernandodasilvaborges@gmail.com)

BMC Neuroscience 2019, 20(Suppl 1):P13

Excessively high neural synchronisation has been associated with epileptic seizures, one of the most common brain diseases worldwide. Previous researchers have argued that epileptic and normal neuronal activity are supported by the same physiological structure. However, how neuronal systems transition between these regimes remains an open question. In this work, we study neuronal synchronisation in a random network where nodes are neurons with excitatory and inhibitory synapses, and neural activity for each node is provided by the adaptive exponential integrate-and-fire model. In this framework, we verify that a decrease in the influence of inhibition can generate synchronisation originating from a pattern of desynchronised spikes. The transition from desynchronous spikes to synchronous bursts of activity, induced by varying the synaptic coupling, emerges in a hysteresis loop due to bistability where abnormal (excessively high synchronous) regimes exist. We verify that, for parameters in the bistability regime, a square current pulse can trigger excessively high (abnormal) synchronisation, a process that can reproduce features of epileptic seizures. Then, we show that it is possible to suppress such abnormal synchronisation by applying a small-amplitude external current to less than 10% of the neurons in the network. Our results demonstrate that external electrical stimulation not only can trigger synchronous behaviour but, more importantly, can be used as a means to reduce abnormal synchronisation and thus to control or treat epileptic seizures effectively.
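The node dynamics named above, the adaptive exponential integrate-and-fire model, can be sketched for a single uncoupled neuron as follows. This is a minimal forward-Euler illustration, not the authors' network code: synaptic coupling is omitted, and all parameter values are commonly used illustrative values assumed for the example rather than taken from the abstract.

```python
import math

# Illustrative parameters (assumed; not given in the abstract)
C = 281.0        # membrane capacitance (pF)
G_L = 30.0       # leak conductance (nS)
E_L = -70.6      # leak reversal potential (mV)
V_T = -50.4      # exponential threshold (mV)
DELTA_T = 2.0    # slope factor (mV)
TAU_W = 144.0    # adaptation time constant (ms)
A = 4.0          # subthreshold adaptation conductance (nS)
B = 80.5         # spike-triggered adaptation increment (pA)
V_RESET = -70.6  # reset potential after a spike (mV)
V_PEAK = 0.0     # numerical spike cutoff (mV)

def simulate_adex(i_ext, t_max=500.0, dt=0.05):
    """Integrate one neuron under constant current i_ext (pA).

    Returns the list of spike times in ms.
    """
    v, w = E_L, 0.0
    spike_times = []
    for step in range(int(t_max / dt)):
        exp_term = G_L * DELTA_T * math.exp((v - V_T) / DELTA_T)
        dv = (-G_L * (v - E_L) + exp_term - w + i_ext) / C
        dw = (A * (v - E_L) - w) / TAU_W
        v += dt * dv
        w += dt * dw
        if v >= V_PEAK:
            # Spike: reset the voltage and increment the adaptation current
            spike_times.append(step * dt)
            v = V_RESET
            w += B
    return spike_times

spikes_rest = simulate_adex(0.0)       # no input current
spikes_driven = simulate_adex(1000.0)  # 1 nA constant current
```

With no input the neuron stays at rest, while a sufficiently strong constant current produces repeated spiking whose rate is shaped by the adaptation variable `w`.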

P14 Can sleep protect memories from catastrophic forgetting?

Oscar Gonzalez1, Yury Sokolov2, Giri Krishnan2, Maxim Bazhenov2

1University of California, San Diego, Neurosciences, La Jolla, CA, United States of America; 2University of California, San Diego, Medicine, La Jolla, United States of America

Correspondence: Oscar Gonzalez (o2gonzalez@ucsd.edu)

BMC Neuroscience 2019, 20(Suppl 1):P14

Previously encoded memories can be damaged by the encoding of new memories, especially when they are relevant to the new data and hence can be disrupted by new training, a phenomenon called “catastrophic forgetting”. Human and animal brains are capable of continual learning, allowing them to learn from past experience and to integrate newly acquired information with previously stored memories. A range of empirical data suggests an important role of sleep in the consolidation of recent memories and the protection of past knowledge from catastrophic forgetting. To explore potential mechanisms by which sleep can enable continual learning in neuronal networks, we developed a biophysically realistic thalamocortical network model in which we could train multiple memories with different degrees of interference. We found that in a wake-like state of the model, training of a “new” memory that overlaps with a previously stored “old” memory results in degradation of the old memory. Simulating an NREM sleep state immediately after new learning led to replay of both old and new memories; this protected the old memory from forgetting and ultimately enhanced both memories. The effect of sleep was similar to that of interleaved training of the old and new memories. The study revealed that the network slow-wave oscillatory activity during simulated deep sleep leads to a complex reorganization of the synaptic connectivity matrix that maximizes the separation between groups of synapses responsible for conflicting memories in the overlapping population of neurons. The study predicts that sleep may play a protective role against catastrophic forgetting and enable brain networks to undergo continual learning.

P15 Predicting the distribution of ion-channels in single neurons using compartmental models.

Roy Ben-Shalom1, Kyung Geun Kim2, Matthew Sit3, Henry Kyoung3, David Mao3, Kevin Bender1

1University of California, San Francisco, Neurology, San Francisco, CA, United States of America; 2University of California, Berkeley, EE/CS, Berkeley, CA, United States of America; 3University of California, Berkeley, Computer Science, Berkeley, United States of America

Correspondence: Roy Ben-Shalom (bens.roy@gmail.com)

BMC Neuroscience 2019, 20(Suppl 1):P15

Neuronal activity arises from the concerted activity of different ionic currents that are distributed in varying densities across different neuronal compartments, including the axon, soma, and dendrite. One major challenge in understanding neuronal excitability is determining precisely how different ionic currents are distributed in neurons. Biophysically detailed compartmental models allow us to distribute channels along the morphology of a neuron and simulate the resulting voltage responses. One can then use optimization algorithms that fit the model’s responses to neuronal recordings to predict the channel distributions for the model. The quality of predictions generated from such models depends critically on the biophysical accuracy of the model. Depending on how optimization is implemented, both mathematically and experimentally, one can arrive at several solutions that all fit empirical datasets reasonably well. However, to generate predictions that can be validated in experiments, we need to reach a unique solution that predicts the neuronal activity for a rich repertoire of experimental conditions. As we increase the size of an empirical dataset, the number of model solutions that can accurately account for these empirical observations decreases, theoretically arriving at one unique solution. Here we present a novel approach designed to identify this unique solution in a multi-compartmental model by fitting models to data obtained from a somatic neuronal recording and post-hoc morphological reconstruction. To validate this approach, we began by reverse engineering a classic model of a neocortical pyramidal cell developed by Mainen and Sejnowski [1], which contains 12 free parameters describing ion channels distributed across dendritic, somatic, and axonal compartments. First, we used the original values of these free parameters (i.e., the target values) to create a dataset of voltage responses that represents a ground truth.
Given this target dataset, our goal was to determine whether we could use optimization to arrive at similar parameter values when these values were unknown. We tested over 350 different stimulation protocols and 15 score functions, which compare the simulated data to the ground truth dataset, to determine which combinations of stimulation protocols and score functions create datasets that reliably constrain the model. We then checked how sensitive each parameter was to the different score functions. We found that five of the twelve parameters were sensitive to many different score functions. While these five could be constrained, the other seven parameters were sensitive only to a small set of score functions. We therefore divided the remaining optimization process into several steps, iteratively constraining subsets of the parameters that were sensitive to the same stimulation protocols and score functions. With this approach, we were able to constrain 11 of the 12 parameters of the model and recover their original values. This suggests that iterative, sensitivity analysis-based optimization could allow for more accurate fitting of model parameters to empirical data. We are currently testing whether similar methods can be used on more recently developed models with more free parameters. Ultimately, our goal is to apply this method to empirical recordings of neurons in acute slice and in vivo conditions.
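
The sensitivity step described above can be illustrated with a toy sketch. Everything in it is hypothetical: `simulate` is a three-parameter stand-in for a compartmental simulation, the three score functions are invented for illustration, and the perturbation size and threshold are arbitrary. It only shows the idea of perturbing each parameter around the ground truth, recording which score functions respond, and grouping parameters that respond to the same scores so that each group could be fitted in its own optimization stage.

```python
import math


def simulate(params, stim_amp=1.0, n_steps=100):
    """Hypothetical stand-in for a compartmental simulation (not a neuron model):
    a fast and a slow decaying component scaled by the stimulus amplitude."""
    return [stim_amp * (params["g_fast"] * math.exp(-t / 2.0)
                        + params["g_slow"] * math.exp(-t / params["tau"]))
            for t in range(n_steps)]


def _mean(xs):
    return sum(xs) / len(xs)


# Hypothetical score functions: each compares one feature of a trace with the target.
SCORES = {
    "peak": lambda trace, target: abs(max(trace) - max(target)),
    "mean": lambda trace, target: abs(_mean(trace) - _mean(target)),
    "late": lambda trace, target: abs(_mean(trace[50:]) - _mean(target[50:])),
}


def sensitivity_table(true_params, rel_step=0.1):
    """Perturb one parameter at a time by rel_step and record every score."""
    target = simulate(true_params)
    table = {}
    for name in true_params:
        perturbed = dict(true_params)
        perturbed[name] = true_params[name] * (1.0 + rel_step)
        trace = simulate(perturbed)
        table[name] = {s: fn(trace, target) for s, fn in SCORES.items()}
    return table


def group_by_sensitive_scores(table, threshold=1e-6):
    """Group parameters by the set of score functions that respond to them."""
    groups = {}
    for name, row in table.items():
        key = frozenset(s for s, value in row.items() if value > threshold)
        groups.setdefault(key, []).append(name)
    return groups


TRUE_PARAMS = {"g_fast": 1.0, "g_slow": 0.5, "tau": 20.0}
TABLE = sensitivity_table(TRUE_PARAMS)
GROUPS = group_by_sensitive_scores(TABLE)
```

In this toy setting, `g_slow` responds to all three scores, whereas `tau` leaves the peak untouched and `g_fast` barely affects the late window. A staged fit would constrain the broadly sensitive parameter first and then fit each remaining group using only the scores that respond to it.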

Reference

- 1.Mainen ZF, Sejnowski TJ. Influence of dendritic structure on firing pattern in model neocortical neurons. Nature 1996 Jul;382(6589):363.

P16 The contribution of dendritic spines to synaptic integration and plasticity in hippocampal pyramidal neurons

Luca Tar1, Sára Sáray2, Tamas Freund1, Szabolcs Kali1, Zsuzsanna Bengery2

1Institute of Experimental Medicine, Hungarian Academy of Sciences, Budapest, Hungary; 2Faculty of Information Technology and Bionics, Pázmány Péter Catholic University, Hungary

Correspondence: Luca Tar (luca.tar04@gmail.com)

BMC Neuroscience 2019, 20(Suppl 1):P16

The dendrites of cortical pyramidal cells bear spines which receive most of the excitatory synaptic input, act as separate electrical and biochemical compartments, and play important roles in signal integration and plasticity. In this study, we aimed to develop fully active models of hippocampal pyramidal neurons including spines to analyze the contributions of nonlinear processes in spines and dendrites to signal integration and synaptic plasticity. We also investigated ways to reduce the computational complexity of models of spiny neurons without altering their functional properties.

As a first step, we built anatomically and biophysically detailed models of CA1 pyramidal neurons without explicitly including dendritic spines. The models took into account multiple attributes of the cell determined by experiments, including the biophysics and distribution of ion channels, as well as the different electrophysiological characteristics of the soma and the dendrites. For systematic model development, we used two software tools developed in our lab: Optimizer [2] for automated parameter fitting, and the HippoUnit package, based on SciUnit [3] modules, to validate these results. We gradually increased the complexity of our model, mainly by adding further types of ion channels, and monitored the ability of the model to capture both optimized and non-optimized features and behaviors. This method allowed us to determine the minimal set of mechanisms required to replicate particular neuronal behaviors and resulted in a new model of CA1 pyramidal neurons whose characteristics match a wide range of experimental results.

Next, starting from a model which matched the available data on nonlinear dendritic integration [5], we added dendritic spines and moved excitatory synapses to the spine head. Simply adding the spines to the original model significantly changed the propagation of signals in dendrites, the properties of dendritic spikes and the overall characteristics of synaptic integration. This was due mainly to the effective change in membrane capacitance and the density of voltage-gated and leak conductances, and could be compensated by appropriate changes in these parameters. The resulting model showed the correct behavior for nonlinear dendritic integration while explicitly implementing all dendritic spines.

As the effects of spines on dendritic spikes and signal propagation could be largely explained by their effect on the membrane capacitance and conductance, we also developed a simplified version of the model where only those dendritic spines which received synaptic input were explicitly modeled, while the rest of the spines were implicitly taken into account by appropriate changes in the membrane properties. This model behaved very similarly to the one where all spines were explicitly modeled, but ran significantly faster. Our approach generalizes the F-factor method of [4] to active models.
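
The implicit treatment of spines can be sketched as a membrane-area correction. In the passive F-factor method, the specific capacitance and leak conductance of a dendritic section are multiplied by F, the ratio of total membrane area (shaft plus spines) to shaft area. The sketch below assumes, for illustration only, that voltage-gated conductance densities are scaled by the same factor. The function names, the cylinder geometry, and the example numbers are hypothetical and are not taken from the authors' models.

```python
import math


def f_factor(dend_length_um, dend_diam_um, spines_per_um, spine_area_um2):
    """Ratio of total membrane area (shaft + spines) to shaft area,
    treating the dendritic section as a cylinder."""
    shaft_area = math.pi * dend_diam_um * dend_length_um
    spine_area = spines_per_um * dend_length_um * spine_area_um2
    return (shaft_area + spine_area) / shaft_area


def fold_spines_into_shaft(densities, factor):
    """Scale per-area membrane quantities (capacitance, leak and, as an
    assumption of this sketch, voltage-gated conductances) by the F factor."""
    return {name: value * factor for name, value in densities.items()}


# Hypothetical example: 100 um section, 1 um diameter, 2 spines per um,
# 1 um^2 of membrane per spine; density values are placeholders.
F = f_factor(100.0, 1.0, 2.0, 1.0)
scaled = fold_spines_into_shaft({"cm": 1.0, "g_leak": 0.05, "g_na": 30.0}, F)
```

Spines that receive synaptic input would still be modeled explicitly, so the spine density passed to `f_factor` should count only the spines being replaced.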

Finally, our models, which show realistic electrical behavior in their dendrites and spines, allow us to examine Ca dynamics in dendritic spines in response to any combination of synaptic inputs and somatic action potentials. In combination with models of the critical molecular signaling pathways [1], this approach enables a comprehensive computational investigation of the mechanisms underlying activity-dependent synaptic plasticity in hippocampal pyramidal neurons.

References

- 1.Lindroos R, Dorst MC, Du K, et al. Basal Ganglia Neuromodulation Over Multiple Temporal and Structural Scales—Simulations of Direct Pathway MSNs Investigate the Fast Onset of Dopaminergic Effects and Predict the Role of Kv4.2. Frontiers in neural circuits 2018 Feb 6;12:3.

- 2.Friedrich P, Vella M, Gulyás AI, Freund TF, Káli S. A flexible, interactive software tool for fitting the parameters of neuronal models. Frontiers in neuroinformatics 2014 Jul 10;8:63.

- 3.Omar C, Aldrich J, Gerkin RC. Collaborative infrastructure for test-driven scientific model validation. In Companion Proceedings of the 36th International Conference on Software Engineering 2014 May 31 (pp. 524–527). ACM.

- 4.Rapp M, Yarom Y, Segev I. The impact of parallel fiber background activity on the cable properties of cerebellar Purkinje cells. Neural Computation 1992 Jul;4(4):518–33.

- 5.Losonczy A, Magee JC. Integrative properties of radial oblique dendrites in hippocampal CA1 pyramidal neurons. Neuron 2006 Apr 20;50(2):291–307.

P17 Modelling the dynamics of optogenetic stimulation at the whole-brain level

Giovanni Rabuffo1, Viktor Jirsa1, Francesca Melozzi1, Christophe Bernard1

1Aix-Marseille Université, Institut de Neurosciences des Systèmes, Marseille, France

Correspondence: Giovanni Rabuffo (giovanni.rabuffo@univ-amu.fr)

BMC Neuroscience 2019, 20(Suppl 1):P17

Deep brain stimulation is commonly used in different pathological conditions, such as Parkinson’s disease, epilepsy, and depression. However, little is known about how to stimulate the brain to produce a predictable and beneficial effect. In particular, the choice of the area to stimulate and the stimulation settings (amplitude, frequency, duration) remain empirical [1].

To approach these questions in a theoretical framework, an understanding of how stimulation propagates and influences the global brain dynamics is of primary importance.

A precise stimulation (activation/inactivation) of specific cell types in brain regions of interest can be obtained using optogenetic methods. Such stimulation acts both at short range, i.e., locally within the stimulated brain region, and on the large-scale network. Both effects are important for understanding the final outcome of the stimulation [2]. Therefore, a whole-brain approach is required.

In our work, we use The Virtual Brain platform to model an optogenetic stimulus and to study its global effects on a “virtual” mouse brain [3]. The parameters of our model can be tuned to account for the intensity of the stimulus, which is generally controllable in experiments.

The functional activity of the mouse brain model can be compared to experimental evidence from in vivo optogenetic fMRI (ofMRI) [4]. In silico exploration of the parameter space then allows us to fit the results of an ofMRI dataset and to make predictions about the outcome of a stimulus depending not only on its anatomical location and cell type, but also on the connection topology.

The theoretical study of the network dynamics emerging from such adjustable and traceable stimuli provides a step forward in understanding the causal relation between structural and functional connectomes.

References

- 1.Sironi VA. Origin and evolution of deep brain stimulation. Frontiers in integrative neuroscience 2011 Aug 18;5:42.

- 2.Fox MD, Buckner RL, Liu H, Chakravarty MM, Lozano AM, Pascual-Leone A. Resting-state networks link invasive and noninvasive brain stimulation across diverse psychiatric and neurological diseases. Proceedings of the National Academy of Sciences 2014 Oct 14;111(41):E4367–75.

- 3.Melozzi F, Woodman MM, Jirsa VK, Bernard C. The virtual mouse brain: a computational neuroinformatics platform to study whole mouse brain dynamics. eNeuro 2017 May;4(3).

- 4.Lee JH, Durand R, Gradinaru V, et al. Global and local fMRI signals driven by neurons defined optogenetically by type and wiring. Nature 2010 Jun;465(7299):788.

P18 Investigating the effect of the nanoscale architecture of astrocytic processes on the propagation of calcium signals

Audrey Denizot1, Misa Arizono2, Weiliang Chen3, Iain Hepburn3, Hédi Soula4, U. Valentin Nägerl2, Erik De Schutter3, Hugues Berry5

1INSA Lyon, Villeurbanne, France; 2Université de Bordeaux, Interdisciplinary Institute for Neuroscience, Bordeaux, France; 3Okinawa Institute of Science and Technology, Computational Neuroscience Unit, Onna-Son, Japan; 4University of Pierre and Marie Curie, INSERM UMRS 1138, Paris, France; 5INRIA, Lyon, France

Correspondence: Audrey Denizot (audrey.denizot@inria.fr)

BMC Neuroscience 2019, 20(Suppl 1):P18

According to the concept of the ‘tripartite synapse’ [1], information processing in the brain results from dynamic communication between pre- and postsynaptic neurons and astrocytes. Astrocyte excitability results from transients of cytosolic calcium concentration. Local calcium signals are observed both spontaneously and in response to neuronal activity within fine astrocyte ramifications [2, 3], which are in close contact with synapses [4]. These fine processes, which belong to the so-called spongiform structure of astrocytes, are too fine to be resolved spatially with conventional light microscopy [5, 6]. However, calcium dynamics in these structures can be investigated by computational modeling. In this study, we investigate the effect of the spatial properties of astrocytic processes on their calcium dynamics. Because of the small volumes and low numbers of molecules involved, we use our stochastic, spatially explicit, individual-based model of astrocytic calcium signals in 3D [7], implemented with STEPS [8]. We validate our model by reproducing key parameters of calcium signals that we recorded with high-resolution calcium imaging in organotypic brain slices. Our simulations reveal the importance of the spatial organization of the implicated molecular actors for calcium dynamics. In particular, we predict that different spatial organizations can lead to very different types of calcium signals, even for two processes expressing exactly the same calcium channels at the same densities. We also investigate the impact of process geometry at the nanoscale on calcium signal propagation. By modeling realistic astrocyte geometry at the nanoscale, this study thus proposes plausible mechanisms for information processing within astrocytes as well as for neuron-astrocyte communication.

References

- 1.Araque A, Parpura V, Sanzgiri RP, Haydon PG. Tripartite synapses: glia, the unacknowledged partner. Trends in neurosciences 1999 May 1;22(5):208–15.

- 2.Arizono M, et al. Structural Basis of Astrocytic Ca2+ Signals at Tripartite Synapses. Social Science Research Network 2018.

- 3.Bindocci E, Savtchouk I, Liaudet N, Becker D, Carriero G, Volterra A. Three-dimensional Ca2+ imaging advances understanding of astrocyte biology. Science 2017 May 19;356(6339):eaai8185.

- 4.Ventura R, Harris KM. Three-Dimensional Relationships between Hippocampal Synapses and Astrocytes. Journal of Neuroscience 1999 Aug 15;19(16):6897–906.

- 5.Heller JP, Rusakov DA. The nanoworld of the tripartite synapse: insights from super-resolution microscopy. Frontiers in Cellular Neuroscience 2017 Nov 24;11:374.

- 6.Panatier A, Arizono M, Nägerl UV. Dissecting tripartite synapses with STED microscopy. Phil Trans of the Royal Society B: Biological Sciences 2014 Oct 19;369(1654):20130597.

- 7.Denizot A, Arizono M, Nägerl UV, Soula H, Berry H. Simulation of calcium signaling in fine astrocytic processes: effect of spatial properties on spontaneous activity. bioRxiv 2019 Jan 1:567388.

- 8.Hepburn I, Chen W, Wils S, De Schutter E. STEPS: efficient simulation of stochastic reaction–diffusion models in realistic morphologies. BMC systems biology 2012 Dec;6(1):36.

P19 Neural mass modeling of the Ponto-Geniculo-Occipital wave and its neuromodulation

Kaidi Shao1, Nikos Logothetis1, Michel Besserve1

1MPI for Biological Cybernetics, Department for Physiology of Cognitive Processes, Tübingen, Germany

Correspondence: Kaidi Shao (kdshao@gmail.com)

BMC Neuroscience 2019, 20(Suppl 1):P19

As a prominent feature of Rapid Eye Movement (REM) sleep and of the transitional stage from Slow Wave Sleep (SWS) to REM sleep (the pre-REM stage), Ponto-Geniculo-Occipital (PGO) waves are hypothesized to play a critical role in dreaming and memory consolidation [1]. During pre-REM and REM stages, PGO waves appear in two subtypes differing in number, amplitude and frequency. However, the mechanisms underlying their generation and propagation across multiple brain structures, as well as their functions, remain largely unexplored. In particular, in contrast to the multiple phasic events occurring during non-REM sleep (slow waves, spindles and sharp-wave ripples), PGO waves have, to the best of our knowledge, not yet been modelled computationally.

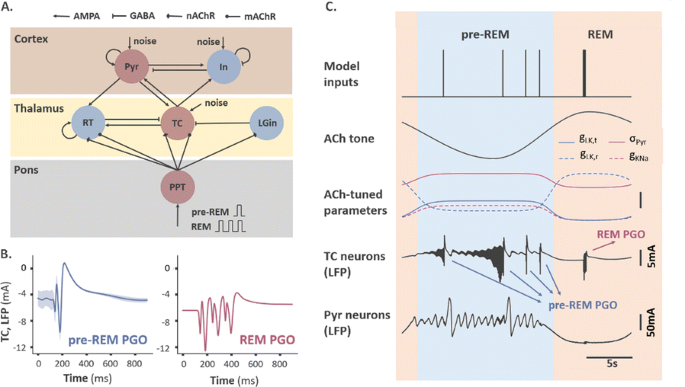

Based on experimental evidence in cats, the species in which PGO waves have been most extensively studied, we extended an existing thalamocortical model operating in the pre-REM stage [2] and constructed a ponto-thalamo-cortical neural mass model consisting of 6 rate-coded neuronal populations interconnected via biologically verified synapses (Fig. 1A). Transient PGO-related activity is elicited by a single or multiple brief pulses, modelling the input bursts that PGO-triggering neurons send to cholinergic neurons in the pedunculopontine tegmental nucleus (PPT). The effect of acetylcholine (ACh), the primary neuromodulator involved in the SWS-to-REM transition, was also modelled by tuning several critical parameters according to a tonically varying ACh concentration.

Fig. 1 a Model structure. TC: thalamocortical neurons. RT: reticular thalamic neurons. Pyr: pyramidal neurons. In: inhibitory neurons. LGin: thalamic interneurons. PPT: PGO-transferring neurons. b Typical waveforms of two subtypes of thalamic PGO waves. c Example traces of thalamic and cortical LFPs modulated by a cholinergic tone. Unscaled bar: 2 mV for red, 2 mS for dashed red, and 0.01 mS for others

Our simulations reproduce deflections in local field potentials (LFPs), as well as other electrophysiological characteristics, that are consistent in many respects with classical electrophysiological studies (Fig. 1B). For example, the duration of both subtypes of thalamic PGO waves matches that of PGO recordings, with a similar waveform comprising a sharp negative peak and a slower positive peak. The bursting durations of TC and RT neurons (10 ms and 25 ms, respectively) fall within the ranges reported in experimental papers (7–15 ms and 20–40 ms). Consistent with experimental findings, the simulated PGO waves block the spindle oscillations that occur during the pre-REM stage. By incorporating tonic cholinergic neuromodulation to mimic the SWS-to-REM transition, we were also able to replicate the electrophysiological differences between the two PGO subtypes with an ACh-tuned potassium leak conductance in TC and RT neurons (Fig. 1C).

These results help clarify the cellular mechanisms underlying thalamic PGO wave generation. For example, the nicotinic depolarization of LGin neurons, whose role was previously debated, is shown to be critical for the generation of the negative peak. The model elucidates how ACh modulates state transitions throughout the wake-sleep cycle, and how this modulation leads to a recently reported difference in the transient change of thalamic multi-unit activity. The simulated PGO waves also provide a biologically plausible framework for investigating how they take part in the multifaceted brain-wide network phenomena occurring during sleep and the enduring effects they may induce through plasticity.

References

- 1.Gott JA, Liley DT, Hobson JA. Towards a functional understanding of PGO waves. Frontiers in human Neuroscience 2017 Mar 3;11:89.

- 2.Costa MS, Weigenand A, Ngo HV, et al. A thalamocortical neural mass model of the EEG during NREM Sleep and its response to auditory stimulation. PLoS computational biology 2016 Sep 1;12(9):e1005022.
