P65 Hybrid modelling of vesicles with spatial reaction-diffusion processes in STEPS

Iain Hepburn, Sarah Nagasawa, Erik De Schutter

Okinawa Institute of Science and Technology, Computational Neuroscience Unit, Onna-son, Japan

Correspondence: Iain Hepburn (ihepburn@oist.jp)

BMC Neuroscience 2019, 20(Suppl 1):P65

Vesicles play a central role in many fundamental neuronal cellular processes. For example, pre-synaptic vesicles package, transport and release neurotransmitter, and post-synaptic AMPAR trafficking is controlled by the vesicular-endosomal pathway. Vesicle trafficking therefore underlies crucial brain features such as the dynamics and strength of chemical synapses, yet vesicles have so far received only limited attention in computational neuronal modelling.

The molecular simulation software STEPS (steps.sourceforge.net) applies reaction-diffusion kinetics on realistic tetrahedral mesh structures by tracking the molecular population within each tetrahedron and modelling local interactions stochastically. STEPS is usually applied to subcellular models such as synaptic plasticity pathways, and so is a natural choice for extension to vesicle processes. However, combining vesicle modelling with mesh-based reaction-diffusion modelling poses a number of challenges.
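To make "tracking the molecular population within each tetrahedron and modelling local interactions stochastically" concrete, here is a minimal Gillespie stochastic simulation algorithm (SSA) for one species diffusing between two adjacent voxels. It is a generic textbook sketch, not the STEPS API or its actual solver; the function name and the single hop rate `k_hop` (which in a real mesh would be derived from the diffusion coefficient and the tetrahedron geometry) are assumptions for illustration.

```python
import math
import random


def ssa_two_voxel_diffusion(n_a, n_b, k_hop, t_end, seed=0):
    """Gillespie SSA for molecules hopping between two adjacent voxels.

    Every molecule in voxel A jumps to voxel B with rate k_hop, and vice
    versa. Returns the molecule counts (n_a, n_b) at time t_end.
    """
    rng = random.Random(seed)
    t = 0.0
    while True:
        a_ab = k_hop * n_a  # total propensity of A -> B jumps
        a_ba = k_hop * n_b  # total propensity of B -> A jumps
        a_total = a_ab + a_ba
        if a_total == 0.0:
            break  # no molecules left, nothing can happen
        # waiting time to the next event is exponential with rate a_total
        t += -math.log(1.0 - rng.random()) / a_total
        if t > t_end:
            break
        # choose which event fires, proportionally to its propensity
        if rng.random() * a_total < a_ab:
            n_a, n_b = n_a - 1, n_b + 1
        else:
            n_a, n_b = n_a + 1, n_b - 1
    return n_a, n_b


print(ssa_two_voxel_diffusion(1000, 0, k_hop=1.0, t_end=10.0))
```

Starting with all molecules in one voxel, the counts relax towards an even split while fluctuating stochastically, which is the behaviour a voxel-based SSA reproduces at scale.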

The fundamental issue to solve is the interaction between spherical vesicle objects and the tetrahedral mesh. We apply an overlap library to track local vesicle-tetrahedron overlap, which allows us to modify local diffusion rates and, as the vesicles sweep through the mesh, to model interactions between vesicular surface proteins and molecules in the tetrahedral mesh such as cytosolic and plasma membrane proteins. These interactions open up many modelling applications, such as vesicle-endosome interaction, membrane docking, priming and neurotransmitter release, all solved to a high level of spatial and biochemical detail.
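The abstract does not say which overlap library is used. As a stand-in, the sketch below estimates the fraction of a spherical vesicle's volume lying inside one tetrahedron by Monte Carlo sampling; this is a simple swapped-in technique, not the exact geometric computation an overlap library would perform. Function names are hypothetical; multiplying the returned fraction by (4/3)πr³ gives an overlap volume.

```python
import math
import random


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _triple(u, v, w):
    """Scalar triple product u . (v x w), i.e. det of the 3x3 matrix [u v w]."""
    return (u[0] * (v[1] * w[2] - v[2] * w[1])
            - u[1] * (v[0] * w[2] - v[2] * w[0])
            + u[2] * (v[0] * w[1] - v[1] * w[0]))


def in_tetrahedron(p, tet):
    """True if point p lies inside (or on) the tetrahedron with vertices tet."""
    v0, v1, v2, v3 = tet
    e1, e2, e3 = _sub(v1, v0), _sub(v2, v0), _sub(v3, v0)
    det = _triple(e1, e2, e3)
    rel = _sub(p, v0)
    # barycentric coordinates of p via Cramer's rule
    b1 = _triple(rel, e2, e3) / det
    b2 = _triple(e1, rel, e3) / det
    b3 = _triple(e1, e2, rel) / det
    return b1 >= 0 and b2 >= 0 and b3 >= 0 and b1 + b2 + b3 <= 1


def overlap_fraction(center, radius, tet, n_samples=20000, seed=0):
    """Monte Carlo estimate of the fraction of a sphere's volume inside tet."""
    rng = random.Random(seed)
    hits = accepted = 0
    while accepted < n_samples:
        # rejection-sample a point uniformly inside the sphere
        x, y, z = (rng.uniform(-radius, radius) for _ in range(3))
        if x * x + y * y + z * z > radius * radius:
            continue
        accepted += 1
        if in_tetrahedron((center[0] + x, center[1] + y, center[2] + z), tet):
            hits += 1
    return hits / accepted


tet = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
frac = overlap_fraction((0.1, 0.1, 0.1), 0.15, tet)
print(frac, frac * 4.0 / 3.0 * math.pi * 0.15 ** 3)  # fraction, overlap volume
```

In a simulation such per-tetrahedron fractions would be recomputed only for tetrahedra near a vesicle that has moved, which is one natural place for the "local updates" mentioned below.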

This hybrid modelling, which includes dynamic vesicle processes and dependencies, makes it challenging to ensure accuracy whilst maintaining the efficiency of the software, and this is an important focus of our work. Where possible we validate the accuracy of our modelling processes, for example diffusion and binding rates. Optimisation efforts are ongoing, but we have had some successes, for example by applying local updates to the dynamic vesicle processes.
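One standard way to validate diffusion in 3D is to check that the mean squared displacement grows as MSD = 6Dt. The sketch below simulates free Brownian motion of point particles (for example, vesicle centres) with Gaussian steps and recovers D from the MSD. It is an illustrative check under the assumption of unobstructed diffusion, not the authors' validation code; the function name is hypothetical.

```python
import random


def estimate_diffusion_coefficient(d_true, dt, n_steps, n_particles, seed=0):
    """Simulate 3D Brownian motion and recover D from MSD = 6 D t."""
    rng = random.Random(seed)
    sigma = (2.0 * d_true * dt) ** 0.5  # per-axis step standard deviation
    total_sq = 0.0
    for _ in range(n_particles):
        x = y = z = 0.0
        for _ in range(n_steps):
            x += rng.gauss(0.0, sigma)
            y += rng.gauss(0.0, sigma)
            z += rng.gauss(0.0, sigma)
        total_sq += x * x + y * y + z * z  # squared displacement from origin
    msd = total_sq / n_particles
    return msd / (6.0 * n_steps * dt)


print(estimate_diffusion_coefficient(0.1, 1e-3, n_steps=100, n_particles=2000))
```

The same estimator can be applied to trajectories produced by the full simulator; a systematic deviation from the input D would then point to an error in how diffusion is discretised.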

We apply this new modelling technology to the post-synaptic AMPAR trafficking pathway. AMPA receptors undergo clathrin-dependent endocytosis and are trafficked to the endosome, where they are sorted either for degradation or for return to the membrane via recycling vesicles. Rab GTPases coordinate sorting through the endosomal system.

Our new hybrid modelling technology makes it possible to simulate this pathway in high spatial detail, as well as potentially other areas of cell biology in which vesicle trafficking and function play an important role. We hope that our current efforts and future additions open up new avenues of modelling research in neuroscience.

P66 A computational model of social motivation and effort

Ignasi Cos1, Gustavo Deco2

1Pompeu Fabra University, Center for Brain & Cognition, Barcelona, Spain; 2Universitat Pompeu Fabra, Barcelona, Spain

Correspondence: Ignasi Cos (ignasi.cos@gmail.com)

BMC Neuroscience 2019, 20(Suppl 1):P66

Although the relationship between motivation and behaviour has been extensively studied, the specifics of how motivation relates to movement, and of how effort is weighed when selecting specific movements, remain largely controversial. Indeed, moving towards valuable states implies investing a certain amount of effort and devising appropriate motor strategies. How are these principles modulated by social pressure?

To investigate whether and how motor parameters and decisions between movements are influenced by differentially induced motivated states, we used a decision-making paradigm in which healthy human participants chose between reaching movements under different conditions. Their goal was to accumulate reward by selecting one of two reaching movements of opposite motor cost and then performing the selected movement. Reward was contingent upon target arrival precision. All trials had a fixed duration to prevent participants from maximizing reward by minimizing temporal discounting.

We manipulated the participants’ motivated state via social pressure. Each experimental session comprised six blocks, during which subjects played either alone or accompanied by a simulated co-player. As part of this illusion, the amount of reward obtained by the participant and by their companion was reported at the end of each trial, and the ranking of the two players over the previous ten trials was shown briefly every nine trials. However, competition was never mentioned in the instructions, and any such mention by the participant was immediately rejected by the experimenter.

The results show that participants increased their precision alongside the skill of their co-actor, implying that they cared about their own performance. The main behavioural result was an increase in movement duration between baseline (playing alone) and every other condition (with any co-actor), and a modulation of amplitude as the skill of the co-actor became unattainable. To provide a quantitative account of the dynamics of social motivation, we developed a generative computational model of decision-making and motor control based on optimizing the trade-off between the benefits and costs associated with a movement. Its predictions show that this optimization depends on the motivational context in which the movements, and the choices between them, are performed. Although further research is needed to understand the specific intricacies of the relationship between motor control theory and motivated states, this suggests that the inter-relation between internal physiological dynamics and motor behaviour is more than a simple modulation of movement vigour.
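As a toy illustration of a benefit-cost trade-off (not the authors' model), assume that the probability of a precise arrival grows with movement duration T as 1 - exp(-T/τ), that a longer reach carries a linear cost c·T, and that social context acts only by scaling a subjective reward weight w. Under these assumptions the optimum is T* = τ ln(wR/(cτ)), so a larger w yields slower, more precise movements. All names and parameter values below are hypothetical.

```python
import math


def utility(duration, reward_weight, reward=1.0, tau=0.3, cost_rate=0.5):
    """Toy expected benefit minus cost of a reach lasting `duration` seconds."""
    precision = 1.0 - math.exp(-duration / tau)  # slower reaches land more precisely
    return reward_weight * reward * precision - cost_rate * duration  # assumed linear cost


def optimal_duration(reward_weight, grid_step=1e-3, t_max=3.0):
    """Grid search for the duration that maximizes the toy utility."""
    grid = [i * grid_step for i in range(1, int(t_max / grid_step) + 1)]
    return max(grid, key=lambda t: utility(t, reward_weight))


# "alone" vs a hypothetical socially boosted reward weight
print(optimal_duration(1.0), optimal_duration(2.0))
```

The grid search is used instead of the closed form so that the same code still works if the assumed precision or cost functions are replaced by less tractable ones.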

Acknowledgements: This project was funded by the Marie Sklodowska-Curie Research Grant Scheme (grant number IF-656262).

P67 Functional inference of real neural networks with artificial neural networks

Mohamed Bahdine1, Simon V. Hardy2, Patrick Desrosiers3

1Laval University, Quebec, Canada; 2Laval University, Département d’informatique et de génie logiciel, Quebec, Canada; 3Laval University, Département de physique, de génie physique et d’optique, Quebec, Canada

Correspondence: Mohamed Bahdine (mohamed.bahdine.1@ulaval.ca)

BMC Neuroscience 2019, 20(Suppl 1):P67

Fast extraction of connectomes from whole-brain functional imaging is computationally challenging. Despite the development of new algorithms that efficiently segment the neurons in calcium imaging data, the detection of individual synapses in whole-brain images remains intractable. Instead, connections between neurons are inferred using time series that describe the evolution of neurons’ activity. We compare classical methods of functional inference such as Granger Causality (GC) and Transfer Entropy (TE) to deep learning approaches such as Convolutional Neural Networks (CNN) and Long Short-Term Memory (LSTM).

Since ground truth is required to compare the methods, synthetic time series are generated from the C. elegans connectome using the leaky integrate-and-fire neuron model. Noise, inhibition and adaptation are added to the model to promote richer neuronal activity. To mimic typical calcium-imaging data, the time series are down-sampled from 10 kHz to 30 Hz and filtered with calcium and fluorescence dynamics. Additionally, we produce multiple simulations by varying brain and stimulation parameters to test each inference method on different types of brain activity.
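The imaging step can be sketched as follows: spike times from a 10 kHz simulation are turned into a calcium trace (each spike adds a fixed increment that decays exponentially), passed through a saturating fluorescence readout, and averaged into 30 Hz frames. The exponential calcium kinetics, the saturating readout and the parameters `tau_ca`, `kd` and `amp` are assumptions for illustration; the abstract does not specify the exact filters used.

```python
import math


def spikes_to_fluorescence(spike_times, duration, fs_sim=10_000, fs_img=30,
                           tau_ca=0.5, kd=1.0, amp=1.0):
    """Convert spike times (s) into a fluorescence trace sampled at fs_img Hz.

    Calcium: each spike adds `amp`, then decays with time constant tau_ca (s).
    Fluorescence: saturating readout F = Ca / (Ca + kd).
    Spikes falling in the same simulation bin are merged into one.
    """
    dt = 1.0 / fs_sim
    n_bins = int(round(duration * fs_sim))
    spike_bins = {int(round(t * fs_sim)) for t in spike_times}
    decay = math.exp(-dt / tau_ca)
    ca, fluo = 0.0, []
    for i in range(n_bins):
        ca *= decay
        if i in spike_bins:
            ca += amp
        fluo.append(ca / (ca + kd))
    # down-sample by averaging the high-rate trace within each imaging frame
    step = fs_sim / fs_img
    frames, k = [], 0
    while int(round((k + 1) * step)) <= n_bins:
        lo, hi = int(round(k * step)), int(round((k + 1) * step))
        frames.append(sum(fluo[lo:hi]) / (hi - lo))
        k += 1
    return frames


trace = spikes_to_fluorescence([0.10, 0.12, 0.50], duration=1.0)
print(len(trace), [round(f, 3) for f in trace[:6]])
```

Averaging within frames (rather than picking every n-th sample) mimics the integration of light over a camera exposure, and it is this slow, blurred signal that the inference methods then have to work with.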

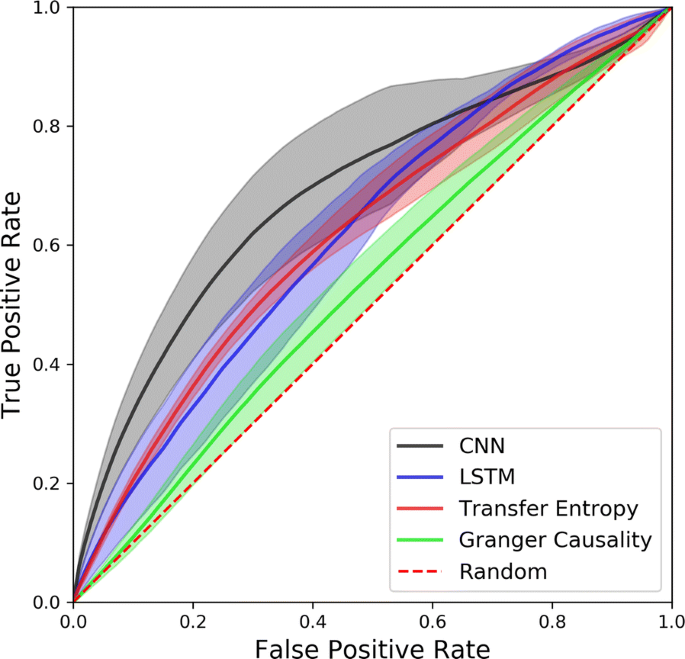

By comparing the mean ROC curves of each method (Fig. 1), we find that the CNN outperforms all other methods up to a false positive rate of 0.7, while GC has the weakest performance, being on average only slightly better than random guessing. TE performs better than LSTM at low false positive rates, but this ranking is inverted for false positive rates above 0.5. Although the CNN has the highest mean curve, its curve also has the largest width, meaning the CNN is the most variable and therefore least consistent inference method. The width of TE's mean ROC curve is significantly narrower than that of the other methods at low false positive rates and grows slowly as it meets the other curves. The choice of inference method therefore depends on one's tolerance to false positives and variability.
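For reference, an ROC curve for connectivity inference can be computed by ranking candidate connections by their inferred score and sweeping a threshold, counting true and false positives against the ground-truth connectome. The sketch below (hypothetical function names, plain Python) does this for one simulation; averaging such curves over simulations gives a mean curve and its spread.

```python
def roc_curve(scores, labels):
    """ROC points (fpr, tpr) from inferred edge scores and ground-truth labels.

    scores: inferred connection strength for each candidate neuron pair.
    labels: 1 if the pair is connected in the ground truth, else 0.
    Both classes must be present.
    """
    ranked = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    tp = fp = 0
    fpr, tpr = [0.0], [0.0]
    i = 0
    while i < len(ranked):
        # lower the threshold past all pairs sharing the current score
        score = ranked[i][0]
        while i < len(ranked) and ranked[i][0] == score:
            if ranked[i][1]:
                tp += 1
            else:
                fp += 1
            i += 1
        fpr.append(fp / n_neg)
        tpr.append(tp / n_pos)
    return fpr, tpr


def auc(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((fpr[k] - fpr[k - 1]) * (tpr[k] + tpr[k - 1]) / 2
               for k in range(1, len(fpr)))


fpr, tpr = roc_curve([0.9, 0.7, 0.6, 0.4, 0.2], [1, 0, 1, 0, 0])
print(auc(fpr, tpr))
```

Tied scores are processed as one threshold step, so a method that assigns the same score to every pair yields the diagonal (random-guess) line.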

P68 Stochastic axon systems: A conceptual framework

Skirmantas Janusonis1, Nils-Christian Detering2

1University of California, Santa Barbara, Department of Psychological and Brain Sciences, Santa Barbara, CA, United States of America; 2University of California, Santa Barbara, Department of Statistics and Applied Probability, Santa Barbara, CA, United States of America

Correspondence: Skirmantas Janusonis (janusonis@ucsb.edu)

BMC Neuroscience 2019, 20(Suppl 1):P68

The brain contains many “point-to-point” projections that originate in known anatomical locations, form distinct fascicles or tracts, and terminate in well-defined destination sites. This “deterministic brain” coexists with the “stochastic brain,” the axons of which disperse in meandering trajectories, creating meshworks in virtually all brain nuclei and laminae. The cell bodies of this system are typically located in the brainstem, as a component of the ascending reticular activating system (ARAS). ARAS axons (fibers) release serotonin, dopamine, norepinephrine, acetylcholine, and other neurotransmitters that regulate perception, cognition, and affective states. They also play major roles in human mental disorders (e.g., Major Depressive Disorder and Autism Spectrum Disorder).

Our interdisciplinary program [1, 2] seeks to understand at a rigorous level how the behavior of individual ARAS fibers determines their equilibrium densities in brain regions. These densities are commonly used in fundamental and applied neuroscience and can be thought to represent a macroscopic measure that has a strong spatial dependence (conceptually similar to temperature in thermodynamics). This measure provides essential information about the environment neuronal ensembles operate in, since ARAS fibers are present in virtually all brain regions and achieve extremely high densities in many of them.

A major focus of our research is the identification of the stochastic process that drives individual ARAS trajectories. Fundamentally, it bridges the stochastic paths of single fibers and the essentially deterministic fiber densities in the adult brain. Building upon state-of-the-art microscopic analyses and theoretical models, the project investigates whether the observed fiber densities are the result of self-organization, with no active guidance by other cells. Specifically, we hypothesize that the knowledge of the geometry of the brain, including the spatial distribution of physical “obstacles” in the brain parenchyma, provides key information that can be used to predict regional fiber densities.

In this presentation, we focus on serotonergic fibers. We demonstrate that a step-wise random walk, based on the von Mises-Fisher (directional) probability distribution, can provide a realistic and mathematically concise description of their trajectories in fixed tissue. Based on the trajectories of serotonergic fibers in 3D-confocal microscopy images, we present estimates of the concentration parameter (κ) in several brain regions with different fiber densities. These estimates are then used to produce computational simulations that are consistent with experimental results. We also propose that other stochastic models, such as the superdiffusion regime of the Fractional Brownian Motion (FBM), may lead to a biologically accurate and analytically rich description of ARAS fibers, including their temporal dynamics.
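A step-wise von Mises-Fisher walk can be sketched as below. Each new step direction is drawn from a 3D von Mises-Fisher distribution centred on the previous direction, using the closed-form inverse-CDF sampler for the cosine that exists on the 2-sphere; larger κ gives straighter fibers. Centring each step on the previous direction, the unit step length and the function names are assumptions for illustration, not necessarily the exact construction used in the authors' work.

```python
import math
import random


def _normalize(v):
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / n, v[1] / n, v[2] / n)


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def sample_vmf_3d(mu, kappa, rng):
    """Draw a unit vector from the von Mises-Fisher distribution on S^2 (kappa > 0)."""
    u = 1.0 - rng.random()  # uniform on (0, 1], avoids log(0)
    # inverse-CDF sample of the cosine w = <x, mu>, closed form in 3D
    w = 1.0 + math.log(u + (1.0 - u) * math.exp(-2.0 * kappa)) / kappa
    # orthonormal basis (e1, e2) perpendicular to the unit vector mu
    a = (1.0, 0.0, 0.0) if abs(mu[0]) < 0.9 else (0.0, 1.0, 0.0)
    dot = sum(a[i] * mu[i] for i in range(3))
    e1 = _normalize(tuple(a[i] - dot * mu[i] for i in range(3)))
    e2 = _cross(mu, e1)
    phi = rng.uniform(0.0, 2.0 * math.pi)  # uniform angle around mu
    s = math.sqrt(max(0.0, 1.0 - w * w))
    return tuple(w * mu[i] + s * (math.cos(phi) * e1[i] + math.sin(phi) * e2[i])
                 for i in range(3))


def vmf_walk(n_steps, kappa, step_length=1.0, seed=0):
    """Fiber trajectory: each step direction ~ vMF centred on the previous one."""
    rng = random.Random(seed)
    pos = (0.0, 0.0, 0.0)
    direction = (0.0, 0.0, 1.0)
    path = [pos]
    for _ in range(n_steps):
        direction = _normalize(sample_vmf_3d(direction, kappa, rng))
        pos = tuple(pos[i] + step_length * direction[i] for i in range(3))
        path.append(pos)
    return path


print(vmf_walk(5, kappa=10.0)[-1])
```

Estimating κ from traced fibers then amounts to fitting this distribution to the angles between consecutive segments; regions with different κ produce walks of visibly different tortuosity.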

Acknowledgements: This research is funded by the National Science Foundation (NSF 1822517), the National Institute of Mental Health (R21 MH117488), and the California NanoSystems Institute (Challenge-Program Development Grant).

References

- 1.Janusonis S, Detering N. A stochastic approach to serotonergic fibers in mental disorders. Biochimie 2018, in press.

- 2.Janusonis S, Mays KC, Hingorani MT. Serotonergic fibers as 3D-walks. ACS Chem. Neurosci. 2019, in press.

P69 Replicating the mouse visual cortex using Neuromorphic hardware

Srijanie Dey, Alexander Dimitrov

Washington State University Vancouver, Mathematics and Statistics, Vancouver, WA, United States of America

Correspondence: Srijanie Dey (srijanie.dey@wsu.edu)

BMC Neuroscience 2019, 20(Suppl 1):P69

The primary visual cortex is one of the most complex parts of the brain, offering significant modeling challenges. With the ongoing development of neuromorphic hardware, simulation of biologically realistic neuronal networks seems viable. According to [1], Generalized Leaky Integrate-and-Fire models (GLIFs) are capable of reproducing cellular data under standardized physiological conditions. The linearity of the GLIFs' dynamical equations also works to our advantage. In ongoing work, we have proposed implementing five variants of the GLIF model [1], incorporating different phenomenological mechanisms, on Intel’s latest neuromorphic hardware, Loihi. Because Loihi's architecture supports hierarchical connectivity, dendritic compartments and synaptic delays, its current LIF hardware abstraction is a good match to the GLIF models. Nevertheless, precise spike detection and Loihi's fixed-point arithmetic pose challenges. We use the experimental data and the classical simulation of GLIF as references for the neuromorphic implementation. Following the benchmark in [2], we use various statistical measures at different levels of the network to validate and verify the neuromorphic network implementation. In addition, spike-time-based variance among the models and within the data is compared to further support the network’s validity [1, 3]. Based on our preliminary results, namely the implementation of the first GLIF model followed by a full-fledged network on the Loihi architecture, we believe that a successful implementation of a network of different GLIF models is highly likely to lay the foundation for replicating the complete primary visual cortex.
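To illustrate why fixed-point arithmetic complicates matching a floating-point reference, the sketch below runs the same discrete-time LIF neuron (constant input, reset to zero) in floating point and in integer fixed point, where the decay factor, input and threshold are quantised with `frac_bits` fractional bits and the decay is applied by an integer multiply and right shift. This is a generic illustration; it does not use Loihi's programming interface, and the 12-bit default is an assumption rather than a statement about Loihi's internal number formats.

```python
def lif_float(current, tau=20.0, dt=1.0, v_th=1.0, n_steps=1000):
    """Floating-point discrete-time LIF with reset to zero; returns spike steps."""
    decay = 1.0 - dt / tau
    v, spikes = 0.0, []
    for t in range(n_steps):
        v = v * decay + current * dt / tau
        if v >= v_th:
            spikes.append(t)
            v = 0.0
    return spikes


def lif_fixed(current, tau=20.0, dt=1.0, v_th=1.0, n_steps=1000, frac_bits=12):
    """Same model in integer fixed point: all values scaled by 2**frac_bits."""
    scale = 1 << frac_bits
    decay_q = round((1.0 - dt / tau) * scale)  # quantised decay factor
    inp_q = round(current * dt / tau * scale)  # quantised input per step
    th_q = round(v_th * scale)                 # quantised threshold
    v, spikes = 0, []
    for t in range(n_steps):
        v = (v * decay_q) >> frac_bits  # integer multiply, truncating shift
        v += inp_q
        if v >= th_q:
            spikes.append(t)
            v = 0
    return spikes


ref, quant = lif_float(1.5), lif_fixed(1.5)
print(len(ref), len(quant), ref[:3], quant[:3])
```

Lowering `frac_bits` coarsens the quantisation and can shift spike times relative to the floating-point reference, which is why spike-time statistics rather than exact traces are a sensible basis for comparison.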

References

- 1.Teeter C, Iyer R, Menon V, et al. Generalized leaky integrate-and-fire models classify multiple neuron types. Nature Communications 2018 Feb 19;9(1):709.

- 2.Trensch G, Gutzen R, Blundell I, Denker M, Morrison A. Rigorous neural network simulations: a model substantiation methodology for increasing the correctness of simulation results in the absence of experimental validation data. Frontiers in Neuroinformatics 2018;12.

- 3.Paninski L, Simoncelli EP, Pillow JW. Maximum likelihood estimation of a stochastic integrate-and-fire neural model. Advances in Neural Information Processing Systems 2004; pp 1311–1318.

P70 Understanding modulatory effects on cortical circuits through subpopulation coding

Matthew Getz1, Chengcheng Huang2, Brent Doiron2

1University of Pittsburgh, Neuroscience, Pittsburgh, PA, United States of America; 2University of Pittsburgh, Mathematics, Pittsburgh, United States of America

Correspondence: Matthew Getz (mpg39@pitt.edu)

BMC Neuroscience 2019, 20(Suppl 1):P70

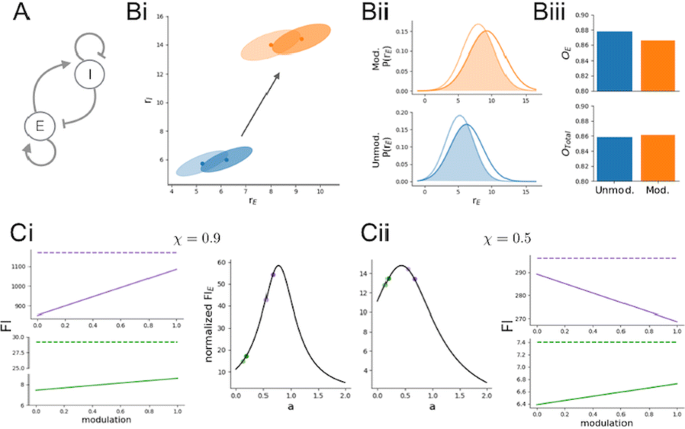

Information-theoretic approaches have shed light on the brain’s ability to efficiently propagate information along the cortical hierarchy, and have also exposed limitations in this process. One common measure of coding capacity, linear Fisher Information (FI), has also been used to study the neural code within a given cortical region. In particular, we recently used this approach to study the effects of an attention-like modulation on a cortical population model [1]. Previous studies have been largely agnostic about the class of neuron that encodes a particular sensory variable, assuming little more than its stimulus tuning properties. While it is widely accepted that local cortical dynamics involve an interplay between excitatory and inhibitory neurons, a large number of anatomical studies show that excitatory neurons are the dominant projection neurons from one cortical area to the next. This suggests that, to improve downstream readout of neural codes, the goal of top-down modulation may be not to maximize the FI across the full excitatory and inhibitory network but rather to modulate the information carried only within the excitatory population, denoted FI-E [1]. In this study we explore this hypothesis using a combined numerical and analytical study of population coding in simplified model cortical networks.
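Linear Fisher information for a population with tuning-curve derivative f′ and noise covariance Σ is FI = f′ᵀ Σ⁻¹ f′. In the simplest case, where the E and I populations are each summarised by a single rate, FI-E reduces to the excitatory term alone, f′_E² / Σ_EE. The sketch below computes both for a 2-D (E, I) example with made-up numbers; it illustrates the definitions only and is not the authors' network model. Because discarding the inhibitory rate cannot add information, FI ≥ FI-E always holds.

```python
def linear_fisher_info_2d(df, cov):
    """Linear Fisher information f'^T Sigma^{-1} f' for a 2-D (E, I) population."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    # explicit inverse of the 2x2 noise covariance matrix
    inv = ((d / det, -b / det), (-c / det, a / det))
    return sum(df[i] * inv[i][j] * df[j] for i in range(2) for j in range(2))


def linear_fisher_info_e(df, cov):
    """Information available to a readout of the excitatory rate alone (FI-E)."""
    return df[0] ** 2 / cov[0][0]


df = (1.0, 0.8)                   # hypothetical d(rate)/d(stimulus) for E and I
cov = ((1.0, 0.6), (0.6, 1.0))    # hypothetical E/I noise covariance
print(linear_fisher_info_2d(df, cov), linear_fisher_info_e(df, cov))
```

A top-down modulation enters this picture by changing f′ and Σ; FI and FI-E can then respond differently, because FI-E depends only on the E-row quantities while FI also depends on the E/I noise correlation.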

We first study this effect in a recurrently coupled excitatory (E)/inhibitory (I) population pair coding for a scalar stimulus variable (Fig. 1, A). We demonstrate that while the FI of the full E/I network does not change with a top-down modulation (Fig. 1, C; dashed colored lines), FI-E can nevertheless increase (Fig. 1, C; solid colored lines). We derive intuition for this key difference between FI and FI-E by considering the combined influence of input correlation and recurrent connectivity, captured by the ratio a in the middle plots of Fig. 1, C. There, light points show the ratio a before modulation and dark points after modulation; green and purple correspond to two different sets of network parameters. Fig. 1, Ci corresponds to an input correlation of 0.9, and Cii to an input correlation of 0.5.

Fig. 1. (a) Network schematic. (b) (i, ii) Distribution of firing rates for E and I before (blue) and after (orange) modulation at a given contrast c (light ellipse) and c+dc (dark ellipse), where dc is a small perturbation in the input. (iii) Calculated overlap of the rate distributions for E and E/I (Total). (c) The effects of modulation depend on the input correlations and recurrent connectivity.

Finally, we will extend these ideas to a distributed population code by considering a framework with multiple E/I populations encoding a periodic stimulus variable [2]. Overall, our results provide a new framework for understanding how top-down modulation may exert a positive effect on cortical population codes.

References

- 1.Kanashiro T, Ocker GK, Cohen MR, Doiron B. Attentional modulation of neuronal variability in circuit models of cortex. eLife 2017 Jun 7; 6:e23978.

- 2.Getz MP, Huang C, Dunworth J, Cohen MR, Doiron B. Attentional modulation of neural covariability in a distributed circuit-based population model. Cosyne Abstracts 2018, Denver, CO, USA.

P71 Stimulus integration and categorization with bump attractor dynamics

Jose M. Esnaola-Acebes1, Alex Roxin1, Klaus Wimmer1, Bharath C. Talluri2, Tobias Donner2,3

1Centre de Recerca Matemàtica, Computational Neuroscience group, Barcelona, Spain; 2University Medical Center Hamburg-Eppendorf, Department of Neurophysiology & Pathophysiology, Hamburg, Germany; 3University of Amsterdam, Department of Psychology, Amsterdam, The Netherlands

Correspondence: Jose M. Esnaola-Acebes (jmesnaola@crm.cat)

BMC Neuroscience 2019, 20(Suppl 1):P71

Perceptual decision making often involves making categorical judgments based on estimates of continuous stimulus features. It has recently been shown that committing to a categorical choice biases a subsequent report of the stimulus estimate by selectively increasing the weighting of choice-consistent evidence [1]. This phenomenon, known as confirmation bias, commonly results in suboptimal performance in people’s perceptual decisions. The neural mechanisms underlying this phenomenon are still poorly understood.

Here we develop a computational network model that can integrate a continuous stimulus feature, such as motion direction, and can also account for a subsequent categorical choice. The model, a ring attractor network, represents the estimate of the integrated stimulus direction by the phase of an activity bump. A categorical choice can then be made by applying a decision signal at the end of the trial that forces the activity bump to move to one of two opposite positions. We reduced the network dynamics to a two-dimensional system of equations for the amplitude and phase of the bump, which allows evidence integration to be studied analytically. The model can account for qualitatively distinct decision behaviors, depending on the strength of the sensory stimuli relative to the amplitude of the bump attractor. When sensory inputs dominate the intrinsic network dynamics, later parts of the stimulus have a greater impact on the final phase and the categorical choice than earlier parts (“recency” regime). Conversely, when the internal dynamics are stronger, the temporal weighting of stimulus information is uniform. The corresponding psychophysical kernels are consistent with experimental observations [2]. We then simulated how stimulus estimation is affected by an intermittent categorical choice [1] by applying the decision signal after the first half of the stimulus. We found that this biases the resulting stimulus estimate at the end of the trial towards larger values for stimuli consistent with the categorical choice and towards smaller values for inconsistent stimuli, resembling the experimentally observed confirmation bias.
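The two weighting regimes can be illustrated with a deliberately simplified phase equation, dψ/dt = g·sin(θ(t) - ψ), where ψ is the bump phase, θ(t) the stimulus direction and g stands in for stimulus strength relative to bump amplitude. This is an assumed caricature, not the authors' reduced amplitude-phase equations: with small g the phase change is approximately the integral of the input, so every sample counts about equally, whereas with large g the phase relaxes to the most recent stimulus, producing recency. The function name and parameters are hypothetical.

```python
import math


def final_phase(stim_dirs, gain, dt=0.01, steps_per_sample=10):
    """Euler-integrate the toy phase equation d(psi)/dt = gain * sin(theta - psi).

    stim_dirs: sequence of stimulus directions (rad), each held for
    steps_per_sample time steps. `gain` stands for stimulus strength relative
    to bump amplitude (an assumed reduction, not the authors' equations).
    """
    psi = 0.0
    for theta in stim_dirs:
        for _ in range(steps_per_sample):
            psi += dt * gain * math.sin(theta - psi)
    return psi


stim = [0.5] * 10 + [-0.5] * 10  # first half points one way, second half the other
print(final_phase(stim, gain=0.01), final_phase(stim, gain=20.0))
```

With the weak gain the two halves cancel and the final phase stays near zero (uniform weighting); with the strong gain the final phase sits at the direction of the second half (recency).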

Our work suggests that bump attractor dynamics are a potential underlying mechanism of stimulus integration and perceptual categorization.

Acknowledgments: Funded by the Spanish Ministry of Science, Innovation and Universities and the European Regional Development Fund (grants RYC-2015-17236, BFU2017-86026-R and MTM2015-71509-C2-1-R) and by the Generalitat de Catalunya (grant AGAUR 2017 SGR 1565).

References

- 1.Talluri BC, Urai AE, Tsetsos K, Usher M, Donner TH. Confirmation bias through selective overweighting of choice-consistent evidence. Current Biology 2018 Oct 8;28(19):3128–35.

- 2.Wyart V, De Gardelle V, Scholl J, Summerfield C. Rhythmic fluctuations in evidence accumulation during decision making in the human brain. Neuron 2012 Nov 21;76(4):847–58.

P72 Topological phase transitions in functional brain networks

Fernando Santos1, Ernesto P Raposo2, Maurício Domingues Coutinho-Filho2, Mauro Copelli2, Cornelis J Stam3, Linda Douw4

1Universidade Federal de Pernambuco, Departamento de Matemática, Recife, Brazil; 2Universidade Federal de Pernambuco, Departamento de Física, Recife, Brazil; 3Vrije University Amsterdam Medical Center, Department of Clinical Neurophysiology and MEG Center, Amsterdam, Netherlands; 4Vrije University Amsterdam Medical Center, Department of Anatomy & Neurosciences, Amsterdam, Netherlands

Correspondence: Fernando Santos (fansantos@dmat.ufpe.br)

BMC Neuroscience 2019, 20(Suppl 1):P72

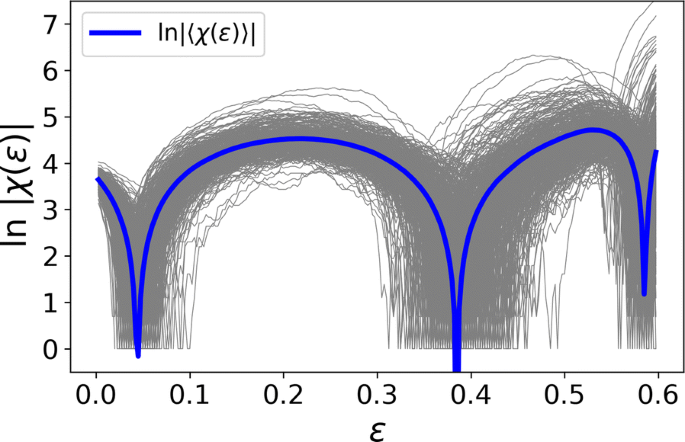

Functional brain networks are often constructed by quantifying correlations between time series of activity of brain regions. Their topological structure includes nodes, edges, triangles and even higher-dimensional objects. Topological data analysis (TDA) is an emerging framework for processing datasets from this perspective. In parallel, topology has proven essential for understanding fundamental questions in physics. Here we report the discovery of topological phase transitions in functional brain networks by merging concepts from TDA, topology, geometry, physics and network theory. We show that topological phase transitions occur when the Euler entropy has a singularity, which remarkably coincides with the emergence of multidimensional topological holes in the brain network, as illustrated in Fig. 1. Under certain hypotheses, the geometric nature of the transitions can be interpreted as an extension of percolation to high-dimensional objects. Owing to the universal character of phase transitions and the noise robustness of TDA, our findings open perspectives towards establishing reliable topological and geometrical markers for group and possibly individual differences in functional brain network organization.
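As a rough sketch of the quantities involved: thresholding a correlation matrix gives a graph whose cliques (nodes, edges, triangles, tetrahedra, and so on) form a clique complex with Euler characteristic χ = Σ_k (-1)^k c_k, where c_k counts k-simplices. Taking the Euler entropy as S_χ = ln|χ|, it diverges where χ crosses zero. The code below computes both for one threshold; sweeping the threshold would trace S_χ along the filtration. Truncating at `max_dim` is an approximation introduced here for illustration, and the function names are hypothetical.

```python
import itertools
import math


def clique_counts(adj, max_dim=3):
    """Count simplices of the clique complex up to dimension max_dim.

    adj: dict mapping integer node -> set of neighbours.
    Returns [n_nodes, n_edges, n_triangles, ...] (length max_dim + 1).
    """
    counts = [0] * (max_dim + 1)

    def extend(clique, candidates):
        counts[len(clique) - 1] += 1
        if len(clique) == max_dim + 1:
            return
        for v in sorted(candidates):
            if v > clique[-1]:  # grow cliques in increasing order: count each once
                extend(clique + [v], candidates & adj[v])

    for v in sorted(adj):
        extend([v], adj[v])
    return counts


def euler_entropy(corr, threshold, max_dim=3):
    """Euler characteristic chi and S = ln|chi| of the thresholded correlation graph."""
    n = len(corr)
    adj = {i: set() for i in range(n)}
    for i, j in itertools.combinations(range(n), 2):
        if corr[i][j] >= threshold:
            adj[i].add(j)
            adj[j].add(i)
    counts = clique_counts(adj, max_dim)
    chi = sum((-1) ** k * c for k, c in enumerate(counts))
    s = math.log(abs(chi)) if chi != 0 else float("-inf")  # singular where chi = 0
    return chi, s


corr = [[1.0, 0.9, 0.2, 0.8],
        [0.9, 1.0, 0.7, 0.1],
        [0.2, 0.7, 1.0, 0.6],
        [0.8, 0.1, 0.6, 1.0]]
for thr in (0.95, 0.75, 0.55, 0.15):
    print(thr, euler_entropy(corr, thr))
```

In this small example, lowering the threshold first adds edges and then closes a four-node loop, where χ passes through zero and S_χ becomes singular, the kind of event associated above with the appearance of a topological hole.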

P73 A whole-brain spiking neural network model linking basal ganglia, cerebellum, cortex and thalamus

Carlos Gutierrez1, Jun Igarashi2, Zhe Sun2, Hiroshi Yamaura3, Tadashi Yamazaki4, Markus Diesmann5, Jean Lienard1, Heidarinejad Morteza2, Benoit Girard6, Gordon Arbuthnott7, Hans Ekkehard Plesser8, Kenji Doya1

1Okinawa Institute of Science and Technology, Neural Computation Unit, Okinawa, Japan; 2Riken, Computational Engineering Applications Unit, Saitama, Japan; 3The University of Electro-Communications, Tokyo, Japan; 4The University of Electro-Communications, Graduate School of Informatics and Engineering, Tokyo, Japan; 5Jülich Research Centre, Institute of Neuroscience and Medicine (INM-6) & Institute for Advanced Simulation (IAS-6), Jülich, Germany; 6Sorbonne Universite, UPMC Univ Paris 06, CNRS, Institut des Systemes Intelligents et de Robotique (ISIR), Paris, France; 7Okinawa Institute of Science and Technology, Brain Mechanism for Behaviour Unit, Okinawa, Japan; 8Norwegian University of Life Sciences, Faculty of Science and Technology, Aas, Norway

Correspondence: Carlos Gutierrez (carlos.gutierrez@oist.jp)

BMC Neuroscience 2019, 20(Suppl 1):P73

The neural circuit linking the basal ganglia, the cerebellum and the cortex through the thalamus plays an essential role in motor and cognitive functions. However, how such functions are realized by multiple loop circuits with neurons of multiple types is still unknown. To investigate the dynamic nature of the whole-brain network, we built biologically constrained spiking neural network models of the basal ganglia [1, 2, 3], cerebellum, thalamus and cortex [4, 5] and ran an integrated simulation on the K supercomputer [8] using NEST 2.16.0 [6, 7, 9].

We replicated 1 s of biological time of resting-state activity in models of increasing scale, from 1 × 1 mm² to 9 × 9 mm² of cortical surface; the largest comprises 35 million neurons and 66 billion synapses in total. Simulations using a hybrid parallelization approach showed good weak scaling, with simulation times between 15 and 30 minutes, but revealed a bottleneck in network construction, which took between 6 and 9 hours.
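For a sense of scale, the totals quoted for the largest model imply roughly 1,900 synapses per neuron on average and about 430,000 model neurons per mm² of simulated cortical surface. The second figure divides the whole-model neuron count (all modelled regions, not cortex alone) by the cortical area, so it is a size ratio rather than a cortical density. The helper name is hypothetical.

```python
def scale_summary(neurons, synapses, side_mm):
    """Average synapses per neuron and model neurons per mm^2 of cortical surface."""
    area_mm2 = side_mm * side_mm
    return synapses / neurons, neurons / area_mm2


per_neuron, per_mm2 = scale_summary(35e6, 66e9, side_mm=9)
print(f"~{per_neuron:.0f} synapses per neuron, ~{per_mm2:.0f} neurons per mm^2")
```

Under weak scaling the model size grows by a factor of 81 from the smallest to the largest configuration, with computing resources grown in proportion, so ideally the simulation time stays constant.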

We also evaluated action selection with realistic topographic connections in the basal ganglia circuit in a 2-D target-reaching task, and observed selective activation and inhibition of neurons in the preferred directions in every nucleus leading to the output. Moreover, we tested reinforcement learning based on dopamine-dependent spike-timing-dependent synaptic plasticity.
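A common way to formulate dopamine-dependent STDP is a three-factor rule: pair-based STDP updates an eligibility trace, and the synaptic weight changes only in proportion to dopamine times that trace. The sketch below implements this generic form with hypothetical parameters. It is not necessarily the rule used in the authors' model; NEST, for instance, provides its own `stdp_dopamine_synapse` model, which is not reproduced here.

```python
import math


def da_stdp(pre_spikes, post_spikes, dopamine, t_end, dt=1.0,
            a_plus=0.01, a_minus=0.012, tau_stdp=20.0, tau_elig=200.0,
            lr=1.0, w0=0.5):
    """Three-factor rule: STDP writes to an eligibility trace, dopamine gates it.

    Times are in ms; pre_spikes/post_spikes are sets of integer step indices;
    dopamine(t) returns the dopamine signal relative to baseline at step t.
    Returns the final synaptic weight.
    """
    x_pre = x_post = elig = 0.0
    w = w0
    d_stdp = math.exp(-dt / tau_stdp)
    d_elig = math.exp(-dt / tau_elig)
    for t in range(int(t_end / dt)):
        x_pre *= d_stdp   # decaying traces of recent pre/post spikes
        x_post *= d_stdp
        elig *= d_elig
        if t in pre_spikes:
            x_pre += 1.0
            elig -= a_minus * x_post  # post-before-pre: depression tag
        if t in post_spikes:
            x_post += 1.0
            elig += a_plus * x_pre    # pre-before-post: potentiation tag
        w += lr * dopamine(t) * elig * dt  # weight moves only when dopamine != 0


    return w


def burst(t):
    """Hypothetical dopamine burst 100-110 ms after trial start."""
    return 1.0 if 100 <= t < 110 else 0.0


print(da_stdp({10}, {15}, burst, t_end=300.0))
```

Because the eligibility trace outlasts the spike pairing, a reward-related dopamine signal arriving tens of milliseconds later can still credit the synapses that were active, which is what makes this rule suitable for reinforcement learning.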

References

- 1.Liénard J, Girard B. A biologically constrained model of the whole basal ganglia addressing the paradoxes of connections and selection. Journal of Computational Neuroscience 2014 Jun 1;36(3):445–68.

- 2.Liénard J, Girard B, Doya K, et al. Action selection and reinforcement learning in a Basal Ganglia model. In Eighth International Symposium on Biology of Decision Making 2018 (Vol. 6226, pp. 597–606). Springer.

- 3.Gutierrez CE, et al. Spiking neural network model of the basal ganglia with realistic topological organization. Advances in Neuroinformatics 2018, https://doi.org/10.14931/aini2018.ps.15 [Poster].

- 4.Igarashi J, Moren K, Yoshimoto J, Doya K. Selective activation of columnar neural population by lateral inhibition in a realistic model of primary motor cortex. In Neuroscience 2014, the 44th Annual Meeting of the Society for Neuroscience (SfN 2014) Nov 15th. [Poster].

- 5.Zhe S, Igarashi J. A Virtual Laser Scanning Photostimulation Experiment of the Primary Somatosensory Cortex. In The 28th Annual Conference of the Japanese Neural Network Society 2018 Oct (pp. 116). Okinawa Institute of Science and Technology.

- 6.Gewaltig MO, Diesmann M. NEST (NEural Simulation Tool). Scholarpedia 2007 Apr 5;2(4):1430.

- 7.Linssen C, et al. NEST 2.16.0. Zenodo 2018. https://doi.org/10.5281/zenodo.1400175.

- 8.Miyazaki H, Kusano Y, Shinjou N, et al. Overview of the K computer system. Fujitsu Sci. Tech. J. 2012 Jul 1;48(3):302–9.

- 9.Jordan J, Ippen T, Helias M, et al. Extremely scalable spiking neuronal network simulation code: from laptops to exascale computers. Frontiers in Neuroinformatics 2018 Feb 16;12:2.
